Hello @Sarva, Pavan

Thanks for reaching out to us. I am a little confused about what you mean by "parties other than the tenant". Could you please provide more details?

If you mean individuals who have no role on your resource (for example, no Contributor or Owner role), the answer is no. Beyond that, you can also arrange for no human review on the Microsoft side.

In the meantime, since you are asking about privacy, I want to share some basic guidelines on the security and privacy of your data when using Azure OpenAI.

What data is passed to OpenAI

- The data submitted to the Azure OpenAI Service remains within Microsoft Azure and is not passed to OpenAI (the company) for model predictions.

- Azure has sole control and governance of the data and OpenAI.

- Learn more about how data provided to the Azure OpenAI service is handled, processed, used, and stored.

- The Azure OpenAI model cannot connect to the open internet in real time.

- Azure OpenAI was trained on certain data at a point in time and has no ability to get "the latest info" from the web.

- The Azure OpenAI service automatically encrypts any data that is persisted to the cloud, including training data and fine-tuned models.

- This encryption helps protect the data and ensures that it meets organizational security and compliance requirements.

- Learn further details on how Azure OpenAI handles the encryption of data at rest.

- Azure OpenAI processes the following types of data:

- Text prompts, queries and responses submitted by the user via the completions, search, and embeddings operations.

- Training & validation data. You can provide your own training data consisting of prompt-completion pairs for the purposes of fine-tuning an OpenAI model.

- Results data from the training process. After training a fine-tuned model, the service outputs metadata on the job, which includes tokens processed and validation scores at each step.

- Azure OpenAI stores and processes data to provide the service, monitor for abusive use, and to develop and improve the quality of Azure's Responsible AI systems.

- Training data provided by the customer is only used to fine-tune the customer's model and is not used by Microsoft to train or improve any Microsoft models.

- No prompts or completions are stored in the model during these operations, and prompts and completions are not used to train, retrain or improve the models.

- See the Microsoft Products and Services Data Protection Addendum, which governs data processing by the Azure OpenAI Service except as otherwise provided in the applicable Product Terms.

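To make the "prompt-completion pairs" mentioned above more concrete, here is a minimal sketch of how such training data could be prepared as JSON Lines. It assumes the JSONL layout with `prompt` and `completion` fields used for fine-tuning completion-style models; the example records and the `to_jsonl` helper are hypothetical, so please check the current fine-tuning documentation for the format your model expects.

```python
import json

# Hypothetical examples; real training data would come from your own domain.
examples = [
    {"prompt": "Classify the sentiment: 'Great service!' ->", "completion": " positive"},
    {"prompt": "Classify the sentiment: 'Very slow response.' ->", "completion": " negative"},
]


def to_jsonl(pairs):
    """Serialize prompt-completion pairs as JSON Lines (one JSON object per line)."""
    lines = []
    for pair in pairs:
        # Reject records where either field is missing, empty, or not a string.
        for key in ("prompt", "completion"):
            if not isinstance(pair.get(key), str) or not pair[key]:
                raise ValueError(f"missing or empty {key!r} in {pair!r}")
        lines.append(json.dumps({"prompt": pair["prompt"], "completion": pair["completion"]}))
    return "\n".join(lines) + "\n"


jsonl_text = to_jsonl(examples)
print(jsonl_text, end="")
```

Validating the records locally before upload keeps malformed rows out of the training file, which is the data the service then encrypts at rest as described above.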

Ways to secure your data

Although Azure OpenAI was designed to meet data protection, privacy, and security standards, it is the customer's responsibility to use the technology in compliance with applicable laws and regulations and in a manner that aligns with their specific business needs.

To ensure that only authorized people can access the data or the model, whether for training or for inference, you can use Azure role-based access control (Azure RBAC).
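As a rough illustration of what RBAC-based access looks like from the client side, the sketch below builds (but does not send) a completions request that authenticates with a bearer token instead of a shared API key. It rests on several assumptions: the token would be obtained separately (for example with the `azure-identity` package for the `https://cognitiveservices.azure.com/.default` scope), the caller has been assigned a suitable role such as "Cognitive Services OpenAI User" on the resource, and the resource name, deployment name, API version, and the `build_completion_request` helper are all placeholders for illustration.

```python
import json
import urllib.request


def build_completion_request(resource_name, deployment_name, access_token, prompt,
                             api_version="2023-05-15"):
    """Build a completions request that uses a bearer token rather than an API key."""
    url = (
        f"https://{resource_name}.openai.azure.com/openai/deployments/"
        f"{deployment_name}/completions?api-version={api_version}"
    )
    body = json.dumps({"prompt": prompt, "max_tokens": 16}).encode("utf-8")
    headers = {
        "Content-Type": "application/json",
        # The token identifies the caller; Azure RBAC decides whether the call is allowed.
        "Authorization": f"Bearer {access_token}",
    }
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


# Placeholder values only; nothing is sent over the network here.
request = build_completion_request("my-resource", "my-deployment", "<token>", "Hello")
print(request.full_url)
```

With this approach access can be revoked per user or per application by removing the role assignment, with no need to rotate a key that everyone shares.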

Azure Cognitive Services provides a layered security model. This model enables you to secure your Cognitive Services accounts to a specific subset of networks by using Virtual Networks and Private Endpoints.

How does the Azure OpenAI Service process data?

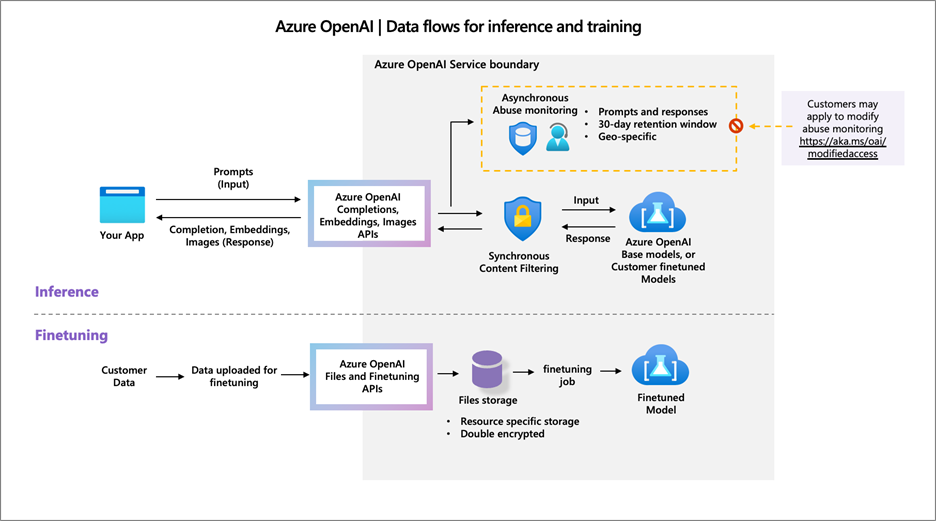

The diagram above illustrates how your data is processed. It covers three different types of processing:

- How the Azure OpenAI Service creates a fine-tuned (custom) model with your training data

- How the Azure OpenAI Service processes your text prompts to generate completions, embeddings, and search results; and

- How the Azure OpenAI Service and Microsoft personnel analyze prompts & completions for abuse, misuse or harmful content generation.

Azure OpenAI Service includes a content management system that works alongside core models to filter content. See Content filtering in Azure OpenAI Service to learn more.

Data Retention

- In addition to synchronous content filtering, the Azure OpenAI Service stores prompts and completions from the service for up to thirty (30) days to monitor for content and/or behaviors that suggest use of the service in a manner that may violate applicable product terms.

- Authorized Microsoft employees may review prompt and completion data that has triggered our automated systems to investigate and verify potential abuse.

- For customers who have deployed Azure OpenAI Service in the European Economic Area, the authorized Microsoft employees will be located in the European Economic Area.

Resources

- Microsoft Products and Services Data Protection Addendum

- Supplemental Terms of Use for Microsoft Azure Previews

- Data Privacy FAQ

- Microsoft General Data Protection Regulation (GDPR)

- General FAQ

I hope this helps. Please let me know if you need anything else. Thanks.

Regards,

Yutong

- Please accept the answer and vote 'Yes' if you found it helpful, to support the community. Thanks a lot.