I'm trying to create a Copy Activity within Azure Data Factory that makes a call to an API and stores the received object on my blob storage.

The API I'm trying to call needs a bunch of parameters, like this:

https://website.com/organizations/MY_ORGANIZATION/groups/MY_GROUP/mentions?access_token=MY_ACCESS_TOKEN&from_time=TIMESTAMP_X&to_time=TIMESTAMP_Y&count=100&offset=0

The values in all caps above are parameters that I need to supply to the API call. MY_ORGANIZATION and MY_ACCESS_TOKEN stay fixed; the others may change between runs.

My approach so far has been to split the URL and put the https://website.com/organizations/MY_ORGANIZATION/groups/MY_GROUP/ part in the "Base URL" box of the REST API linked service and the rest in the "Relative URL" box of the dataset.

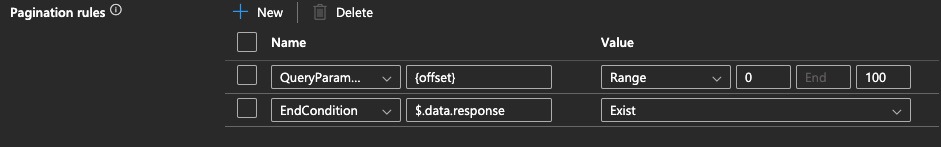

When I test the Copy Activity with all of the values hardcoded, I get a JSON file in my blob storage as expected. I can also replace the final zero with {offset} and configure pagination rules, which produces a JSON file containing one dictionary per API call, again as expected. However, I cannot get pagination to work once I parametrize the values.
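
To make clear what I expect pagination to do with the {offset} placeholder, here is a rough Python sketch. It is only my mental model, not how Data Factory implements it; the function name `paged_urls` and the page count are made up for illustration.

```python
from typing import List


def paged_urls(relative_url_template: str, page_size: int, pages: int) -> List[str]:
    """Substitute successive offsets (0, page_size, 2*page_size, ...) for {offset}."""
    return [
        relative_url_template.replace("{offset}", str(page * page_size))
        for page in range(pages)
    ]


if __name__ == "__main__":
    # Hypothetical template: only count and offset are shown for brevity.
    for url in paged_urls("mentions?count=100&offset={offset}", page_size=100, pages=3):
        print(url)
```

With the hardcoded URL, the output file looks like the result of calls of this shape, one response per offset.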

I've set up pipeline parameters for all of the values in all caps and I've managed to make successful API calls, but I'm unable to make pagination work at all and I can't figure out what I'm doing wrong.

My current string for the Base URL in the Linked Service is:

@{concat('https://website.com/organizations/',linkedService().org_id, '/groups/', linkedService().election_code,'/')}

And my current string for the Relative URL in the dataset is:

@concat('mentions?access_token=', dataset().token, '&from_time=', string(sub(div(ticks(dataset().start_datetime),10000000),div(ticks('1970-01-01T00:00:00.000'),10000000))),'&to_time=', string(sub(div(ticks(dataset().end_datetime),10000000),div(ticks('1970-01-01T00:00:00.000'),10000000))), '&count=100&offset={offset}')

(The "from_time" and "to_time" parameters require epoch timestamps in seconds, while the pipeline receives datetimes as input parameters, which is why the expressions look so ugly. Also, I'm aware that the token should be stored as a secret; it is inline here only for testing purposes.)
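
For anyone checking the timestamp arithmetic, this is a minimal Python sketch of what the expression above computes. It assumes ticks() counts 100-nanosecond intervals since 0001-01-01T00:00:00 and that the input is a timezone-naive ISO datetime; the Python `ticks` helper here is my own approximation, not the Data Factory function itself.

```python
from datetime import datetime

TICKS_PER_SECOND = 10_000_000  # one tick is 100 nanoseconds


def ticks(iso_timestamp: str) -> int:
    """Approximate ticks(): 100-ns intervals since 0001-01-01T00:00:00."""
    delta = datetime.fromisoformat(iso_timestamp) - datetime(1, 1, 1)
    whole_seconds = delta.days * 86_400 + delta.seconds
    return whole_seconds * TICKS_PER_SECOND + delta.microseconds * 10


def to_epoch_seconds(iso_timestamp: str) -> int:
    """Mirror sub(div(ticks(x), 10000000), div(ticks('1970-01-01...'), 10000000))."""
    epoch_start = ticks("1970-01-01T00:00:00.000") // TICKS_PER_SECOND
    return ticks(iso_timestamp) // TICKS_PER_SECOND - epoch_start


if __name__ == "__main__":
    print(to_epoch_seconds("2021-01-01T00:00:00"))
```

The two integer divisions turn ticks into whole seconds, and the subtraction shifts the origin from year 1 to 1970, so the result is a Unix timestamp in seconds.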

The pagination rules are unchanged from the test I ran with hardcoded values in the URL.

Am I doing something wrong? Should I use a different variable name for the offset inside the Relative URL?

Thank you for your help.