Update with a new case:

Hi, I have a huge Excel file that I want to import into a SQL database.

for each (DataRow drSheet in dtsheet.Rows)

{

sheetname = drsheet ["TABLE_NAME"].ToString();

OleDBCommand ocon = new OleDBCommand("select * from [" + sheetname + "]", cnn);

OleDataAdapter adp = new OleDataAdapter (ocon);

DataSet ds = new DataSet();

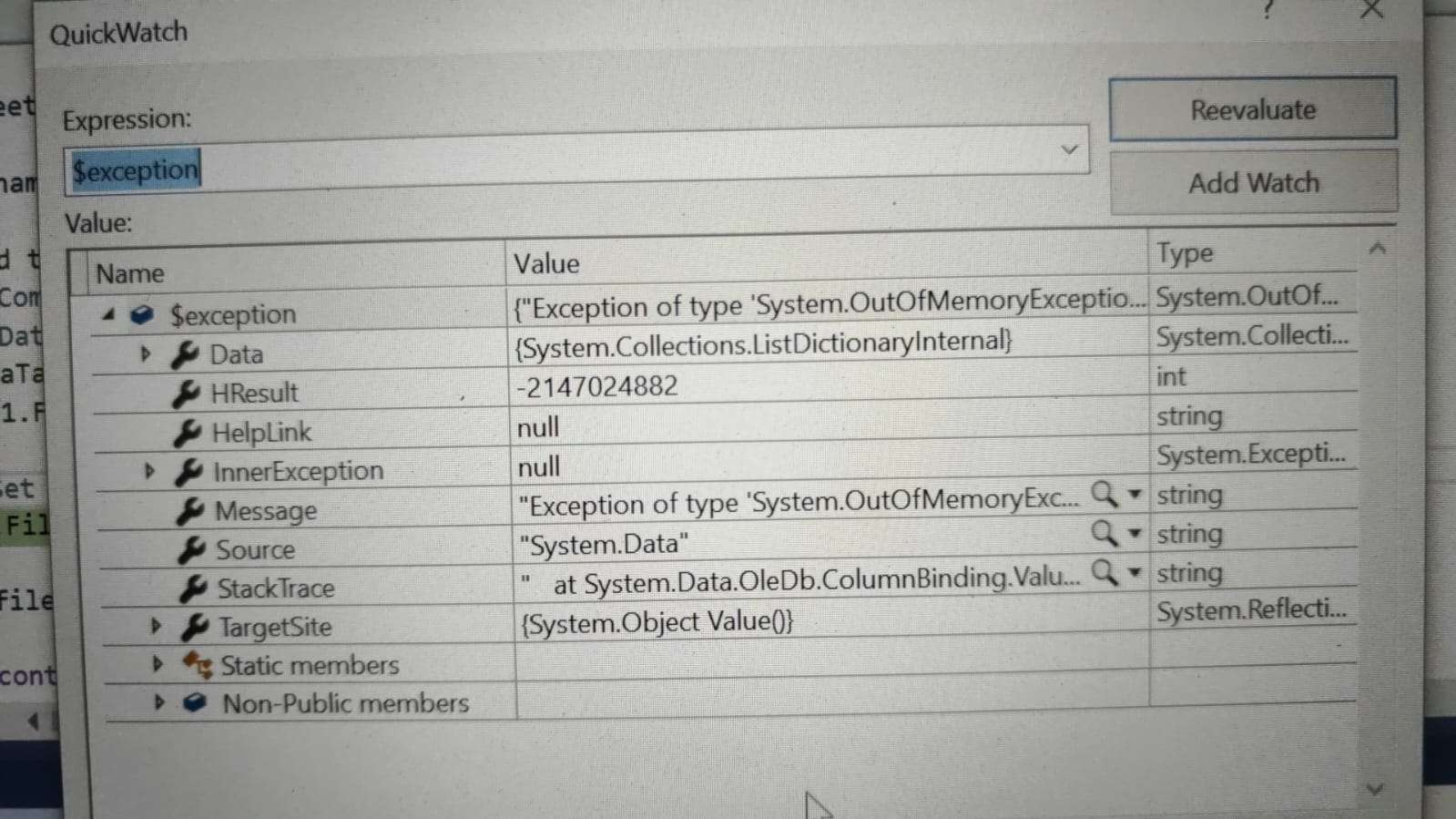

adp.Fill(ds); //Getting an error
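One common way to avoid loading a whole sheet into memory is to skip the `DataSet` and stream rows straight from the sheet into SQL Server: `OleDbCommand.ExecuteReader` returns a forward-only reader, and `SqlBulkCopy.WriteToServer` accepts any `IDataReader`, so rows are read one at a time instead of being materialized in a `DataTable`. This is a minimal sketch, assuming `cnn` is an open `OleDbConnection`, `adoconn` is an open `SqlConnection`, and the sheet's columns line up by position with the destination table. `SheetImport`, `SheetQuery`, `CopySheet` and the batch size of 10,000 are hypothetical choices, not part of the original code.

```csharp
using System;
using System.Data;
using System.Data.OleDb;
using System.Data.SqlClient;

static class SheetImport
{
    // Hypothetical helper: builds the query for one worksheet.
    public static string SheetQuery(string sheetName) =>
        "SELECT * FROM [" + sheetName + "]";

    // Hypothetical helper: streams one worksheet into the destination table
    // without filling a DataSet or DataTable first.
    public static void CopySheet(OleDbConnection cnn, SqlConnection adoconn,
                                 string sheetName, string destinationTable)
    {
        using (var cmd = new OleDbCommand(SheetQuery(sheetName), cnn))
        using (OleDbDataReader reader = cmd.ExecuteReader())
        using (var bulk = new SqlBulkCopy(adoconn))
        {
            bulk.DestinationTableName = destinationTable;
            bulk.BulkCopyTimeout = 0;     // 0 = no time limit; large sheets can take a while
            bulk.BatchSize = 10000;       // assumption: send rows to the server in batches of 10,000
            bulk.EnableStreaming = true;  // read from the IDataReader row by row
            bulk.WriteToServer(reader);   // with no ColumnMappings, columns are matched by ordinal
        }
    }
}
```

Inside the existing loop the call would be `SheetImport.CopySheet(cnn, adoconn, drSheet["TABLE_NAME"].ToString(), "Emp");`. If the destination table has columns the sheet does not supply (such as `Empid`, `Empfname` and `Emplname` in the code below), ordinal matching no longer lines up; add explicit `bulk.ColumnMappings.Add(sourceOrdinal, destinationOrdinal)` entries, and the unmapped destination columns then need to allow NULL or have a default constraint.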

Hi, I'm getting an out-of-memory exception at the `Merge` call. My Excel file has 150 columns and 1 million records. Here is my code. Please suggest what I should do.

```csharp
DataTable dt = new DataTable();

// Fixed columns that the Excel sheet does not supply, with their default values
dt.Columns.Add("Empid").DefaultValue = 1;
dt.Columns.Add("Empfname").DefaultValue = "test";
dt.Columns.Add("Emplname").DefaultValue = "test2";

// Give the Excel columns generic names (Column1, Column2, ...)
for (int i = 1; i < xlcolcnt; i++)
{
    xlData.Columns[i].ColumnName = "Column" + i;
}

dt.Merge(xlData, true, MissingSchemaAction.Add); // <-- OutOfMemoryException thrown here

// Pad dt with empty columns so it has as many columns as the destination table
// (dbcont is assumed to hold the destination table's column count)
int xlcocnt = dt.Columns.Count;
int diffcnt = dbcont - xlcocnt;

DataTable dt1 = new DataTable();
for (int i = 0; i < diffcnt; i++)
{
    dt1.Columns.Add("Column" + (xlcocnt + i + 1));
}

dt.Merge(dt1, true, MissingSchemaAction.Add);

using (SqlBulkCopy dtbc = new SqlBulkCopy(adoconn))
{
    dtbc.BulkCopyTimeout = 180;
    dtbc.DestinationTableName = "Emp";
    dtbc.WriteToServer(dt);
}
```
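The exception at the first `Merge` fits the numbers: `Merge` copies every row of `xlData` into `dt`, so afterwards both tables hold a full copy of a 1,000,000 × 150 table (150 million values each). One way to drop the second copy is to reshape `xlData` in place and pass it to `SqlBulkCopy` directly. This is a sketch under assumptions: three fixed `Emp*` columns first, then every sheet column renamed `Column1`, `Column2`, ..., then empty padding columns up to the destination column count. `EmpTablePrep`, `PrepareInPlace`, `AddAt` and the `int` type for `Empid` are hypothetical. It relies on `DataColumn.DefaultValue` being set before the column is added to the table, which is what gives the existing rows the default value.

```csharp
using System;
using System.Data;

static class EmpTablePrep
{
    // Hypothetical helper: reshapes the sheet table in place instead of merging
    // it into a second DataTable. dbColumnCount = destination table's column count.
    public static void PrepareInPlace(DataTable xlData, int dbColumnCount)
    {
        int sheetColumns = xlData.Columns.Count;

        // Assumption: every sheet column gets a generic name Column1..ColumnN.
        for (int i = 0; i < sheetColumns; i++)
        {
            xlData.Columns[i].ColumnName = "Column" + (i + 1);
        }

        // Fixed columns go first. DefaultValue is set before Columns.Add,
        // so the rows already in the table receive the default.
        AddAt(xlData, new DataColumn("Empid", typeof(int)) { DefaultValue = 1 }, 0); // assumption: int
        AddAt(xlData, new DataColumn("Empfname", typeof(string)) { DefaultValue = "test" }, 1);
        AddAt(xlData, new DataColumn("Emplname", typeof(string)) { DefaultValue = "test2" }, 2);

        // Pad with empty (DBNull) columns up to the destination column count.
        int next = sheetColumns + 1;
        while (xlData.Columns.Count < dbColumnCount)
        {
            xlData.Columns.Add("Column" + next++);
        }
    }

    // Adds a column, then moves it to the requested position.
    private static void AddAt(DataTable table, DataColumn column, int ordinal)
    {
        table.Columns.Add(column);
        column.SetOrdinal(ordinal);
    }
}
```

After `EmpTablePrep.PrepareInPlace(xlData, dbcont);`, a single `dtbc.WriteToServer(xlData);` replaces both `Merge` calls. The sheet is still held in memory once; this only removes the duplicate copy.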