I am running into an odd issue regarding the usage of spark notebooks to call a stored procedure that updates an Azure SQL Table. I have an Azure Synapse pipeline that calls spark notebooks to do a variety of things and the second to last step is to update a logging table in our Azure SQL db.

When I run the spark notebook manually (by entering in parameters or the one generated by the Synapse pipeline at runtime), the stored procedure executes and the table is updated. This stored procedure updates the rows copied and the execution timestamp.

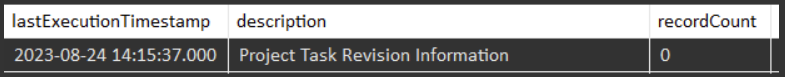

However, when the pipeline calls the spark notebook and runs it, the notebook runs successfully, including the portion with the stored procedure, but the table shows an update only for the execution timestamp and the recordCount field is ALWAYS 0, not the value shown in the screenshot.

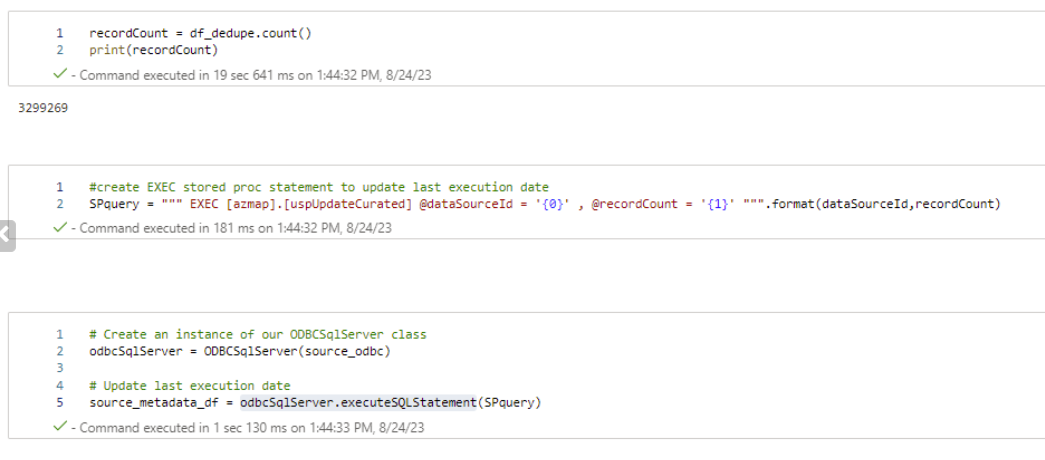

The screen shot below is from the spark notebook generated at runtime:

Here is the python below:

# Get a secret from key vault

def getKeyVaultSecret(secretName):

return mssparkutils.credentials.getSecret('synapsekeyvaultname',secretName,'keyvaultname')

class ODBCSqlServer():

"""This class encapsulates a JDBC connection to a SQL Server instance"""

def __init__(self, odbcUrl, service_principal_id=None, service_principal_secret=None, tenant_id=None):

"""Class Constructor, receives the JDBC URL"""

# Save the JDBC URL as an instance variable

self.odbcUrl = odbcUrl

self.service_principal_id = service_principal_id

self.service_principal_secret = service_principal_secret

self.tenant_id = tenant_id

self.use_service_principal = True if self.service_principal_id else False

# This is our "Skip Marker"

self.skip_marker = '>>>>>SKIP<<<<<'

def executeSQLStatement(self, sqlStatement):

with contextlib.closing(self.get_connection()) as conn:

with conn:

with contextlib.closing(conn.cursor()) as cursor:

cursor.execute(sqlStatement)

conn.commit()