I have a simple Azure Function (github code), that processes messages from a service bus queue. It's task is to make a few HTTP calls (exactly 6) and save the retrieved data to the MongoDb. The entire processing logic takes usually no more than 5 seconds. However, I'm seeing a huge number of function execution failures reported in the Application Insights with the following stack traces:

Microsoft.Azure.WebJobs.Host.FunctionTimeoutException:

at Microsoft.Azure.WebJobs.Host.Executors.FunctionExecutor+<TryHandleTimeoutAsync>d__35.MoveNext (Microsoft.Azure.WebJobs.Host, Version=3.0.37.0, Culture=neutral, PublicKeyToken=31bf3856ad364e35: D:\a\_work\1\s\src\Microsoft.Azure.WebJobs.Host\Executors\FunctionExecutor.cs:663)

at System.Runtime.ExceptionServices.ExceptionDispatchInfo.Throw (System.Private.CoreLib, Version=6.0.0.0, Culture=neutral, PublicKeyToken=7cec85d7bea7798e)

at System.Runtime.CompilerServices.TaskAwaiter.ThrowForNonSuccess (System.Private.CoreLib, Version=6.0.0.0, Culture=neutral, PublicKeyToken=7cec85d7bea7798e)

at System.Runtime.CompilerServices.TaskAwaiter.HandleNonSuccessAndDebuggerNotification (System.Private.CoreLib, Version=6.0.0.0, Culture=neutral, PublicKeyToken=7cec85d7bea7798e)

at Microsoft.Azure.WebJobs.Host.Executors.FunctionExecutor+<InvokeWithTimeoutAsync>d__33.MoveNext (Microsoft.Azure.WebJobs.Host, Version=3.0.37.0, Culture=neutral, PublicKeyToken=31bf3856ad364e35: D:\a\_work\1\s\src\Microsoft.Azure.WebJobs.Host\Executors\FunctionExecutor.cs:571)

at System.Runtime.ExceptionServices.ExceptionDispatchInfo.Throw (System.Private.CoreLib, Version=6.0.0.0, Culture=neutral, PublicKeyToken=7cec85d7bea7798e)

at System.Runtime.CompilerServices.TaskAwaiter.ThrowForNonSuccess (System.Private.CoreLib, Version=6.0.0.0, Culture=neutral, PublicKeyToken=7cec85d7bea7798e)

at System.Runtime.CompilerServices.TaskAwaiter.HandleNonSuccessAndDebuggerNotification (System.Private.CoreLib, Version=6.0.0.0, Culture=neutral, PublicKeyToken=7cec85d7bea7798e)

at Microsoft.Azure.WebJobs.Host.Executors.FunctionExecutor+<ExecuteWithWatchersAsync>d__32.MoveNext (Microsoft.Azure.WebJobs.Host, Version=3.0.37.0, Culture=neutral, PublicKeyToken=31bf3856ad364e35: D:\a\_work\1\s\src\Microsoft.Azure.WebJobs.Host\Executors\FunctionExecutor.cs:527)

at System.Runtime.ExceptionServices.ExceptionDispatchInfo.Throw (System.Private.CoreLib, Version=6.0.0.0, Culture=neutral, PublicKeyToken=7cec85d7bea7798e)

at System.Runtime.CompilerServices.TaskAwaiter.ThrowForNonSuccess (System.Private.CoreLib, Version=6.0.0.0, Culture=neutral, PublicKeyToken=7cec85d7bea7798e)

at System.Runtime.CompilerServices.TaskAwaiter.HandleNonSuccessAndDebuggerNotification (System.Private.CoreLib, Version=6.0.0.0, Culture=neutral, PublicKeyToken=7cec85d7bea7798e)

at Microsoft.Azure.WebJobs.Host.Executors.FunctionExecutor+<ExecuteWithLoggingAsync>d__26.MoveNext (Microsoft.Azure.WebJobs.Host, Version=3.0.37.0, Culture=neutral, PublicKeyToken=31bf3856ad364e35: D:\a\_work\1\s\src\Microsoft.Azure.WebJobs.Host\Executors\FunctionExecutor.cs:306)

at System.Runtime.ExceptionServices.ExceptionDispatchInfo.Throw (System.Private.CoreLib, Version=6.0.0.0, Culture=neutral, PublicKeyToken=7cec85d7bea7798e)

at Microsoft.Azure.WebJobs.Host.Executors.FunctionExecutor+<ExecuteWithLoggingAsync>d__26.MoveNext (Microsoft.Azure.WebJobs.Host, Version=3.0.37.0, Culture=neutral, PublicKeyToken=31bf3856ad364e35: D:\a\_work\1\s\src\Microsoft.Azure.WebJobs.Host\Executors\FunctionExecutor.cs:352)

at System.Runtime.ExceptionServices.ExceptionDispatchInfo.Throw (System.Private.CoreLib, Version=6.0.0.0, Culture=neutral, PublicKeyToken=7cec85d7bea7798e)

at System.Runtime.CompilerServices.TaskAwaiter.ThrowForNonSuccess (System.Private.CoreLib, Version=6.0.0.0, Culture=neutral, PublicKeyToken=7cec85d7bea7798e)

at System.Runtime.CompilerServices.TaskAwaiter.HandleNonSuccessAndDebuggerNotification (System.Private.CoreLib, Version=6.0.0.0, Culture=neutral, PublicKeyToken=7cec85d7bea7798e)

at Microsoft.Azure.WebJobs.Host.Executors.FunctionExecutor+<TryExecuteAsync>d__18.MoveNext (Microsoft.Azure.WebJobs.Host, Version=3.0.37.0, Culture=neutral, PublicKeyToken=31bf3856ad364e35: D:\a\_work\1\s\src\Microsoft.Azure.WebJobs.Host\Executors\FunctionExecutor.cs:108)

This doesn't make much sense to me because as previously mentioned the entire logic of the function shouldn't take too long to execute. I have also setup a timeout on the HTTP calls to 5 seconds to reduce running time of the function if there's an issue with the APIs queried.

I can't see any good reason for why this would be happening. It also seems to be somehow connected to an increase in the service bus load. I also checked MongoDb monitoring (because it doesn't show in the AI logs) and all of the reported operations are sub 50ms. When I tried to replicate with pushing a few messages onto a queue, these were processed very quickly (sub 5 sec) without any issues.

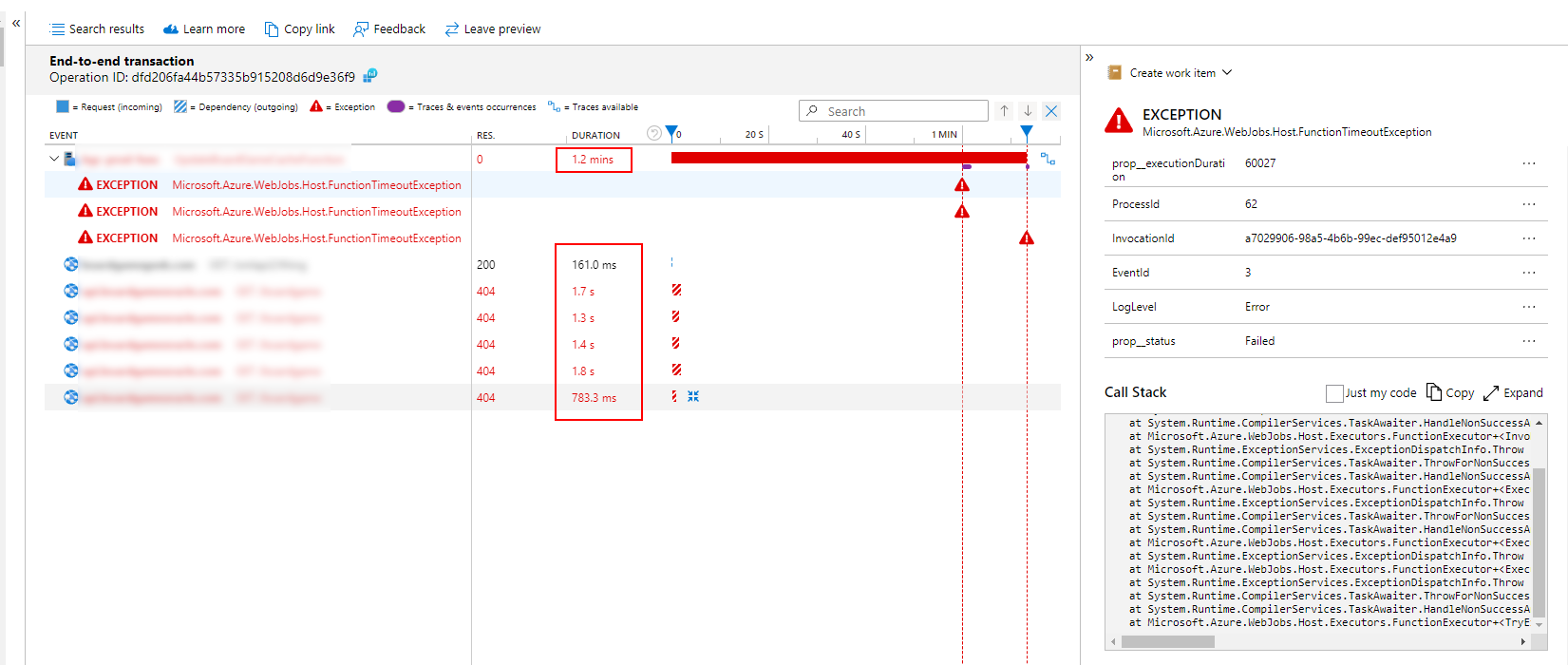

The below is the screenshot from one of the reported timeouts.

I should also mention that initially function timeout was set to 5min and similar was happening. I reduced it to 1min (see below config) to avoid unnecessary costs related to function running longer than expected.

Unsure if this will help with diagnosing this issue but the below is my host.json file content:

{

"version": "2.0",

"extensions": {

"serviceBus": {

"clientRetryOptions": {

"mode": "exponential",

"tryTimeout": "00:01:00",

"delay": "00:00:00.80",

"maxDelay": "00:01:00",

"maxRetries": 3

},

"prefetchCount": 0,

"transportType": "amqpWebSockets",

"autoCompleteMessages": true,

"maxAutoLockRenewalDuration": "00:05:00",

"maxConcurrentCalls": 16,

"maxConcurrentSessions": 8,

"maxMessageBatchSize": 1000,

"minMessageBatchSize": 1,

"maxBatchWaitTime": "00:00:30",

"sessionIdleTimeout": "00:01:00",

"enableCrossEntityTransactions": false

}

},

"functionTimeout": "00:01:00",

"logging": {

"applicationInsights": {

"samplingSettings": {

"isEnabled": true,

"excludedTypes": "Request"

}

}

}

}

Any help would be appreciated. I'm happy to share more logs if necessary

Kind Regards

Mikolaj