Hello there

I was wondering how to send custom metrics from a Python function app to Azure Application Insights through the Azure OpenCensus integration. See the MS documentation under: opencensus-python.
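
Side note on configuration: `new_metrics_exporter` takes a `connection_string`, and an Application Insights connection string typically looks like `InstrumentationKey=<key>`, optionally followed by `;IngestionEndpoint=<url>`. The environment variable used in my code is named after the bare instrumentation key. If it really holds only the key, a small sketch like this could normalize it first. The helper names are hypothetical and are not part of opencensus.

```python
def connection_string_from_key(value: str) -> str:
    """Hypothetical helper: turn a bare instrumentation key into a minimal connection string.

    If the value already looks like a connection string, return it unchanged.
    """
    value = value.strip()
    if value.startswith("InstrumentationKey="):
        return value
    return f"InstrumentationKey={value}"


def parse_connection_string(connection_string: str) -> dict:
    """Hypothetical helper: split 'Name=value;Name=value' into a dict for inspection."""
    parts = {}
    for segment in connection_string.split(";"):
        if segment.strip():
            # partition on the first '=' only, so values may themselves contain '='
            name, _, value = segment.partition("=")
            parts[name.strip()] = value.strip()
    return parts


if __name__ == "__main__":
    key = "00000000-0000-0000-0000-000000000000"  # placeholder, not a real key
    print(parse_connection_string(connection_string_from_key(key)))
```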

I can create one view, which also shows up under the Metrics tab in Application Insights, but unfortunately the view using DistributionAggregation does not seem to work. Are there some limitations, or am I doing something wrong? Here is a code example from my LogHandler class:

    import os
    from datetime import datetime

    from opencensus.ext.azure import metrics_exporter
    from opencensus.stats import aggregation as aggregation_module
    from opencensus.stats import measure as measure_module
    from opencensus.stats import stats as stats_module
    from opencensus.stats import view as view_module
    from opencensus.tags import tag_key as tag_key_module
    from opencensus.tags import tag_map as tag_map_module
    from opencensus.tags import tag_value as tag_value_module


    class LogHandler(object):

        def __init__(self):
            # instantiate base classes from opencensus
            self.stats = stats_module.stats
            self.view_manager = self.stats.view_manager
            self.stats_recorder = self.stats.stats_recorder

            # Azure integration exporter
            self.exporter = metrics_exporter.new_metrics_exporter(
                connection_string=os.environ['APPLICATIONINSIGHTS_INSTRUMENTATION_KEY'])
            self.view_manager.register_exporter(self.exporter)  # register exporter

            self.create_tags()          # create tags
            self.create_views()         # create views
            self.register_all_views()   # register all views

        def create_tags(self):
            self.base_url_tag = tag_key_module.TagKey("url")
            self.params_tag = tag_key_module.TagKey("params")
            self.response_code_tag = tag_key_module.TagKey("response_code")
            self.response_message_tag = tag_key_module.TagKey("response_message")

        def create_views(self):
            tag_keys = [self.base_url_tag, self.params_tag,
                        self.response_code_tag, self.response_message_tag]

            # request view: counts outbound requests
            self.request_measure = measure_module.MeasureInt(
                "requests", "number of outbound requests per function", "requests")
            self.requests_view = view_module.View(
                "requests view", "number of requests", tag_keys,
                self.request_measure, aggregation_module.CountAggregation())

            # latency view: distribution of request latencies
            self.latency_measure_ms = measure_module.MeasureFloat(
                "latency", "The request latency in milliseconds", "ms")
            self.latency_view = view_module.View(
                "latency", "The distribution of the request latencies", tag_keys,
                self.latency_measure_ms,
                # Latency in buckets:
                # [>=0ms, >=500ms, >=1500ms, >=2500ms, >=3500ms, >=5000ms, >=7500ms, >=10s, >=15s, >=30s]
                aggregation_module.DistributionAggregation(
                    [0, 500, 1500, 2500, 3500, 5000, 7500, 10000, 15000, 30000]))

        def register_all_views(self):
            self.view_manager.register_view(self.requests_view)
            self.view_manager.register_view(self.latency_view)

        def log_request(self, base_url, param, response_code, latency):
            codes = {200: 'OK', 400: "BAD_REQUEST", 404: "NOT_FOUND", 408: "TIMEOUT"}
            # fall back to the numeric code so unmapped codes don't raise KeyError
            response_message = codes.get(response_code, str(response_code))

            mmap = self.stats_recorder.new_measurement_map()
            tmap = tag_map_module.TagMap()

            mmap.measure_int_put(self.request_measure, 1)  # increment by one
            mmap.measure_float_put(self.latency_measure_ms, latency)

            tmap.insert(self.base_url_tag, tag_value_module.TagValue(str(base_url)))
            tmap.insert(self.params_tag, tag_value_module.TagValue(str(param)))
            tmap.insert(self.response_code_tag, tag_value_module.TagValue(str(response_code)))
            tmap.insert(self.response_message_tag, tag_value_module.TagValue(str(response_message)))

            mmap.record(tmap)

            # debug: inspect the first aggregated point locally
            metrics = list(mmap.measure_to_view_map.get_metrics(datetime.utcnow()))
            print(metrics[0].time_series[0].points[0])

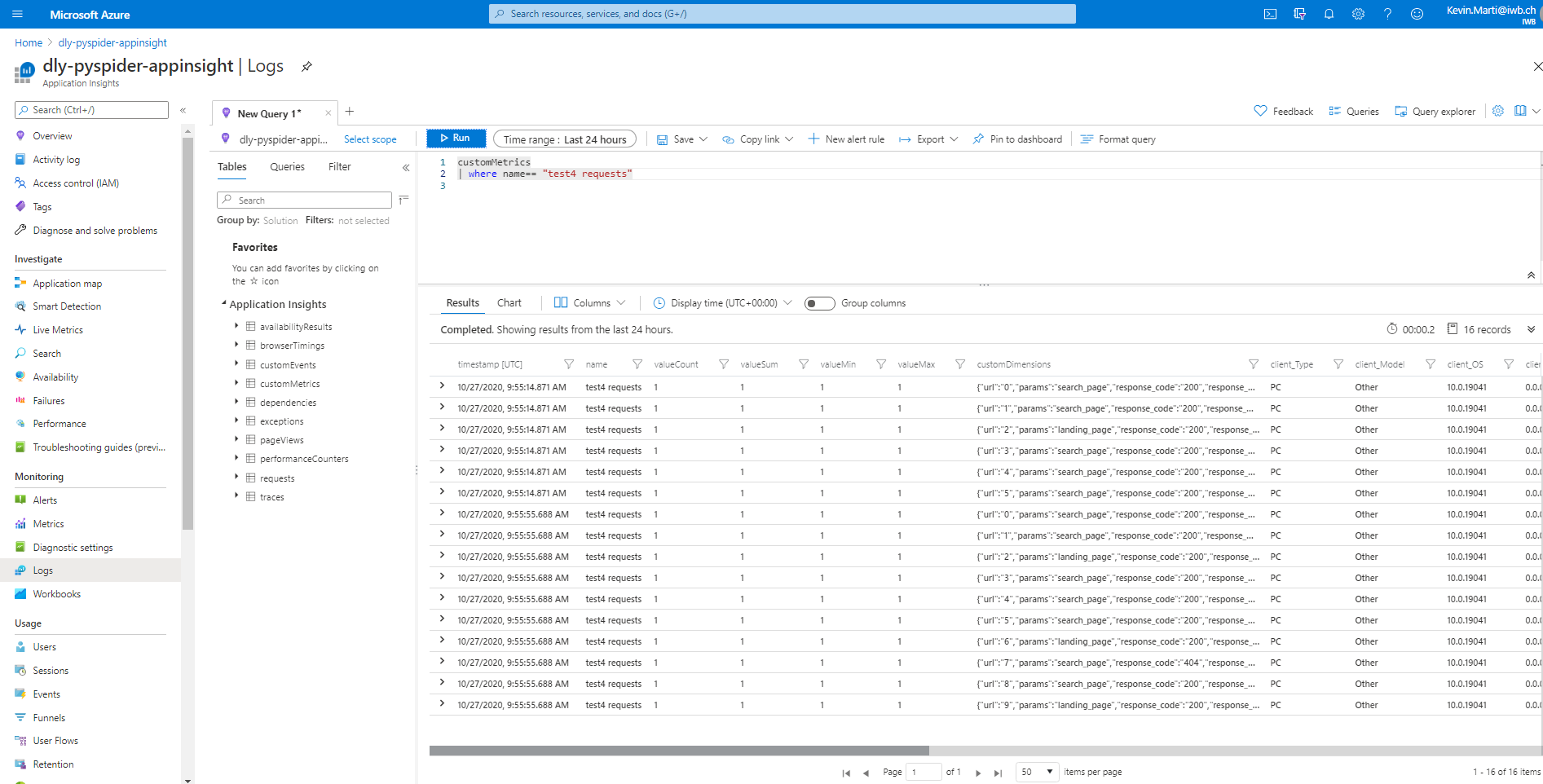
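
For comparison, this is roughly what I expect the latency view to accumulate per time series: not a single number like the count view, but a count, a mean, a sum of squared deviations, and per-bucket counts. It is a minimal pure-Python sketch under assumptions. The class and field names are hypothetical and do not mirror OpenCensus internals. The bucket layout (one more bucket than boundaries, with an underflow bucket below the first boundary) is the usual OpenCensus convention, assumed here.

```python
from bisect import bisect_right
from dataclasses import dataclass, field
from typing import List, Sequence

# Boundaries copied from the latency view in the question (milliseconds).
LATENCY_BOUNDS_MS = [0, 500, 1500, 2500, 3500, 5000, 7500, 10000, 15000, 30000]


@dataclass
class DistributionPoint:
    """Hypothetical stand-in for one distribution point; not an OpenCensus class.

    Assumed bucket layout: bucket 0 is (-inf, bounds[0]), bucket i is
    [bounds[i-1], bounds[i]), and the last bucket is [bounds[-1], +inf).
    """
    bounds: Sequence[float]
    count: int = 0
    mean: float = 0.0
    sum_of_squared_deviation: float = 0.0
    bucket_counts: List[int] = field(default_factory=list)

    def __post_init__(self) -> None:
        # one more bucket than there are boundaries
        if not self.bucket_counts:
            self.bucket_counts = [0] * (len(self.bounds) + 1)

    def record(self, value: float) -> None:
        # Welford's online update keeps mean and squared deviation numerically stable
        self.count += 1
        delta = value - self.mean
        self.mean += delta / self.count
        self.sum_of_squared_deviation += delta * (value - self.mean)
        # bisect_right puts a value equal to a boundary into the bucket that starts there
        self.bucket_counts[bisect_right(self.bounds, value)] += 1

    @property
    def total(self) -> float:
        # sum of all recorded values, derived from count and mean
        return self.mean * self.count


if __name__ == "__main__":
    point = DistributionPoint(LATENCY_BOUNDS_MS)
    for latency_ms in [120.0, 480.0, 500.0, 2600.0, 31000.0]:
        point.record(latency_ms)
    print(point.count, point.mean, point.bucket_counts)
```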

Edit: I later found the tags under customDimensions.

Best regards

Kevin Marti