Hello @Yu Geng, welcome to Microsoft Q&A, and thank you for sharing the situation. It took a fair amount of experimentation, but I now have an answer.

To reverse the conversion (0x224556454E545F545950 -> "IkVWRU5UX1R...), use the expression below:

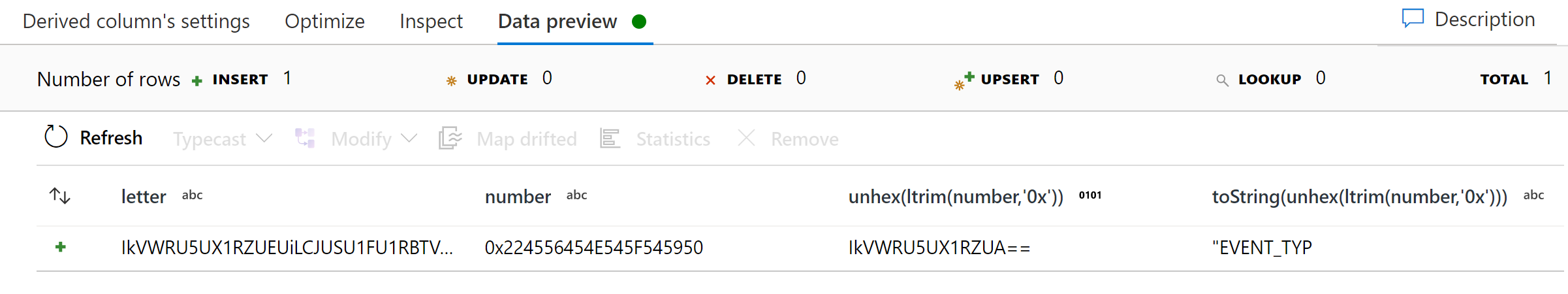

unhex(ltrim(number,'0x'))

Also, you may not need fromBase64 at all. When I tried:

toString(unhex(ltrim(number,'0x')))

I saw readable text. See the screenshot below. I think the text is truncated because the hex value you shared is much shorter than the base64 value you shared. Base64 is more information-dense than hex notation on a per-character basis: each hex character carries 4 bits, while each Base64 character carries 6.
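To check this outside of Data Factory, here is a minimal Python sketch (not the data flow expression itself) that decodes the hex sample from the question and compares it with the Base64 form of the same bytes. The variable names are mine, and it assumes the payload is UTF-8 text.

```python
import base64

# Hex sample copied from the question.
hex_value = "0x224556454E545F545950"

# Drop only the literal "0x" prefix. Slicing is used instead of
# lstrip("0x"), because lstrip removes ANY leading '0' or 'x'
# characters and could eat a significant leading zero.
digits = hex_value[2:] if hex_value.startswith("0x") else hex_value

raw = bytes.fromhex(digits)                   # hex digits -> raw bytes
text = raw.decode("utf-8")                    # assumption: payload is UTF-8
b64 = base64.b64encode(raw).decode("ascii")   # same bytes as Base64

print(text)
print(b64)
print(len(digits), "hex characters vs", len(b64), "Base64 characters")
```

The Base64 output starts with the same characters as the value you shared, which is why I believe both are encodings of the same data, with the hex sample covering only the first few bytes. One thing worth checking on your side: as far as I can tell from the documentation, ltrim with a second argument trims any of the listed characters rather than the exact prefix, so a value such as 0x0A... might lose its leading zero.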

It may also be possible to alter the Copy Activity mapping or the dataset schemas so the contents do not get converted to hex in the first place. I do not have a Salesforce environment, so I cannot tell you exactly which setting to change, but I think it is worth trying. The change would be to set the column type to string, probably on the sink, but possibly on both source and sink.