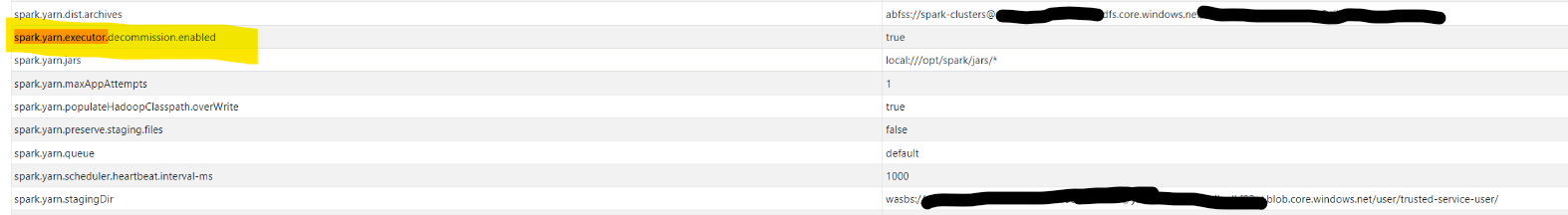

The Microsoft implementation of Spark that is found in Synapse has an unusual property named "spark.yarn.executor.decommission.enabled". This seems to be totally undocumented.

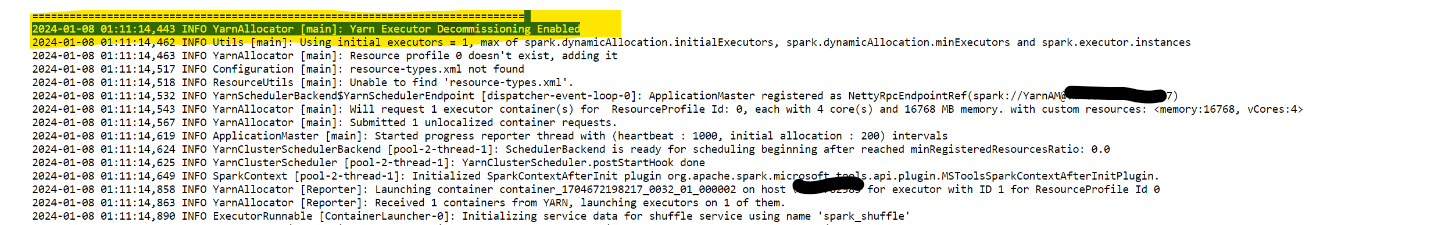

Whenever it is present and set to "true", a message like this appears in the driver logs:

INFO YarnAllocator [main]: Yarn Executor Decommissioning Enabled
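To confirm whether a given session started with this feature on, one option is to scan a saved copy of the driver log for that message. This is only a minimal sketch: the marker text is copied from the log line above, while the function name `find_decommission_lines` and the sample log text are made up for illustration.

```python
# Marker text copied from the driver log line quoted above.
DECOMMISSION_MARKER = "Yarn Executor Decommissioning Enabled"


def find_decommission_lines(log_text):
    """Return every driver log line that contains the marker message."""
    return [line for line in log_text.splitlines() if DECOMMISSION_MARKER in line]


# Hypothetical log excerpt; only the middle line comes from a real driver log.
sample_log = "\n".join([
    "INFO SomeOtherComponent [main]: unrelated startup message",
    "INFO YarnAllocator [main]: Yarn Executor Decommissioning Enabled",
    "INFO SomeOtherComponent [main]: another unrelated message",
])

matches = find_decommission_lines(sample_log)
for line in matches:
    print(line)
```

An empty result would only show that the message was not logged. It would not prove that node decommissioning is off.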

I have not found documentation, but I believe this is related to the YARN resource manager implementation in Synapse, and I believe it is related to "autoscale" in particular, which allows nodes of the cluster to be removed if needed.

It would be nice to have a better understanding of this property. I'm finding that this may be a Microsoft customization to YARN, and it might be incompatible with certain Spark features. For example, this property seems to conflict with (or supersede) the behavior of the idle timeout for dynamically allocated executors: spark.dynamicAllocation.executorIdleTimeout

When I set spark.dynamicAllocation.executorIdleTimeout to ten minutes or so, it is not respected. The underlying node will be powered off at an unexpected time, even though the Spark executor that was running on it had not been idle for the full ten minutes. This is causing a number of problems, especially for features like "localCheckpoint". I would prefer it if the Synapse YARN cluster would not power off the nodes that are being used for Spark jobs.
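For reference, this is roughly the combination of settings I am describing, written as a small Python sketch. The helper names `build_spark_conf` and `apply_conf` are hypothetical. Setting spark.yarn.executor.decommission.enabled to "false" is only an experiment worth trying, since I do not know whether Synapse honors an override of this undocumented property.

```python
def build_spark_conf(idle_timeout="600s", decommission_enabled=False):
    """Build the Spark settings discussed above as a plain dict of strings."""
    return {
        # Standard Spark dynamic allocation settings.
        "spark.dynamicAllocation.enabled": "true",
        "spark.dynamicAllocation.executorIdleTimeout": idle_timeout,
        # Undocumented Synapse property; overriding it is an assumption.
        "spark.yarn.executor.decommission.enabled": str(decommission_enabled).lower(),
    }


def apply_conf(builder, conf):
    """Apply each key/value pair to a SparkSession builder and return it."""
    for key, value in conf.items():
        builder = builder.config(key, value)
    return builder


conf = build_spark_conf()
for key, value in conf.items():
    print(f"{key}={value}")

# Usage where pyspark is available and no session exists yet:
#   from pyspark.sql import SparkSession
#   spark = apply_conf(SparkSession.builder, conf).getOrCreate()
```

These settings are read when the application starts, so they would need to be in place before the session is created rather than changed on a running one.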

Can anyone share the intended behavior of spark.yarn.executor.decommission.enabled? Is it compatible with dynamic executor allocation? Is this a Microsoft enhancement to Spark? Is it found in the other Spark offerings (Fabric and HDInsight)? Should it be causing problems with the expected behavior of spark.dynamicAllocation.executorIdleTimeout? How do I get support when these properties conflict with each other? I have tried to open a couple of cases with support (CSS), and they don't appear to have any information to share, despite the fact that this YARN-related property seems to be something that was home-grown at Microsoft.

Any help would be very much appreciated.