Veera Mummidi - Thanks for the question and for using the MS Q&A platform.

It looks like the error is related to the permissions of the managed identity (MI) or service principal (SP) that Azure Data Factory uses for authentication. The error message suggests that the MI/SP lacks the permissions needed to access the source and sink data stores. When you run the pipeline in debug mode, it uses your own credentials to access the data stores, which is why it succeeds. When the pipeline is triggered, however, it runs with the MI/SP credentials, which may not have the necessary permissions.

To resolve this issue, you can try the following steps:

- As source: In Storage Explorer, grant the MI/SP at least Execute permission for ALL upstream folders and the file system, along with Read permission for the files to copy. Alternatively, in Access control (IAM), grant the MI/SP at least the Storage Blob Data Reader role.

- As sink: In Storage Explorer, grant the MI/SP at least Execute permission for ALL upstream folders and the file system, along with Write permission for the sink folder. Alternatively, in Access control (IAM), grant the MI/SP at least the Storage Blob Data Contributor role.

- Ensure that the network firewall settings in the storage account are configured correctly. Turning on firewall rules for your storage account blocks incoming requests for data by default, unless the requests originate from a service operating within an Azure Virtual Network (VNet).
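The two Storage Explorer permission rules above can be written down as a small Python sketch. It assumes an ADLS Gen2 (hierarchical namespace) account, where an ACL entry for a named identity uses the short form `user:<object-id>:rwx`; the helper names `source_acl_entries` and `sink_acl_entries`, the example paths, and the object ID are hypothetical. The sketch only computes which entry each path needs (Execute on the file-system root and every upstream folder, Read on the source file, Write on the sink folder); it does not apply anything, and it ignores default ACLs and the ACL mask. Execute is also added on the sink folder because ADLS Gen2 requires `-wx` on a folder to create files inside it.

```python
from __future__ import annotations

from pathlib import PurePosixPath


def _entry(object_id: str, perms: str) -> str:
    """Format a named-user ACL entry in short form, e.g. user:<oid>:r-x."""
    mask = "".join(p if p in perms else "-" for p in "rwx")
    return f"user:{object_id}:{mask}"


def _traverse(path: PurePosixPath) -> list[str]:
    """File-system root plus every folder above `path`, listed top-down."""
    return [str(p) for p in reversed(path.parents)]


def source_acl_entries(object_id: str, file_path: str) -> dict[str, str]:
    """Execute on the root and all upstream folders, Read on the file to copy."""
    target = PurePosixPath("/" + file_path.strip("/"))
    acl = {folder: _entry(object_id, "x") for folder in _traverse(target)}
    acl[str(target)] = _entry(object_id, "r")
    return acl


def sink_acl_entries(object_id: str, folder_path: str) -> dict[str, str]:
    """Execute on the root and all upstream folders, Write on the sink folder."""
    target = PurePosixPath("/" + folder_path.strip("/"))
    acl = {folder: _entry(object_id, "x") for folder in _traverse(target)}
    # Creating files inside a folder also needs Execute on that folder, hence -wx.
    acl[str(target)] = _entry(object_id, "wx")
    return acl


if __name__ == "__main__":
    oid = "00000000-0000-0000-0000-000000000000"  # hypothetical MI/SP object ID
    for path, entry in source_acl_entries(oid, "raw/2024/sales.csv").items():
        print(path, entry)
    for path, entry in sink_acl_entries(oid, "curated/out").items():
        print(path, entry)
```

Each value is the entry to add for the MI/SP object ID on that path, for example through Storage Explorer. Source and sink are computed separately because they may live in different file systems or storage accounts.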

In short, make sure the proper permissions are assigned on the storage account, as in the screenshots below.

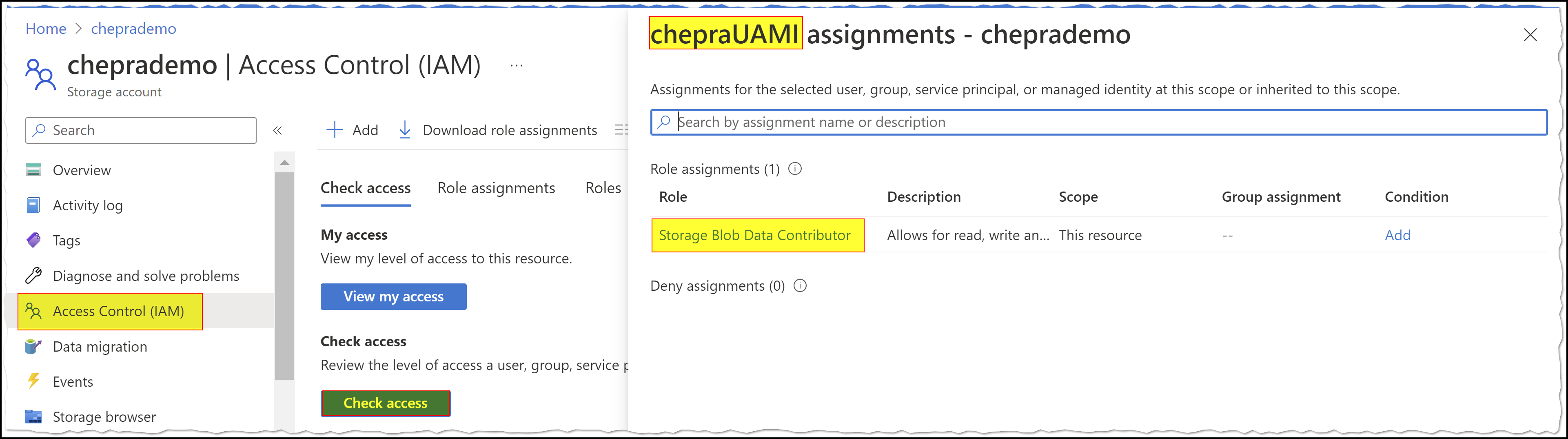

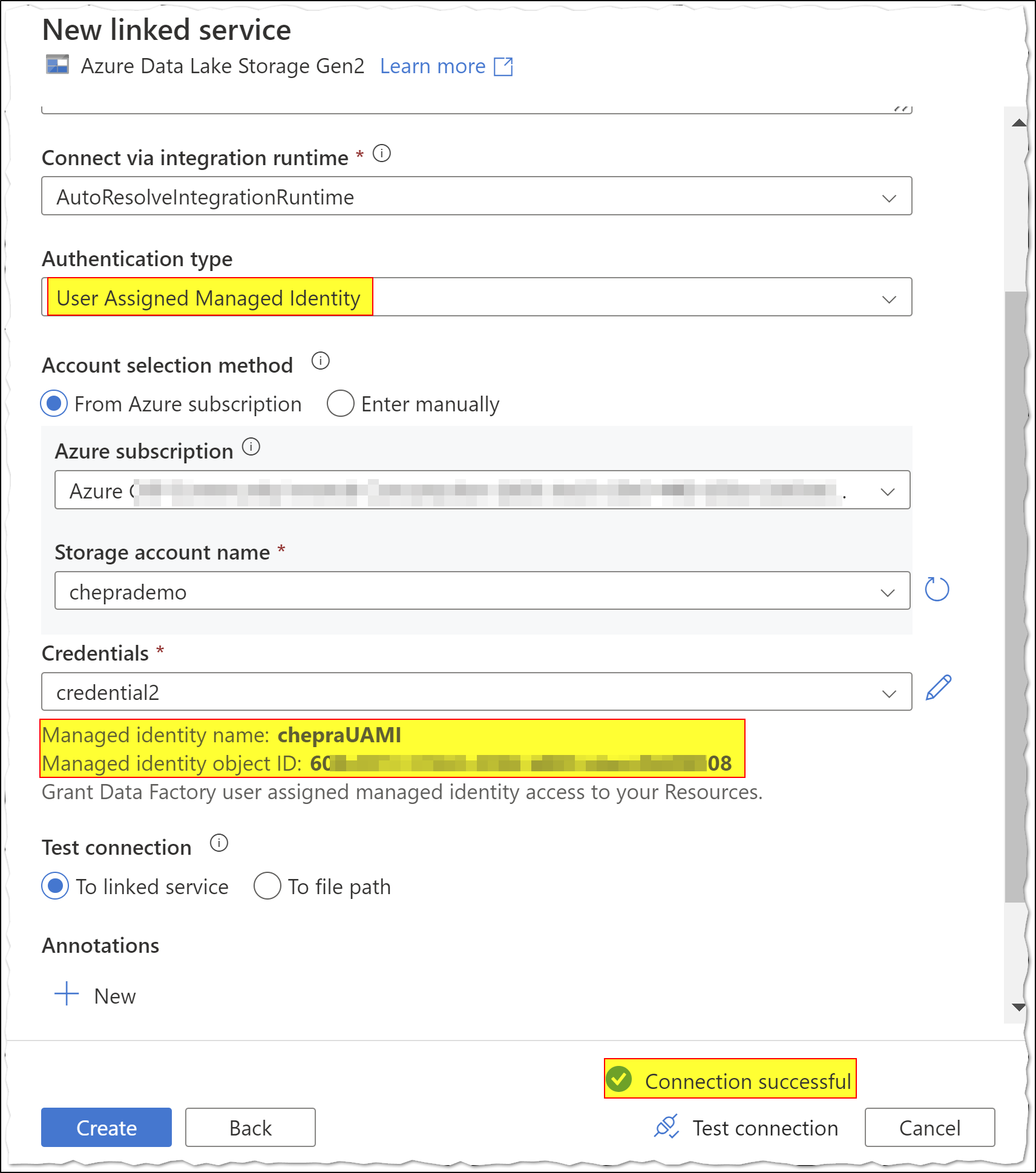

To reproduce the issue, I created a user-assigned managed identity named chepraUAMI.

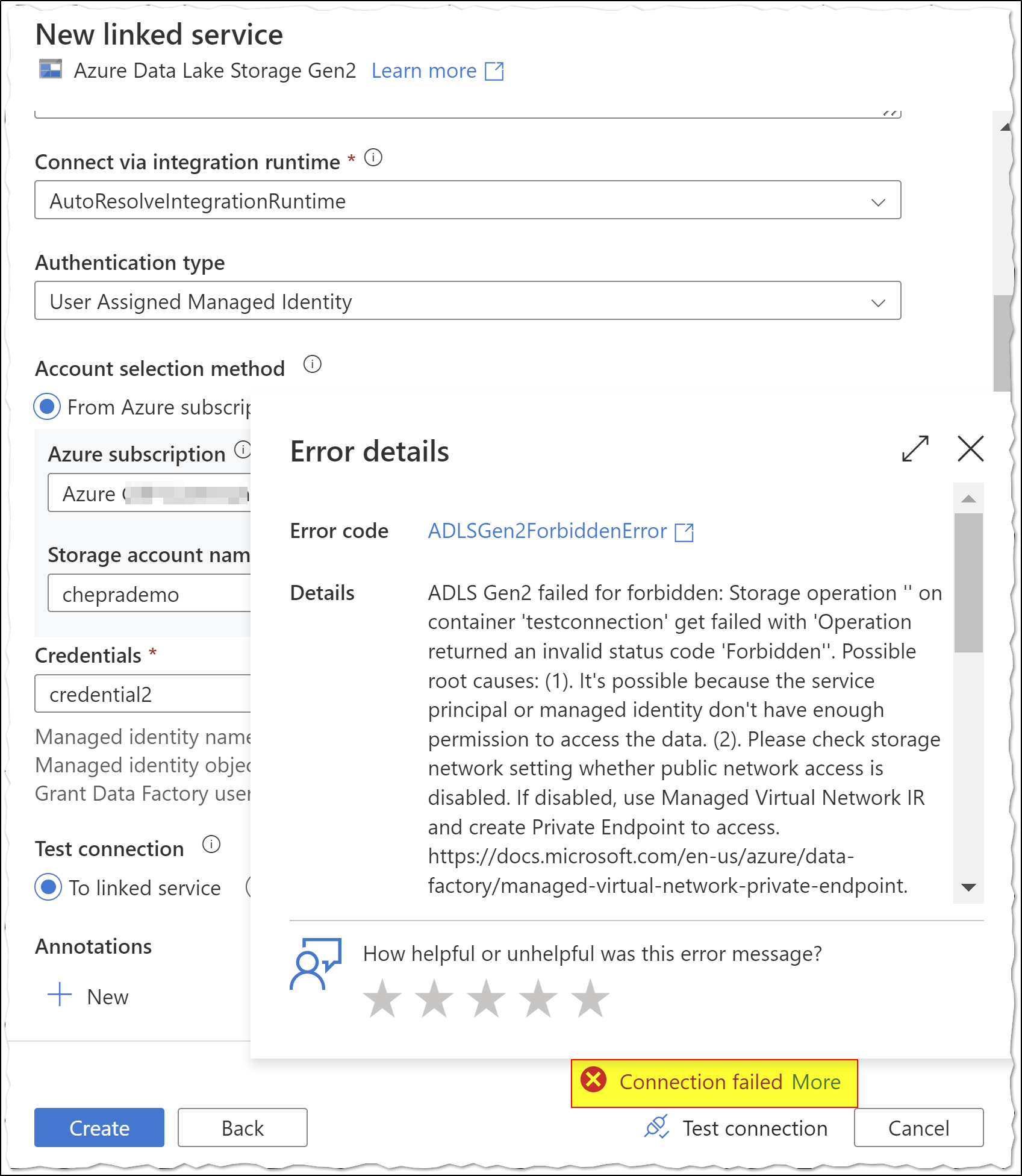

Error: When I tried to access the storage account without the required permissions:

Make sure the user-assigned managed identity has the Storage Blob Data Contributor role on the storage account.

Success: After granting the permissions, I was able to connect successfully using the user-assigned managed identity.
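If you take the Access control (IAM) route, the assignment comes down to a role name and a scope. The helper below is a hypothetical sketch, not part of any Azure SDK: it picks the minimum built-in role named earlier for a source or a sink and builds the standard Azure Resource Manager scope string for the storage account, optionally narrowed to a single container. The subscription, resource group, account, and container values are placeholders.

```python
from __future__ import annotations

# Minimum built-in roles from the answer above (assumption: one role per direction).
MIN_ROLE = {
    "source": "Storage Blob Data Reader",
    "sink": "Storage Blob Data Contributor",
}


def role_assignment_for(direction: str, subscription_id: str, resource_group: str,
                        account: str, container: str | None = None) -> tuple[str, str]:
    """Return (role name, ARM scope) for granting the MI/SP access to one store."""
    if direction not in MIN_ROLE:
        raise ValueError(f"direction must be 'source' or 'sink', got {direction!r}")
    scope = (f"/subscriptions/{subscription_id}/resourceGroups/{resource_group}"
             f"/providers/Microsoft.Storage/storageAccounts/{account}")
    if container:
        # Narrow the assignment to a single blob container.
        scope += f"/blobServices/default/containers/{container}"
    return MIN_ROLE[direction], scope


if __name__ == "__main__":
    role, scope = role_assignment_for("sink", "<subscription-id>", "<resource-group>",
                                      "<storage-account>", "<container>")
    print(role)
    print(scope)
```

The two values correspond to the `--role` and `--scope` arguments of `az role assignment create`, or to the role and scope you pick in the portal. The role must be granted to the identity the linked service actually uses, which in this repro is chepraUAMI.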

For more details, refer to ADF - User-assigned managed identity authentication and Support for user-assigned managed identity in Azure Data Factory.

If the issue persists, you may need to recreate the MI/SP with the necessary permissions and update the credentials in Azure Data Factory.

Hope this helps. Do let us know if you have any further queries.
