I have an Azure Data Factory pipeline that had been running for a year without any issues, and then we suddenly received this error:

Internal Server Error In Synapse batch operation: 'Cluster creation failed due to unexpected error. Please retry'

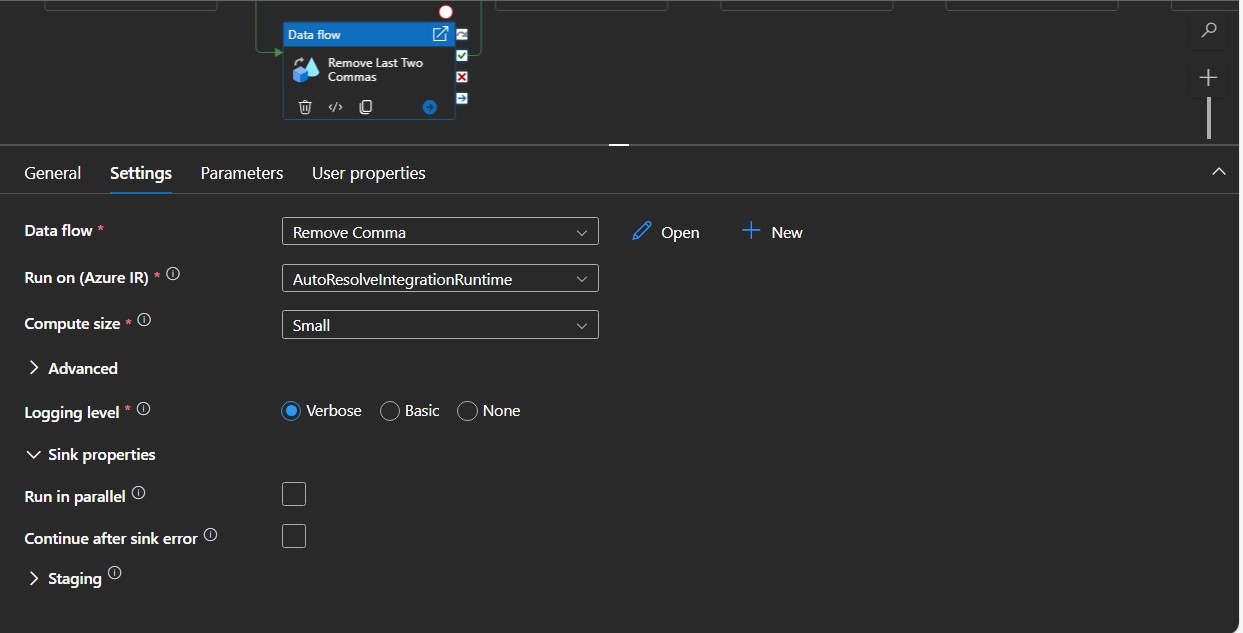

The error came from a Data Flow activity that removes the trailing commas from every row of the source CSV file, which contains 7,000+ rows. Here is what the activity and its properties look like.

In the run history of the failed run, I checked the input and output of this activity:

INPUT:

```json
{
  "dataflow": {
    "referenceName": "Remove Comma",
    "type": "DataFlowReference",
    "parameters": {},
    "datasetParameters": {
      "GetSourceFile": {},
      "sink1": {}
    }
  },
  "staging": {},
  "compute": {
    "coreCount": 8,
    "computeType": "General"
  },
  "traceLevel": "Fine",
  "continuationSettings": {
    "customizedCheckpointKey": "Accounting_CleanUp-Remove Last Two Commas-daf923f2-bf64-4531-aa3b-daa49fb8399f"
  }
}
```

OUTPUT:

```json
{
  "status": {
    "Name": "Dataflow",
    "WorkspaceName": "Sample",
    "ComputeName": "General-8-1",
    "Id": 281,
    "FabricJobId": null,
    "AppId": null,
    "Result": "Failed",
    "AppInfo": null,
    "SchedulerInfo": {
      "CurrentState": "ended",
      "SubmittedAt": "02/28/2024 13:09:26",
      "QueuedAt": "02/28/2024 13:09:26",
      "ScheduledAt": "02/28/2024 13:09:31",
      "EndedAt": "02/28/2024 13:35:27",
      "CancellationRequestedAt": null
    },
    "ErrorInfo": [
      {
        "Message": "[plugins.Sample.General-8-1-a65edd63-a1ae-4d97-9e7c-e6373b8e4e4e.5879e7dc-a96c-40b2-b118-dc1e96bdd010 WorkspaceType:<ADF> CCID:<>] MaxClusterCreationAttempts=[3] Attempt=[0] ComputeNodeSize=[Small] ClusterId=[c04f0d81-84af-4d54-a7f0-d91b3eb9a586] AdlaResourceId=[] [Creation] -> [Cleanup]. The cluster creation has failed more than the [3] of times. IsTimeout=[False] IsTerminal=[True] IsRetryable=[True] RetryOnClusterCreation=[False] ErrorType=[None] ErrorMessage=[]",
        "ErrorCode": "FAILED_CLUSTER_CREATION"
      }
    ],
    "LivyInfo": {
      "JobCreationRequest": null
    },
    "State": "error",
    "Log": null,
    "PluginInfo": {
      "PreparationStartedAt": "02/28/2024 13:09:31",
      "ResourceAcquisitionStartedAt": "02/28/2024 13:09:31",
      "SubmissionStartedAt": null,
      "MonitoringStartedAt": null,
      "CleanupStartedAt": "02/28/2024 13:35:27",
      "CurrentState": "Ended"
    }
  },
  "effectiveIntegrationRuntime": "AutoResolveIntegrationRuntime",
  "executionDuration": 1705,
  "durationInQueue": {
    "integrationRuntimeQueue": 0
  }
}
```
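The flags buried in the `ErrorInfo` message are easier to read once they are pulled apart. Below is a minimal Python sketch that extracts the `Key=[value]` pairs from that message and measures how long the run spent between `ResourceAcquisitionStartedAt` and `CleanupStartedAt`. It works on a trimmed copy of the output above with the values copied verbatim. The helper names are hypothetical, and the timestamp format assumes the MM/DD/YYYY layout shown in the output.

```python
import re
from datetime import datetime

# Trimmed excerpt of the activity output above (values copied from the run).
OUTPUT = {
    "status": {
        "ErrorInfo": [{
            "Message": (
                "[plugins.Sample.General-8-1-a65edd63-a1ae-4d97-9e7c-e6373b8e4e4e."
                "5879e7dc-a96c-40b2-b118-dc1e96bdd010 WorkspaceType:<ADF> CCID:<>] "
                "MaxClusterCreationAttempts=[3] Attempt=[0] ComputeNodeSize=[Small] "
                "ClusterId=[c04f0d81-84af-4d54-a7f0-d91b3eb9a586] AdlaResourceId=[] "
                "[Creation] -> [Cleanup]. The cluster creation has failed more than "
                "the [3] of times. IsTimeout=[False] IsTerminal=[True] "
                "IsRetryable=[True] RetryOnClusterCreation=[False] ErrorType=[None] "
                "ErrorMessage=[]"
            ),
            "ErrorCode": "FAILED_CLUSTER_CREATION",
        }],
        "PluginInfo": {
            "ResourceAcquisitionStartedAt": "02/28/2024 13:09:31",
            "SubmissionStartedAt": None,
            "CleanupStartedAt": "02/28/2024 13:35:27",
        },
    }
}

TIMESTAMP_FORMAT = "%m/%d/%Y %H:%M:%S"  # assumption: month/day/year


def parse_flags(message: str) -> dict[str, str]:
    """Collect every Key=[value] pair from the error message."""
    return dict(re.findall(r"(\w+)=\[([^\]]*)\]", message))


def seconds_between(start: str, end: str) -> int:
    delta = datetime.strptime(end, TIMESTAMP_FORMAT) - datetime.strptime(start, TIMESTAMP_FORMAT)
    return int(delta.total_seconds())


def summarize(output: dict) -> dict:
    status = output["status"]
    error = status["ErrorInfo"][0]
    flags = parse_flags(error["Message"])
    plugin = status["PluginInfo"]
    return {
        "error_code": error["ErrorCode"],
        "node_size": flags.get("ComputeNodeSize"),
        "max_attempts": int(flags["MaxClusterCreationAttempts"]),
        "is_retryable": flags.get("IsRetryable") == "True",
        # A null SubmissionStartedAt means no job was ever submitted.
        "job_submitted": plugin["SubmissionStartedAt"] is not None,
        "acquisition_seconds": seconds_between(
            plugin["ResourceAcquisitionStartedAt"], plugin["CleanupStartedAt"]
        ),
    }


print(summarize(OUTPUT))
```

For this run the summary shows a `Small` node size, `IsRetryable=[True]`, no job submission, and 1556 seconds (about 26 minutes) spent between resource acquisition and cleanup. In other words, the time went into cluster creation attempts rather than into processing the file.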

I'm not sure what suddenly went wrong, since we haven't changed anything in this pipeline.

Should we increase the compute size to something larger than Small, since the volume of data being ingested keeps growing?
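If you do try a larger cluster, the size comes from the `compute` block shown in the activity input (`coreCount`, `computeType`). Below is a minimal Python sketch of that change. It assumes the activity is edited as pipeline JSON, with the Data Flow activity (`"type": "ExecuteDataFlow"`) keeping `compute` under `typeProperties` and its retry settings under `policy`. The function name and the chosen values (16 cores, 2 retries, 120-second interval) are illustrative assumptions, not a recommendation. In the output above `SubmissionStartedAt` is `null`, so the failure happened during cluster creation, before any rows were read. A bigger size may therefore not be what resolves it, whereas an activity-level retry matches the error's own "Please retry".

```python
import copy


def with_larger_compute(activity: dict, core_count: int = 16,
                        retries: int = 2, retry_interval_s: int = 120) -> dict:
    """Return a copy of a Data Flow activity with more cores and a retry policy.

    Hypothetical helper: assumes the pipeline-JSON layout where the activity
    holds "typeProperties" -> "compute" and a sibling "policy" block.
    """
    updated = copy.deepcopy(activity)  # leave the caller's dict untouched
    compute = updated.setdefault("typeProperties", {}).setdefault("compute", {})
    compute["coreCount"] = core_count
    compute.setdefault("computeType", "General")  # keep existing type if set
    policy = updated.setdefault("policy", {})
    policy["retry"] = retries
    policy["retryIntervalInSeconds"] = retry_interval_s
    return updated


activity = {
    "name": "Remove Last Two Commas",
    "type": "ExecuteDataFlow",
    "typeProperties": {"compute": {"coreCount": 8, "computeType": "General"}},
}
print(with_larger_compute(activity)["typeProperties"]["compute"])
```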