Hi, please can you help me understand the limits imposed by managed online endpoints?

I'm attempting to simulate concurrent calls to the endpoint, which helps me understand what happens when several callers issue requests at the same time. At the moment I can't get the endpoint to respond to more than a fixed number of requests at the same time.

That number appears to be determined by the number of instances running per deployment, and it seems to be fixed at 2 per instance, which is low.

When testing I have...

- created a Managed Online Endpoint

- created a single Deployment of a model using 1 instance

- used k6 from https://k6.io/ to test performance

When running the following command using k6 to the endpoint/deployment...

```
k6 run --vus 2 --duration 1s .\sample_test.js
```

...I receive 200 responses. If I increase this to...

```
k6 run --vus 3 --duration 1s .\sample_test.js
```

...I receive a mix of 429 and 200 responses.

If I increase the number of running instances of the Deployment to 2, I can increase `--vus` to 4 and receive only 200s, but at 5 I get the mix of 429 and 200 responses again.

Similarly, if I increase the Deployment instance count to 3, `--vus` can go up to 6 but no higher before 429s recur.

The magic number therefore appears to be just 2 per Deployment instance. The size of the SKU used for the Deployment compute makes no difference: I've used SKUs anywhere between 2 and 16 vCPUs, with various RAM configurations, and the numbers did not change.
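The three data points above fit a simple linear pattern: the number of concurrent callers served without 429s is 2 × the instance count, regardless of SKU. A minimal sketch in plain JavaScript (run with Node, not k6) that encodes this observed ceiling and checks it against those data points. `PER_INSTANCE_LIMIT = 2` is the value these tests observed, not a documented Azure setting, and the function names are hypothetical.

```javascript
// Empirical per-instance concurrency ceiling observed in the k6 tests above.
// Assumption: this is an observation, not a documented Azure Machine Learning constant.
const PER_INSTANCE_LIMIT = 2;

// Highest --vus value that returned only 200s for a given instance count.
function observedCeiling(instanceCount) {
  return PER_INSTANCE_LIMIT * instanceCount;
}

// Predict whether a k6 run with `vus` virtual users should start seeing 429s.
function expectThrottling(vus, instanceCount) {
  return vus > observedCeiling(instanceCount);
}

// Data points from the tests: [instances, max VUs with only 200s, first VUs with 429s]
const observations = [
  [1, 2, 3],
  [2, 4, 5],
  [3, 6, 7],
];

for (const [instances, okVus, throttledVus] of observations) {
  console.log(
    `${instances} instance(s): ${okVus} VUs ->`,
    expectThrottling(okVus, instances) ? '429s expected' : '200s only',
    `| ${throttledVus} VUs ->`,
    expectThrottling(throttledVus, instances) ? '429s expected' : '200s only'
  );
}
```

If the model holds, every row prints "200s only" for the first VU count and "429s expected" for the second.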

I originally tested this in the UKSouth region and, to rule out the region as the cause, I also set up the same configuration in the WestEurope region, with the same results.

I also sent single calls at the same time from three different locations (three different VMs rather than my local client machine) to test three different inbound IPs at once, with the same results.

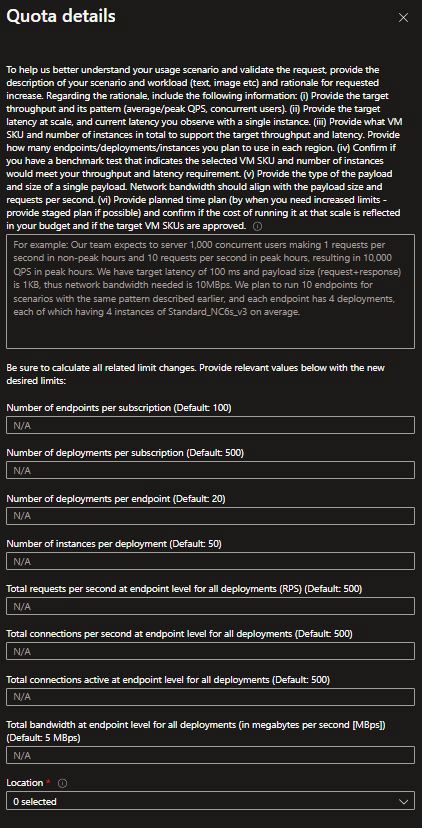

My question therefore is: why is the number so low (2), and if this is a quota thing, what do I need to do to increase it? And is this documented anywhere? Thanks

To recreate what I have done...

- Deploy a managed online endpoint.

- Deploy a deployment of your model to that endpoint, running a single instance.

- Have some sample request data in a file (sample_data.json in my example, on a Windows machine).

- Install k6 from https://k6.io/docs/get-started/installation/

- Use the sample_test.js file below, replacing the values of the following consts with your own:
  - apiUrl
  - bearerToken
  - modeldeploymentname
  - jsonDataPath

- Run the k6 commands:

  ```
  k6 run --vus 2 --duration 1s .\sample_test.js
  k6 run --vus 3 --duration 1s .\sample_test.js
  ```

- The second command will report a mix of 429s and 200s.

Note: I chose k6 to recreate an issue that one of our developers found: they received 429 errors when calling the model deployment from one of our APIs. k6 itself is not the issue. It is merely a simple way to recreate the same problem experienced by other callers.

Thanks in advance - Neil

Here is the sample_test.js file you can use:

```javascript
// Sample Test Call using K6 - Neil McAlister Feb 2024
// For use with the k6 executable e.g.
// k6 run --vus 2 --duration 1s c:\location\pathtothis\sample_test.js

import http from 'k6/http';
import { check } from 'k6';

// Replace these values with your actual endpoint and token
const apiUrl = 'https://yourendpointname.yourlocation.inference.ml.azure.com/score';
const bearerToken = 'YourEndpointPrimaryKeyFromTheConsumeTab';
const modeldeploymentname = 'YourDeploymentName';

// Path to your JSON file containing input data
const jsonDataPath = 'C:\\WindowsPath\\To\\YourJSONfile\\sample_data.json';

// Read input data from the JSON file (k6 only allows open() at top level, in the init context)
const jsonData = open(jsonDataPath);

export default function () {
  // Define the HTTP headers: the bearer token for auth, plus the
  // azureml-model-deployment header to target a specific deployment
  const headers = {
    'Content-Type': 'application/json',
    'Authorization': `Bearer ${bearerToken}`,
    'azureml-model-deployment': modeldeploymentname,
  };

  // Make the POST request
  const response = http.post(apiUrl, jsonData, { headers: headers });

  // Check if the request was successful (status code 2xx) and whether it was throttled (429)
  check(response, {
    'Request was successful': (r) => r.status >= 200 && r.status < 300,
    'Request was not throttled (429)': (r) => r.status !== 429,
  });

  // Log the response details (you can remove this in a real test)
  console.log(`Response status: ${response.status}`);
  //console.log(`Response body: ${response.body}`);
}
```
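The throttling pattern can also be reproduced locally, without Azure, to sanity-check the test harness. The sketch below is a toy model and not Azure's implementation. `createLimitedEndpoint` is a hypothetical stand-in that admits at most `limit` in-flight requests and answers 429 to the rest. `burst` fires N calls concurrently, roughly like N k6 VUs starting their first iteration at once.

```javascript
// Toy stand-in for a deployment that admits at most `limit` in-flight requests.
// Hypothetical model for illustration only; it is not how Azure implements throttling.
function createLimitedEndpoint(limit, latencyMs = 50) {
  let inFlight = 0;
  return async function call() {
    if (inFlight >= limit) {
      return 429; // rejected immediately because too many requests are pending
    }
    inFlight += 1;
    try {
      await new Promise((resolve) => setTimeout(resolve, latencyMs)); // simulated scoring time
      return 200;
    } finally {
      inFlight -= 1;
    }
  };
}

// Fire `n` calls concurrently and tally the returned status codes.
async function burst(n, call) {
  const statuses = await Promise.all(Array.from({ length: n }, () => call()));
  const counts = {};
  for (const s of statuses) counts[s] = (counts[s] || 0) + 1;
  return counts;
}

// Example: a limit of 2 per instance with 1 instance, hit by 3 concurrent callers.
burst(3, createLimitedEndpoint(2 * 1)).then((counts) => console.log(counts));
```

With a limit of 2 and three simultaneous callers, two calls get 200 and one gets 429. This matches the shape of the `--vus 3` result against a single instance.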