Greetings, I hope this message finds you well.

I'm working on a solution to transfer over 10 TB of data from a SharePoint Online "Preservation Hold Library" to Azure Blob Storage, in order to archive deleted SharePoint Online files.

From the resources I've reviewed, it appears that using PowerShell would require downloading the files to local storage before copying them to Azure Blob Storage, which is not the approach I want. However, I'm open to reconsidering PowerShell if there is a way to move SharePoint Online files directly to Azure Blob Storage.

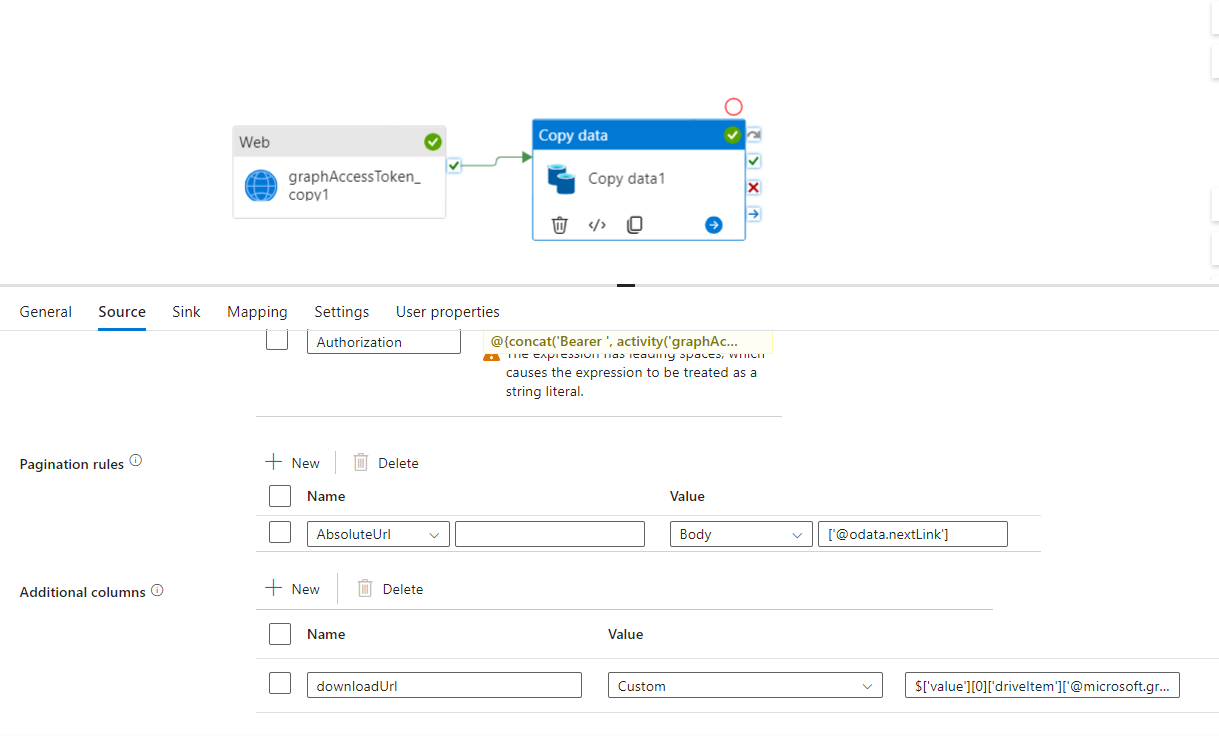

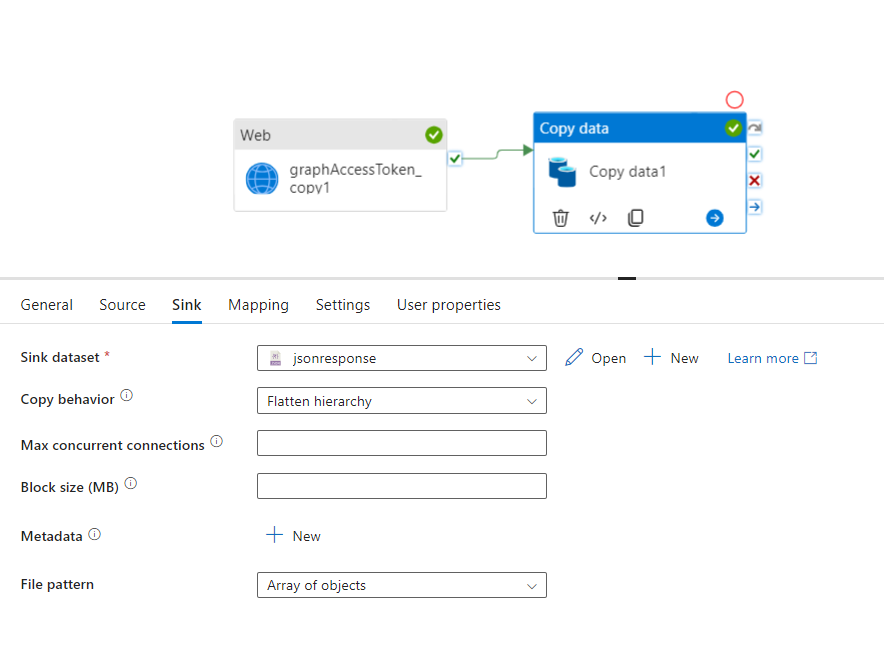
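To illustrate what a "direct" move could look like, here is a minimal sketch in Python (not PowerShell). It assumes each Graph `driveItem` carries the short-lived, pre-authenticated `@microsoft.graph.downloadUrl` property, and that Blob Storage can pull from that URL server-side via the `azure-storage-blob` method `BlobClient.upload_blob_from_url`. Whether SharePoint download URLs are accepted as a copy source for files of this size is untested here. The helper names and the blob naming scheme are hypothetical.

```python
def blob_name_for(item: dict) -> str:
    """Derive a blob name from a driveItem (hypothetical naming scheme)."""
    parent_path = item.get("parentReference", {}).get("path", "")
    # Graph parent paths look like "/drives/{drive-id}/root:/Folder/Sub";
    # keep only the folder part after "root:".
    _, found, tail = parent_path.partition("root:")
    folder = tail.strip("/") if found else ""
    return f"{folder}/{item['name']}" if folder else item["name"]


def copy_item_to_blob(item: dict, container_client) -> str:
    """Ask Blob Storage to fetch the file from its download URL (no local copy)."""
    name = blob_name_for(item)
    blob_client = container_client.get_blob_client(name)
    # Assumption: the storage service can reach the pre-authenticated URL
    # and the file is within the service's source-size limit.
    blob_client.upload_blob_from_url(
        item["@microsoft.graph.downloadUrl"], overwrite=True
    )
    return name
```

Here `container_client` would be an `azure.storage.blob.ContainerClient`, for example one created with `ContainerClient.from_connection_string(conn_str, container_name)`. Because the download URLs expire quickly, each item would probably need to be copied soon after its page is fetched.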

Given the size of the files, I've opted for Azure Data Factory (I am new to ADF) to migrate the SharePoint Online files to Azure Blob Storage. The first step is to make paginated MS Graph API calls with the Copy Activity to retrieve the more than 20,000 files in the Preservation Hold Library, and then store the resulting JSON file in Azure Blob Storage through Azure Data Factory. However, I've encountered several challenges with this approach:

- The paginated response is an array of objects, so I cannot retrieve all the files from a single object. I may need to iterate through each object, each of which contains 200 files. I'm open to suggestions.

- I'm uncertain about the optimal method for reading the output JSON file from Azure Blob Storage to extract the SharePoint download URLs.

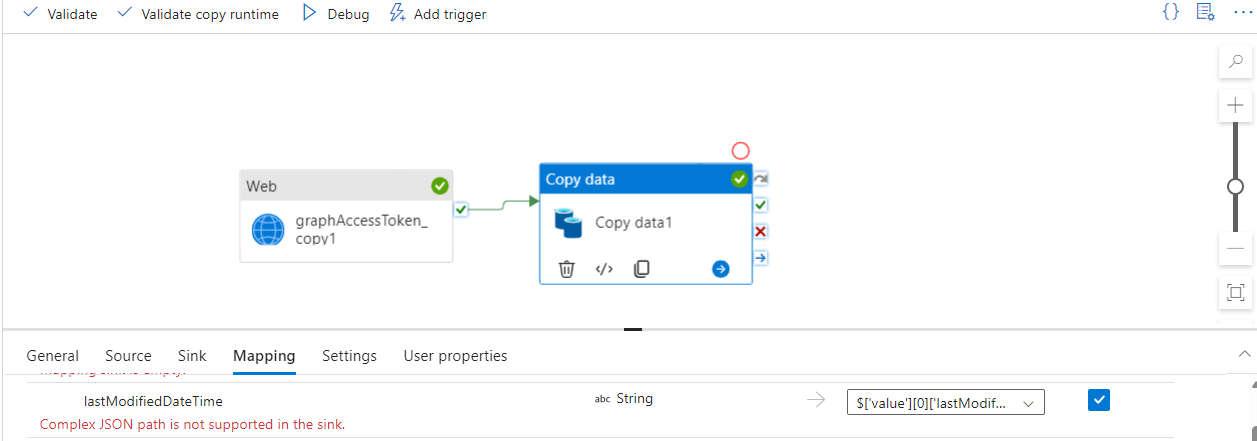

- As you can see in the attached screenshot, I am getting the error "Complex JSON path is not supported in the sink".

- I'm also seeking advice on whether I can simply append the JSON response (the "value": [] property) to an array variable instead of storing it in Azure Blob Storage and then reading it back from there.
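To make the pagination and extraction questions above concrete, here is a minimal Python sketch of the logic, independent of ADF. It assumes each page is an object with a `value` array of items and an optional `@odata.nextLink`, and that the stored JSON file is an array of such page objects. `fetch_page` is a hypothetical callable that performs the authenticated GET and returns the parsed page.

```python
import json
from typing import Callable, Dict, Iterator, List

DOWNLOAD_URL_KEY = "@microsoft.graph.downloadUrl"


def iter_drive_items(first_url: str, fetch_page: Callable[[str], Dict]) -> Iterator[Dict]:
    """Yield every item across pages by following @odata.nextLink."""
    url = first_url
    while url:
        page = fetch_page(url)
        yield from page.get("value", [])
        # The last page has no nextLink, which ends the loop.
        url = page.get("@odata.nextLink")


def download_urls_from_pages(pages: List[Dict]) -> List[str]:
    """Flatten an array of page objects into a list of download URLs."""
    return [
        item[DOWNLOAD_URL_KEY]
        for page in pages
        for item in page.get("value", [])
        if DOWNLOAD_URL_KEY in item  # folders carry no download URL
    ]


def download_urls_from_json(text: str) -> List[str]:
    """Parse the stored JSON text (an array of pages) and extract the URLs."""
    return download_urls_from_pages(json.loads(text))
```

The first function corresponds to appending each page's `value` array to one running collection. The other two correspond to reading the saved file back and iterating over each page object.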

Thank you very much in advance for your assistance.