I'm getting an error when using the Copy Activity in Azure Data Factory with the PolyBase copy option. The error message is:

> ...SQL DW Copy Command operation failed with error 'Unable to allocate 257707 KB for columnstore compression because it exceeds the remaining memory from total allocated for current resource class and DWU. Please rerun query at a higher resource class, and also consider increasing DWU...

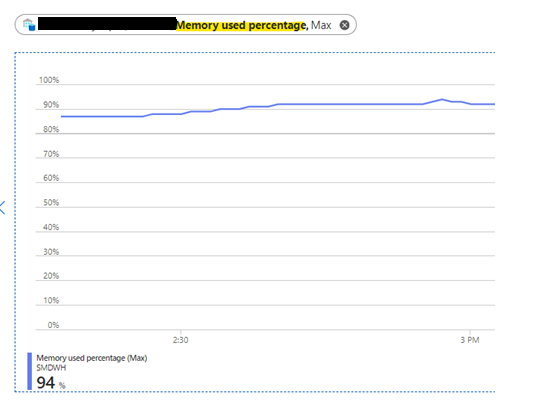

Memory usage on the Synapse SQL pool is close to 100%.

The Copy Activity copies data from an on-premises SQL source to Azure Synapse.

I've seen suggestions to increase the DWU, but I don't think that is the right fix here: not every performance issue can be solved by adding more compute.

When I suspend and then resume the Synapse SQL pool, memory usage drops from 94% to 5%. Why does this happen?

Is there a way to free up memory without suspending and resuming the pool, or a way to cap memory usage at 90%?

Any insights or suggestions would be greatly appreciated. Thank you in advance for your help.