@uuu zhu - Thanks for the question and for using the MS Q&A platform.

UPDATE:(30/04/2024: 8:30 PM IST)

When I ran this code on Apache Spark 3.3, I got the same error message as shown above:

The error message reads: AnalysisException: Cannot modify the value of a Spark config: spark.rpc.message.maxSize. See also 'https://spark.apache.org/docs/latest/sql-migration-guide.html#ddl-statements'. The linked migration guide explains that, starting with Apache Spark 3.0, setting a Spark core configuration at runtime is rejected by default, which is what raises this exception.

To resolve the issue, you can disable this check by setting spark.sql.legacy.setCommandRejectsSparkCoreConfs to false:

spark.conf.set("spark.sql.legacy.setCommandRejectsSparkCoreConfs", False)
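The fix can be wrapped in a small helper so the same notebook code runs on both Spark 2.4 and 3.x. This is a minimal sketch, assuming you pass in `spark.conf` and `spark.version` from an active `SparkSession`. The helper names `spark_major_version` and `set_core_conf` are hypothetical, not part of PySpark. The helper only disables the legacy check on Spark 3.0 and later, where that check exists.

```python
def spark_major_version(version: str) -> int:
    """Return the major version from strings like '3.3.1' or '3.3.1.5.2-92314920'."""
    return int(version.split(".")[0])


def set_core_conf(conf, version: str, key: str, value) -> str:
    """Set a Spark core config at runtime and return the value read back.

    Hypothetical helper: `conf` is any object with set/get methods,
    for example `spark.conf` from an active SparkSession.
    """
    if spark_major_version(version) >= 3:
        # Spark 3.0+ rejects setting Spark core configs through spark.conf.set
        # unless this legacy check is turned off first.
        conf.set("spark.sql.legacy.setCommandRejectsSparkCoreConfs", "false")
    conf.set(key, str(value))  # store the value as a string, as in the examples in this answer
    return conf.get(key)


# Usage in a notebook with an active `spark` session:
# set_core_conf(spark.conf, spark.version, "spark.rpc.message.maxSize", 512)
```

Disabling the check only allows the value to be stored in the session's runtime configuration. Because `spark.rpc.message.maxSize` is a core setting that is read when the application starts, changing it this way may not affect an application that is already running.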

On Apache Spark 2.4, the same code works without any issue:

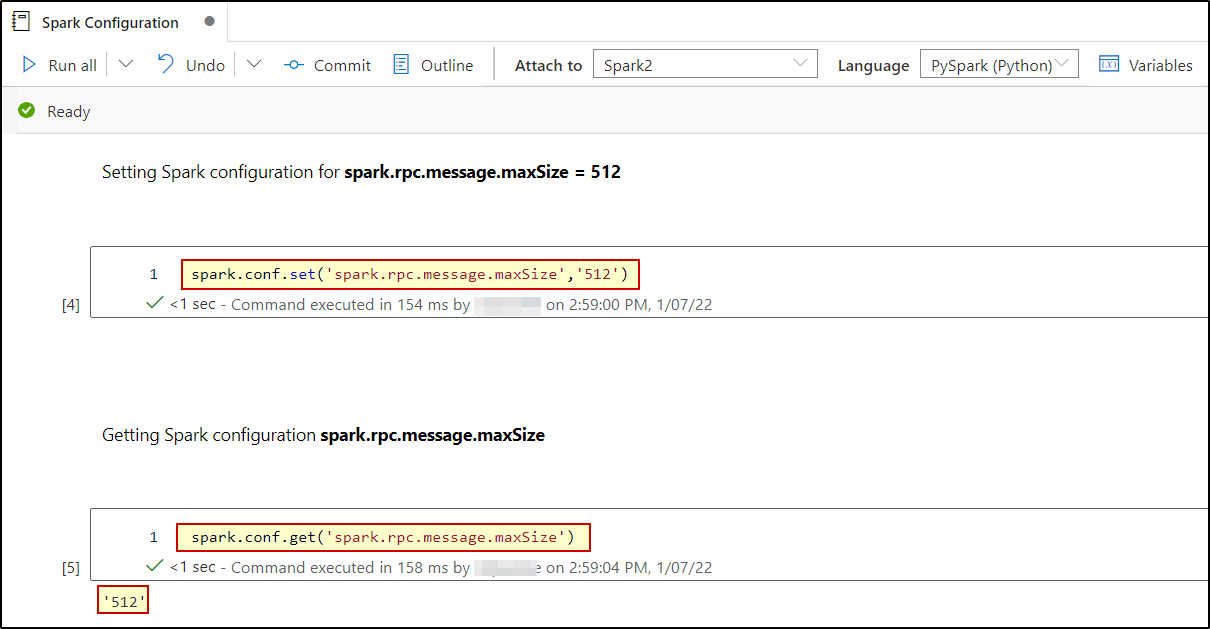

In a Synapse notebook, you can set and read the Spark configuration as shown below:

spark.conf.set('spark.rpc.message.maxSize','512') # To set the spark configuration

spark.conf.get('spark.rpc.message.maxSize') # To get the spark configuration
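The value given for `spark.rpc.message.maxSize` is interpreted in MiB (the default is 128), and Spark refuses values above 2047 MiB (`Int.MaxValue / 1024 / 1024`) when it reads the setting. The following minimal sketch validates the value before setting it and then reads it back, mirroring the set/get lines above. The function name `set_rpc_max_size` is hypothetical, `conf` is assumed to be `spark.conf` in the notebook, and the lower bound of 1 MiB is only a sanity check chosen for this sketch.

```python
# Upper limit Spark enforces for spark.rpc.message.maxSize, in MiB.
MAX_RPC_MESSAGE_SIZE_MB = 2147483647 // 1024 // 1024  # == 2047


def set_rpc_max_size(conf, size_mb: int) -> str:
    """Validate, set, and read back spark.rpc.message.maxSize (value in MiB).

    Hypothetical helper: `conf` is assumed to be `spark.conf` or any object
    exposing set(key, value) and get(key).
    """
    # The lower bound of 1 is an assumption of this sketch, not a Spark rule.
    if not 1 <= size_mb <= MAX_RPC_MESSAGE_SIZE_MB:
        raise ValueError(
            f"spark.rpc.message.maxSize must be between 1 and "
            f"{MAX_RPC_MESSAGE_SIZE_MB} MiB, got {size_mb}"
        )
    conf.set("spark.rpc.message.maxSize", str(size_mb))  # set the configuration
    return conf.get("spark.rpc.message.maxSize")  # get the configuration


# Usage in a Synapse notebook:
# set_rpc_max_size(spark.conf, 512)
```

On Spark 3.x this call still raises the AnalysisException unless spark.sql.legacy.setCommandRejectsSparkCoreConfs has been set to false first.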

Hope this helps. Do let us know if you have any further queries.

If this answers your query, please click "Accept Answer" and "Yes" for "Was this answer helpful?".