We have uploaded a requirements.txt file to the Packages section of our Apache Spark pool.

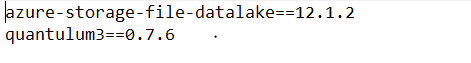

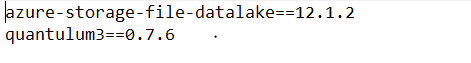

The requirements file looks something like this:

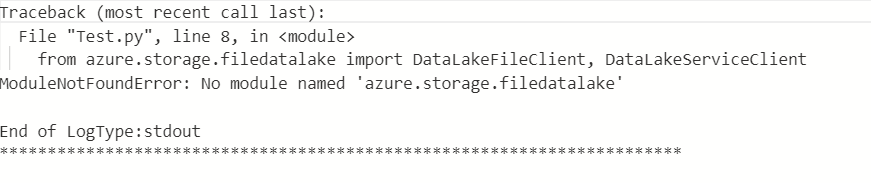

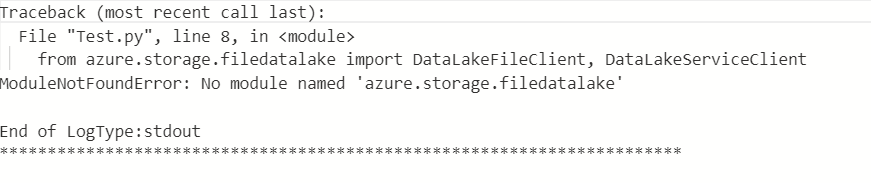

However, when we execute the Spark job on the pool, it fails with an error saying that it could not find the module:

To confirm whether the packages listed in requirements.txt are installed, I ran the following code:

```python
import pkg_resources

# Print every distribution visible to the current Python environment
for d in pkg_resources.working_set:
    print(d)
```

The output confirmed that the packages listed in the file are not installed, as they do not appear in `pkg_resources.working_set`.
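A more targeted check than printing the whole working set is to look up each name from requirements.txt directly. The sketch below uses the standard-library `importlib.metadata` (Python 3.8+) instead of the deprecated `pkg_resources`. The `REQUIREMENTS` string and the package names in it are placeholders, not the contents of our actual file, and the helper functions are hypothetical names. The parser is deliberately simple and only handles plain `name==version` / `name>=version` style lines.

```python
import re
from importlib.metadata import PackageNotFoundError, version

# Placeholder contents: paste the real lines from requirements.txt here.
REQUIREMENTS = """
# comments and blank lines are skipped
requests==2.31.0
numpy>=1.24
"""


def requirement_names(text):
    """Return the distribution names listed in requirements.txt-style text."""
    names = []
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments
        if not line or line.startswith("-"):  # skip blanks and pip options (-r, --index-url, ...)
            continue
        # The name ends at the first version specifier, extra, marker or space.
        match = re.match(r"[A-Za-z0-9][A-Za-z0-9._-]*", line)
        if match:
            names.append(match.group(0))
    return names


def check_installed(text):
    """Map each requirement name to its installed version, or None if missing."""
    status = {}
    for name in requirement_names(text):
        try:
            status[name] = version(name)
        except PackageNotFoundError:
            status[name] = None
    return status


if __name__ == "__main__":
    for name, installed in check_installed(REQUIREMENTS).items():
        print(f"{name}: {installed or 'NOT INSTALLED'}")
```

Running this in a notebook attached to the pool lists each requirement with either its installed version or `NOT INSTALLED`, which makes it easier to see whether none or only some of the packages were applied.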

Note that the exact same Spark job, on the exact same pool, with the exact same requirements.txt, ran perfectly fine a couple of weeks ago, but when I tried executing it yesterday I ran into these issues.

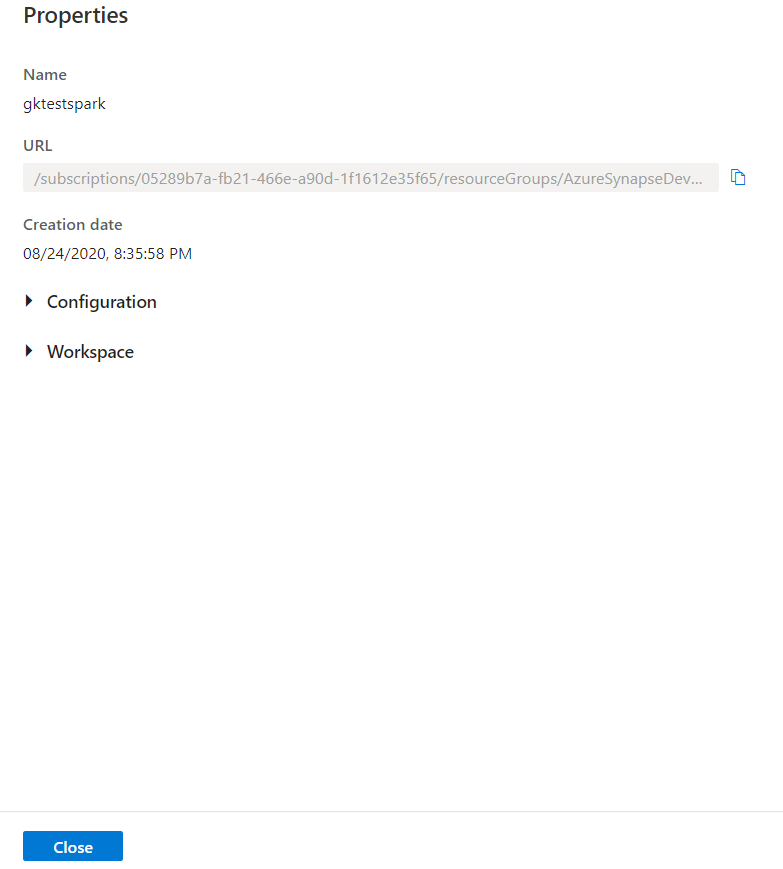

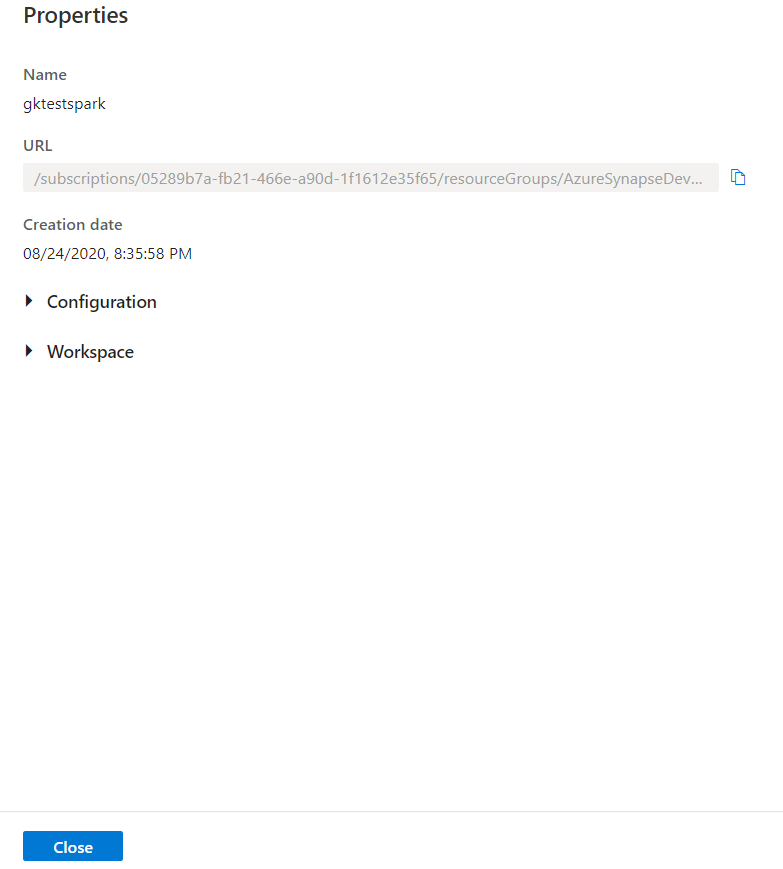

We also noticed that the Packages section, which used to appear under Manage -> Apache Spark pools -> Properties in Synapse Studio, is now missing. I'm not sure whether this is a symptom of the problem we are facing, but I thought it worth bringing to your notice.

We also waited about an hour after uploading the requirements.txt file before executing the code, but it still throws the error, and the packages do not show up in the list of installed packages either.

Please advise, as I can't figure out why a setup that was working suddenly stopped working. This is fairly urgent for us.

Thanks!