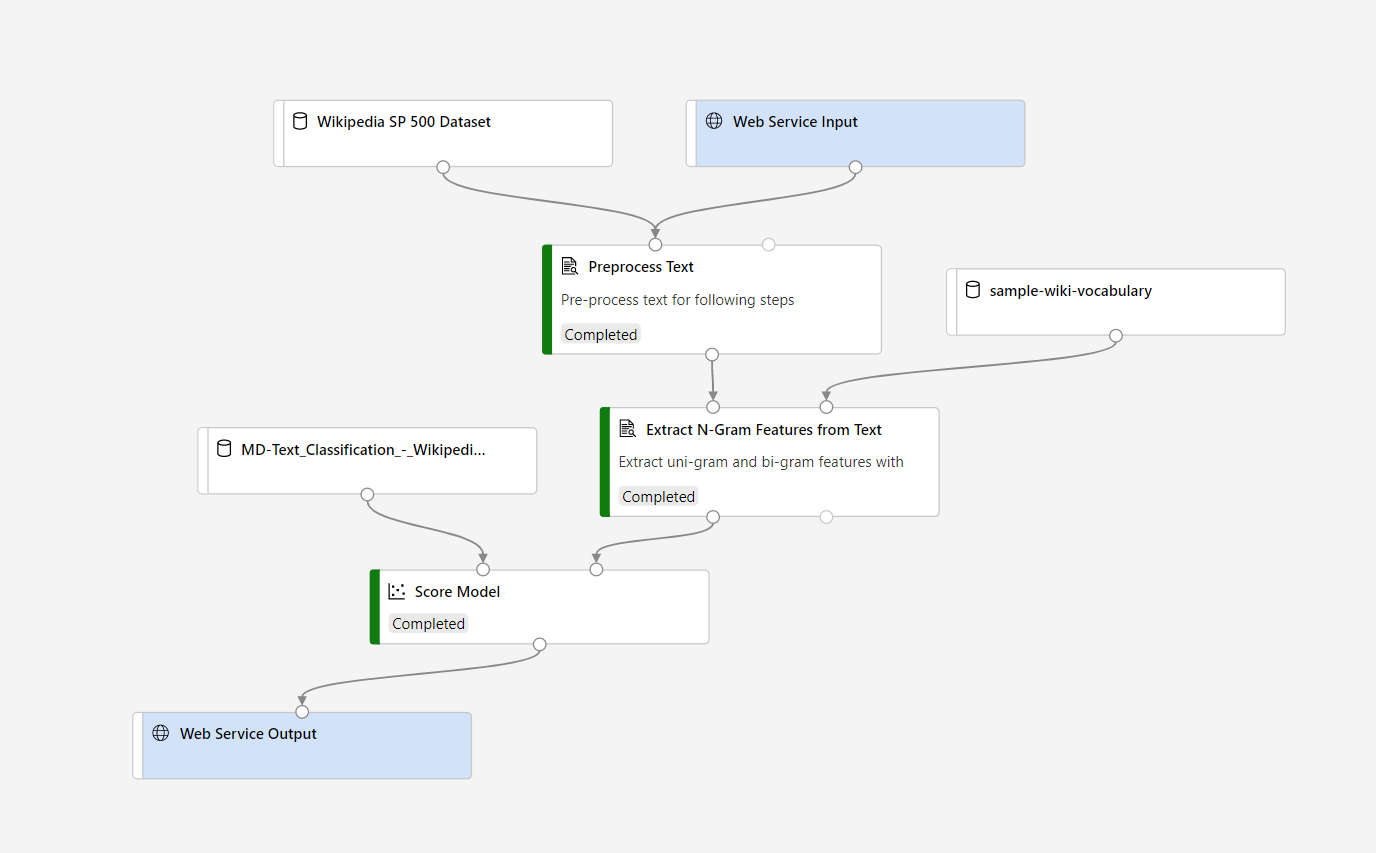

After you create a real-time inference pipeline, make the following additional modifications:

- Find the Result_vocabulary output dataset of the Extract N-Gram Features from Text module.

- Register the dataset with a name.

- Update the real-time inference pipeline as shown below:

We will improve the documentation accordingly. Thanks for reporting the issue!