Hi,

I have a Synapse workspace with data exfiltration protection (DEP) enabled. Because PyPI libraries cannot be installed directly on the Spark pool in that configuration, I am trying to install azure-mgmt-kusto and azure-kusto-data on the pool from wheel files. I tried the two methods below, and each one failed with the error shown.

- Create Wheel file for that package: pip wheel --wheel-dir=./ azure-kusto-data

- Locate the wheel file on the disk

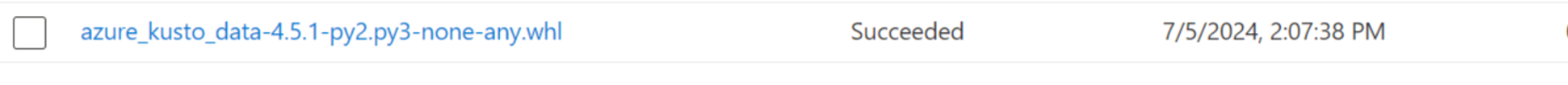

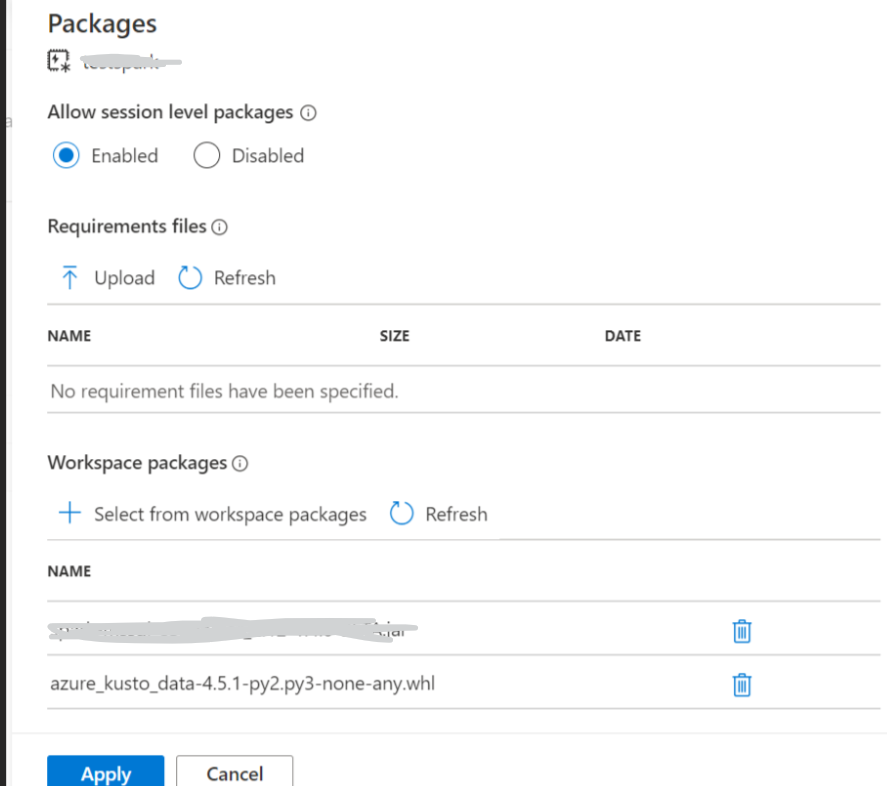
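For reference, the two steps above can also be driven from Python. This is a minimal sketch, not the exact procedure used here: `build_wheels`, `parse_wheel_name`, and `list_wheels` are hypothetical helper names, and the build step assumes a machine that can reach PyPI. By default `pip wheel` also writes wheels for the requirement's dependencies into the target directory, so listing the directory shows every file that would need to be uploaded, along with its platform tag.

```python
import subprocess
import sys
from pathlib import Path


def build_wheels(requirement, wheel_dir):
    """Run `pip wheel` so the requirement and its dependencies land in wheel_dir.

    Needs network access to PyPI, so call it outside the DEP-enabled workspace.
    """
    subprocess.run(
        [sys.executable, "-m", "pip", "wheel", "--wheel-dir", str(wheel_dir), requirement],
        check=True,
    )


def parse_wheel_name(filename):
    """Split a wheel file name into (distribution, version, platform tag).

    Wheel names follow name-version[-build]-python-abi-platform.whl.
    """
    parts = Path(filename).stem.split("-")
    return parts[0], parts[1], parts[-1]


def list_wheels(wheel_dir):
    """Return the parsed name of every .whl file found in wheel_dir."""
    return [parse_wheel_name(p.name) for p in sorted(Path(wheel_dir).glob("*.whl"))]


# Demo on the file name that appears in this post (no network needed).
print(parse_wheel_name("azure_kusto_data-4.5.1-py2.py3-none-any.whl"))
```

On a machine with PyPI access, `build_wheels("azure-kusto-data", "./wheels")` followed by `list_wheels("./wheels")` would show which dependency wheels were produced. A platform tag other than `any` means the wheel is platform-specific and may not match the pool's Linux runtime.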

- Workspace Packages

3.1 Upload Wheel File under workspace packages

3.2 Install Wheel Package on spark pool

3.3 Error

ProxyLivyApiAsyncError

LibraryManagement - Spark Job for xxxxxxxxxxxxxx in workspace xxxxxxxxxxxxxx in subscription xxxxxxxxxxxxxx failed with status:

{"id":9,"appId":"application_xxxxxxxxxxxxxx_0001","appInfo":{"driverLogUrl":"https://web.azuresynapse.net/sparkui/xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx/api/v1/workspaces/xxxxxxxxxxxxxx/sparkpools/systemreservedpool-librarymanagement/sessions/9/applications/application_xxxxxxxxxxxxxx_0001/driverlog/stderr","sparkUiUrl":"https://web.azuresynapse.net/sparkui/xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx/workspaces/xxxxxxxxxxxxxx/sparkpools/systemreservedpool-librarymanagement/sessions/9/applications/application_xxxxxxxxxxxxxx_0001","isSessionTimedOut":null,"isStreamingQueryExists":"false","impulseErrorCode":"Spark_Ambiguous_NonJvmUserApp_ExitWithStatus1","impulseTsg":null,"impulseClassification":"Ambiguous"},"state":"dead","log":["ERROR: No matching distribution found for ijson~=3.1","","","CondaEnvException: Pip failed","","24/07/05 21:50:15 ERROR b"Warning: you have pip-installed dependencies in your environment file, but you do not list pip itself as one of your conda dependencies. Conda may not use the correct pip to install your packages, and they may end up in the wrong place. Please add an explicit pip dependency. I'm adding one for you, but still nagging you.\nCollecting package metadata (repodata.json): ...working... done\nSolving environment: ...working... done\nPreparing transaction: ...working... done\nVerifying transaction: ...working... done\nExecuting transaction: ...working... done\nInstalling pip dependencies: ...working... 
Ran pip subprocess with arguments:\n['/home/trusted-service-user/cluster-env/clonedenv/bin/python', '-m', 'pip', 'install', '-U', '-r', '/usr/lib/library-manager/bin/lmjob/xxxxxxxxxxxxxx/condaenv.u3u2igfc.requirements.txt']\nPip subprocess output:\nProcessing ./wheels/azure_kusto_data-4.5.1-py2.py3-none-any.whl (from -r /usr/lib/library-manager/bin/lmjob/xxxxxxxxxxxxxx/condaenv.u3u2igfc.requirements.txt (line 1))\nRequirement already satisfied: python-dateutil>=2.8.0 in /home/trusted-service-user/cluster-env/clonedenv/lib/python3.10/site-packages (from azure-kusto-data==4.5.1->-r /usr/lib/library-manager/bin/lmjob/xxxxxxxxxxxxxx/condaenv.u3u2igfc.requirements.txt (line 1)) (2.9.0)\nRequirement already satisfied: requests>=2.13.0 in /home/trusted-service-user/cluster-env/clonedenv/lib/python3.10/site-packages (from azure-kusto-data==4.5.1->-r /usr/lib/library-manager/bin/lmjob/xxxxxxxxxxxxxx/condaenv.u3u2igfc.requirements.txt (line 1)) (2.31.0)\nRequirement already satisfied: azure-identity<2,>=1.5.0 in /home/trusted-service-user/cluster-env/clonedenv/lib/python3.10/site-packages (from azure-kusto-data==4.5.1->-r /usr/lib/library-manager/bin/lmjob/xxxxxxxxxxxxxx/condaenv.u3u2igfc.requirements.txt (line 1)) (1.15.0)\nRequirement already satisfied: msal<2,>=1.9.0 in /home/trusted-service-user/cluster-env/clonedenv/lib/python3.10/site-packages (from azure-kusto-data==4.5.1->-r /usr/lib/library-manager/bin/lmjob/xxxxxxxxxxxxxx/condaenv.u3u2igfc.requirements.txt (line 1)) (1.27.0)\nINFO: pip is looking at multiple versions of azure-kusto-data to determine which version is compatible with other requirements. This could take a while.\n\nfailed\n"","24/07/05 21:50:15 INFO Cleanup following folders and files from staging directory:","24/07/05 21:50:23 INFO Staging directory cleaned up successfully","24/07/05 21:50:23 INFO Waiting for parallel executions","24/07/05 21:50:23 INFO Closing down clientserver connection"],"registeredSources":null}

Note: there is no requirements.txt file configured on the Spark pool.
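The `log` array in the error above contains pip's own failure line, which names the requirement it could not resolve. A small sketch for pulling such lines out of a long Livy error payload follows; `missing_requirements` is a hypothetical helper, and the excerpt is copied from the log in this post. One possible reading, not confirmed here, is that the wheel for this dependency was not uploaded alongside azure_kusto_data and pip could not fetch it because the pool has no PyPI access.

```python
import re

# pip's message for a requirement it cannot find, as it appears in the log above.
MISSING = re.compile(r"No matching distribution found for (\S+)")


def missing_requirements(log_lines):
    """Return the requirement specifiers pip reported as unresolvable, in order."""
    found = []
    for line in log_lines:
        for spec in MISSING.findall(line):
            if spec not in found:
                found.append(spec)
    return found


# Excerpt of the "log" array from the error in this post.
log_excerpt = [
    "ERROR: No matching distribution found for ijson~=3.1",
    "",
    "CondaEnvException: Pip failed",
]

print(missing_requirements(log_excerpt))
```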

- Use Storage Account

4.1 Uploaded Wheel File on storage account

4.2 Code:

spark.conf.set( "spark.jars.packages", "wasbs://******@tests.blob.core.windows.net/azure_kusto_data-4.5.1-py2.py3-none-any.whl" )

Error:

AnalysisException Traceback (most recent call last)

Cell In[5], line 1

----> 1 spark.conf.set(

2 "spark.jars.packages",

3 "wasbs://******@tests.blob.core.windows.net/azure_kusto_data-4.5.1-py2.py3-none-any.whl"

4 )

File /opt/spark/python/lib/pyspark.zip/pyspark/sql/conf.py:47, in RuntimeConfig.set(self, key, value)

40 @since(2.0)

41 def set(self, key: str, value: Union[str, int, bool]) -> None:

42 """Sets the given Spark runtime configuration property.

43

44 .. versionchanged:: 3.4.0

45 Supports Spark Connect.

46 """

---> 47 self._jconf.set(key, value)

File ~/cluster-env/env/lib/python3.10/site-packages/py4j/java_gateway.py:1322, in JavaMember.call(self, *args)

1316 command = proto.CALL_COMMAND_NAME +\

1317 self.command_header +\

1318 args_command +\

1319 proto.END_COMMAND_PART

1321 answer = self.gateway_client.send_command(command)

-> 1322 return_value = get_return_value(

1323 answer, self.gateway_client, self.target_id, self.name)

1325 for temp_arg in temp_args:

1326 if hasattr(temp_arg, "_detach"):

File /opt/spark/python/lib/pyspark.zip/pyspark/errors/exceptions/captured.py:175, in capture_sql_exception.<locals>.deco(*a, **kw)

171 converted = convert_exception(e.java_exception)

172 if not isinstance(converted, UnknownException):

173 # Hide where the exception came from that shows a non-Pythonic

174 # JVM exception message.

--> 175 raise converted from None

176 else:

177 raise

AnalysisException: [CANNOT_MODIFY_CONFIG] Cannot modify the value of the Spark config: "spark.jars.packages".

See also 'https://spark.apache.org/docs/latest/sql-migration-guide.html#ddl-statements'.
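The CANNOT_MODIFY_CONFIG error indicates that the running session refuses changes to `spark.jars.packages`. In stock Apache Spark that setting takes Maven coordinates for JARs, so it is probably not a route for Python wheels in any case. PySpark's `RuntimeConfig` exposes `isModifiable(key)`, which can be checked before calling `set`. The sketch below wraps that check; `set_if_modifiable` and `StubConf` are hypothetical names, and the stub only stands in for `spark.conf` so the example runs outside a Spark session.

```python
def set_if_modifiable(conf, key, value):
    """Set `key` on a RuntimeConfig-like object only if it reports the key as modifiable."""
    if conf.isModifiable(key):
        conf.set(key, value)
        return True
    return False


class StubConf:
    """Hypothetical stand-in for spark.conf, used only to make this sketch runnable."""

    def __init__(self, modifiable_keys):
        self._modifiable = set(modifiable_keys)
        self.values = {}

    def isModifiable(self, key):
        return key in self._modifiable

    def set(self, key, value):
        self.values[key] = value


# Assumption for the demo: only this SQL setting is modifiable at runtime.
conf = StubConf({"spark.sql.shuffle.partitions"})

print(set_if_modifiable(conf, "spark.sql.shuffle.partitions", "64"))
print(set_if_modifiable(conf, "spark.jars.packages", "<wheel-url>"))
```

In a notebook, passing `spark.conf` instead of the stub would skip the assignment rather than raise when the key is not modifiable.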

Thanks,

Abhiram