Hello Anshal,

Greetings! Welcome to Microsoft Q&A Platform.

We might not store the whole 150 TB of data at once, but if we do, and use a single data lake storage account, would that cause performance issues?

Storing and managing 150TB of data in a data lake can be challenging, but with the right strategies, you can optimize performance and manage costs effectively. Storing large amounts of data in Azure Blob Storage can be cost-effective, as you only pay for the storage you use.

Azure Blob Storage is Microsoft's object storage solution for the cloud. Blob Storage is optimized for storing massive amounts of unstructured data. Unstructured data is data that doesn't adhere to a particular data model or definition, such as text or binary data. Blob Storage is designed for:

- Serving images or documents directly to a browser.

- Storing files for distributed access.

- Streaming video and audio.

- Writing to log files.

- Storing data for backup and restore, disaster recovery, and archiving.

- Storing data for analysis by an on-premises or Azure-hosted service.

Users or client applications can access objects in Blob Storage via HTTP/HTTPS, from anywhere in the world. Objects in Blob Storage are accessible via the Azure Storage REST API, Azure PowerShell, Azure CLI, or an Azure Storage client library. Client libraries are available for different languages.

Blob Storage is a good choice when:

- You want your application to support streaming and random-access scenarios.

- You want to be able to access application data from anywhere.

- You want to build an enterprise data lake on Azure and perform big data analytics.
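
As a rough illustration of the HTTP/HTTPS access mentioned above, the sketch below builds the URL of a blob on the standard public endpoint. The account, container, and blob names are hypothetical, and the URL only resolves if the request is authorized (or the container allows anonymous access). Accounts that use Azure DNS zone endpoints have a different host name.

```python
from urllib.parse import quote


def blob_url(account: str, container: str, blob_name: str) -> str:
    """Build the HTTPS URL of a blob on the standard public endpoint."""
    # Percent-encode the blob name but keep "/" so virtual folders stay readable.
    encoded_name = quote(blob_name, safe="/")
    return f"https://{account}.blob.core.windows.net/{container}/{encoded_name}"


# Hypothetical names, for illustration only.
print(blob_url("examplelake", "raw", "2024/01/sensor data.csv"))
```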

Blob Storage supports many features compared to other services: https://learn.microsoft.com/en-us/azure/storage/blobs/storage-feature-support-in-storage-accounts

Security recommendations for Blob storage

What are the alternative strategies?

As for the best practices for storing large amounts of data in Azure Blob Storage, it is generally recommended to store data in multiple blobs rather than one large blob, as this can improve the performance of your application. It is also recommended to use the most cost-effective storage tier for your data, based on the access patterns of your application.

You can keep all your data under one storage account, where you can create multiple containers for your blobs. If you need separate test, dev, and production environments, you can use multiple accounts. It depends entirely on your requirements.

You can create up to 250 storage accounts per region with standard endpoints in a given subscription. With Azure DNS zone endpoints (preview), you can create up to 5000 storage accounts per region in a given subscription. For more information refer here

What is the cost impact, and what steps can be taken to reduce costs?

The cost of storing data in Azure Blob Storage depends on the type of storage tier you choose. There are three storage tiers available: Hot, Cool, and Archive.

The Hot tier is intended for storing data that is accessed frequently and costs more per GB than the Cool and Archive tiers. The Cool tier is intended for storing data that is accessed less frequently and costs less per GB than the Hot tier. The Archive tier is intended for storing data that is rarely accessed and costs less per GB than the Hot and Cool tiers, but retrieval of data from the Archive tier may take longer.
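
To make the trade-off concrete, here is a minimal sketch that compares storage-only cost for 150 TB kept entirely in Hot versus split across tiers. The per-GB prices and the 10/30/60 split are placeholder assumptions, not Azure's actual rates; real prices depend on region and redundancy. The sketch also ignores transaction, retrieval, and early-deletion charges, which matter for Cool and Archive.

```python
# Hypothetical per-GB monthly prices, for illustration only. Take real values
# for your region and redundancy option from the Azure pricing calculator.
PRICE_PER_GB_MONTH = {"hot": 0.020, "cool": 0.010, "archive": 0.002}


def monthly_storage_cost(size_gb_by_tier: dict[str, float]) -> float:
    """Sum the storage-only monthly cost for data split across access tiers."""
    return sum(PRICE_PER_GB_MONTH[tier] * gb for tier, gb in size_gb_by_tier.items())


TOTAL_GB = 150 * 1000  # 150 TB expressed in GB (decimal units)

all_hot = monthly_storage_cost({"hot": TOTAL_GB})
# Assumed split: 10% hot, 30% cool, 60% archive.
mixed = monthly_storage_cost(
    {"hot": 0.1 * TOTAL_GB, "cool": 0.3 * TOTAL_GB, "archive": 0.6 * TOTAL_GB}
)
print(f"all hot: {all_hot:.2f}  mixed tiers: {mixed:.2f}")
```

Replacing the placeholder prices and the split with your own figures gives a first estimate before you refine it in the pricing calculator.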

This article provides detailed information on Azure billing. If you need detailed pricing information for your region and requirements, please reach out to the Billing and Subscription team, who are best placed to provide insight and guidance on this scenario. This support is free: https://azure.microsoft.com/en-us/support/options/

Pricing calculator (provides a cost estimate): https://azure.microsoft.com/en-gb/pricing/calculator/

Does the tier (standard or premium) impact performance, and to what extent?

Azure Blob Storage supports tiered data management with hot, cool, and archive access tiers. You can set lifecycle management policies to automatically move blobs to cooler tiers based on their age or usage patterns.
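
As a sketch of what such a lifecycle management policy can look like, the snippet below assembles a policy document and prints it as JSON. The rule name, the `rawdata/logs/` prefix (a hypothetical container name plus path), and the 30/180-day thresholds are example assumptions, not recommendations.

```python
import json

# Hypothetical rule: the name, prefix, and day thresholds are example values.
lifecycle_policy = {
    "rules": [
        {
            "enabled": True,
            "name": "age-out-logs",
            "type": "Lifecycle",
            "definition": {
                # Apply only to block blobs whose path starts with this prefix.
                "filters": {
                    "blobTypes": ["blockBlob"],
                    "prefixMatch": ["rawdata/logs/"],
                },
                "actions": {
                    "baseBlob": {
                        # Move to Cool 30 days after the last modification.
                        "tierToCool": {"daysAfterModificationGreaterThan": 30},
                        # Move to Archive 180 days after the last modification.
                        "tierToArchive": {"daysAfterModificationGreaterThan": 180},
                    }
                },
            },
        }
    ]
}

policy_json = json.dumps(lifecycle_policy, indent=2)
print(policy_json)
```

The resulting JSON can then be supplied to the storage account's lifecycle management settings, for example through the Azure portal or the Azure CLI.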

Adding to the above information: with standard-tier Azure Storage accounts, there are no limits on the number of blobs, files, shares, queues, entities, or messages that can be stored within the account's capacity, but there are some important considerations regarding data uploading and downloading.

You can upload and download data within the available capacity without any specific restrictions. A standard general-purpose v2 account has a default capacity of five petabytes. If you need more capacity, you can request an increase through Azure support.

Azure storage capacity limits are set at the account level, rather than according to access tier. You can choose to maximize your capacity usage in one tier, or to distribute capacity across two or more tiers.

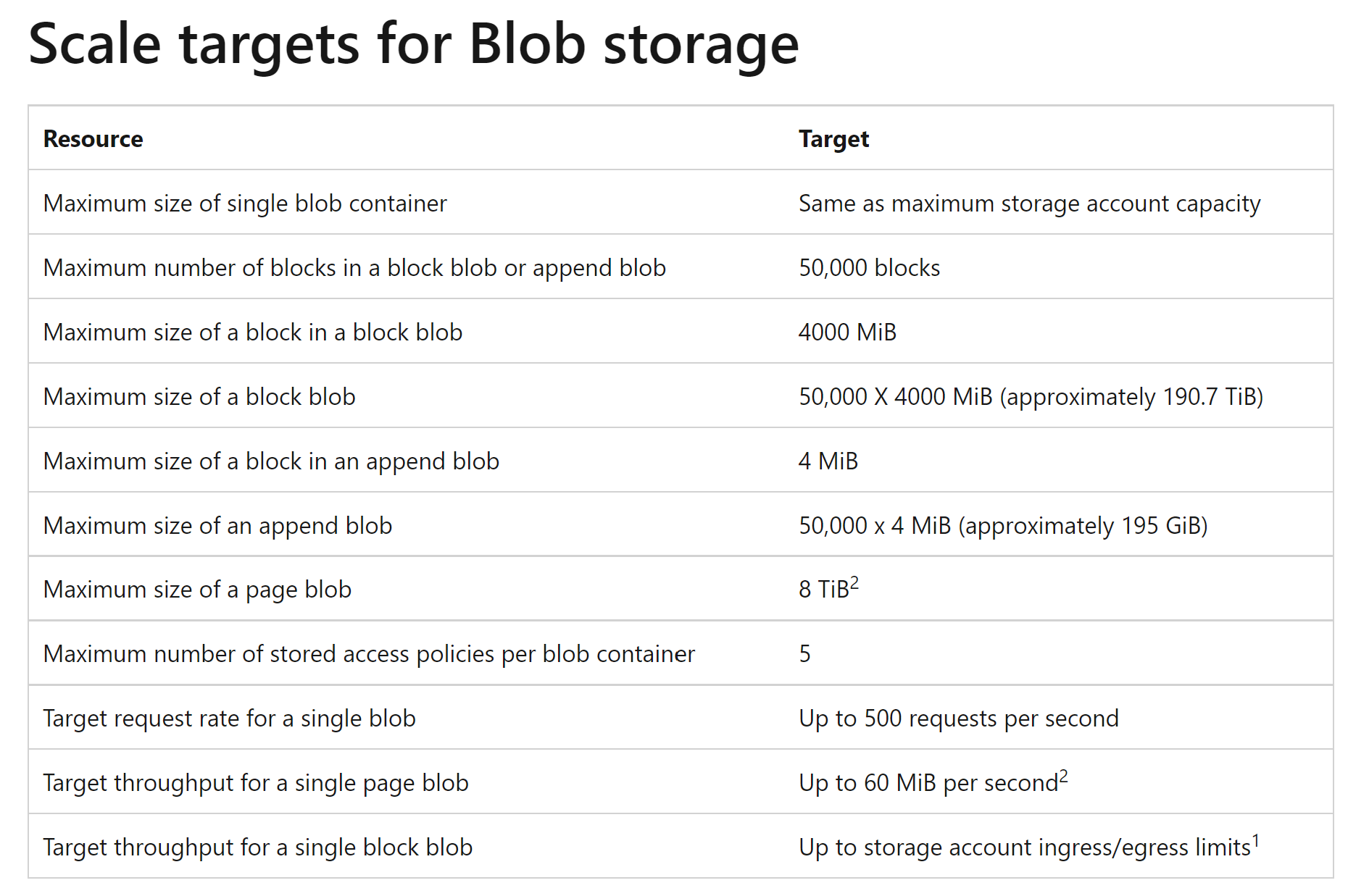

Block blobs are optimized for uploading large amounts of data efficiently. Block blobs are composed of blocks, each of which is identified by a block ID. A block blob can include up to 50,000 blocks. Each block in a block blob can be a different size, up to the maximum size permitted for the service version in use.
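
The 50,000-block limit translates into simple arithmetic when planning large uploads. The sketch below computes the smallest uniform block size needed to fit a blob of a given size, and the largest blob possible for a given per-block limit. The 4000 MiB per-block figure used in the example is an assumption based on what recent service versions document; check the limit for the service version you use.

```python
MAX_BLOCKS_PER_BLOB = 50_000  # block count limit stated above
MIB = 1024 ** 2
TIB = 1024 ** 4


def min_block_size_bytes(blob_size_bytes: int) -> int:
    """Smallest uniform block size that fits the blob into 50,000 blocks."""
    # Integer ceiling division avoids floating-point rounding on large sizes.
    return (blob_size_bytes + MAX_BLOCKS_PER_BLOB - 1) // MAX_BLOCKS_PER_BLOB


def max_blob_size_bytes(max_block_size_bytes: int) -> int:
    """Largest block blob possible for a given per-block size limit."""
    return MAX_BLOCKS_PER_BLOB * max_block_size_bytes


# Block size (in MiB) needed to upload a single 1 TiB blob.
print(min_block_size_bytes(1 * TIB) / MIB)

# Maximum blob size (in TiB), assuming a 4000 MiB per-block limit.
print(max_blob_size_bytes(4000 * MIB) / TIB)
```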

Note: Setting the access tier is only allowed on block blobs. It is not supported for append blobs or page blobs.

For more details, refer to https://learn.microsoft.com/en-us/azure/storage/common/storage-account-overview#scalability-targets-for-standard-storage-accounts and https://learn.microsoft.com/en-us/azure/storage/blobs/access-tiers-overview

Azure doesn’t directly provide local storage/cache specifications. However, Azure File Sync can be used to centralize file shares in Azure Files while maintaining the compatibility of an on-premises file server with caching capabilities. You can enable premium tier on a general-purpose version 2 storage account by enabling large file shares. However, there are some limitations to using large file shares, such as a maximum file size of 4 TiB and a maximum capacity of 100 TiB per share. Additionally, enabling large file shares may require some downtime and could potentially affect permissions.

Hope this answer helps! Please let us know if you have any further queries. I’m happy to assist you further.

Please "Accept the answer" and "up-vote" wherever the information provided helps you; this can be beneficial to other community members.