Hello @Sabyasachi Dash and welcome to Microsoft Q&A.

There are a number of things that can be done to increase throughput. In your case, I suspect it is caused by a low degree of parallelism (1) and/or not using partitioned copy.

I am not overly familiar with the AWS ecosystem, but it sounds like you have an established connection, and so are using Azure Integration Runtime, rather than a Self-Hosted Integration Runtime. Please correct me if this is not the case.

If you are using Self-Hosted Integration Runtime, then you could try adding more nodes and/or scaling up the nodes, in addition to the following recommendations.

There are optimizations which are specific to Oracle, and there are generic optimizations. There is some interplay between them.

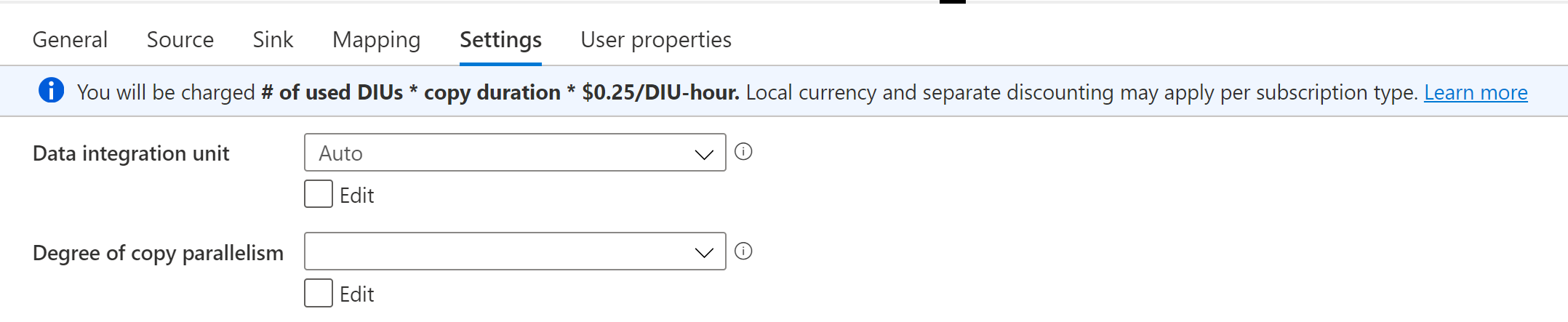

First, the generic optimizations. In the Copy Activity > Settings you can find Data Integration Units and Degree of copy parallelism. The Data Integration Units are a measure of how much "power" is assigned to the task. The Degree of copy parallelism is how many processes are available to read/write simultaneously. More is better.

In the Copy Data > Sink (for both Azure Data Lake Gen 1 and Gen 2), there is Max Concurrent connections. Max concurrent connections is how many simultaneous writers you will allow. More is better, but the default value is 'no limit'.

Depending upon the type of dataset, more options may be available (i.e. delimited text vs binary).

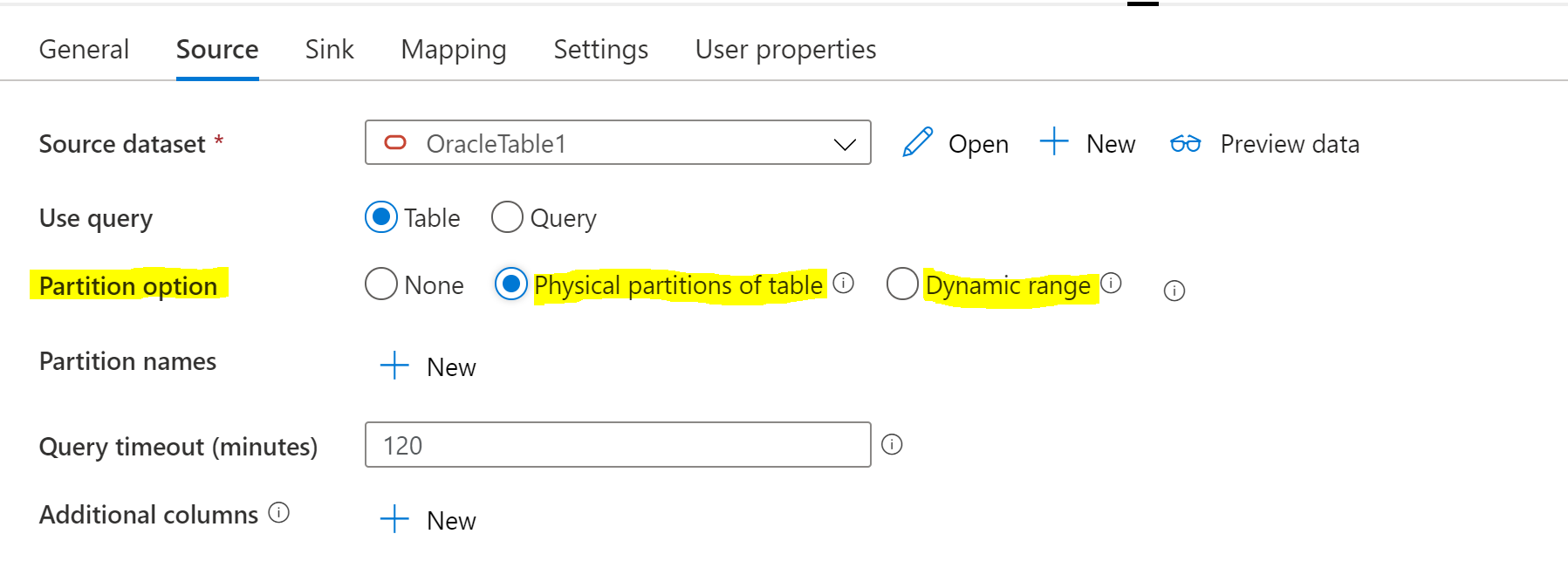

For the optimizations specific to Oracle, there is Partition Options. If you are currently using None, this would explain the low throughput. Whether you should select Physical partitions of table or Dynamic range depends on the particulars of your situation. Please see the article Parallel Copy from Oracle for details on determining which is right for you. The article also explains how the aforementioned Degree of parallelism interacts with the Partition options.

Please let me know if this helps you at all. Thank you for your patience.

Martin