Hello @Kunal Kumar Sinha and thank you for bringing this to our attention.

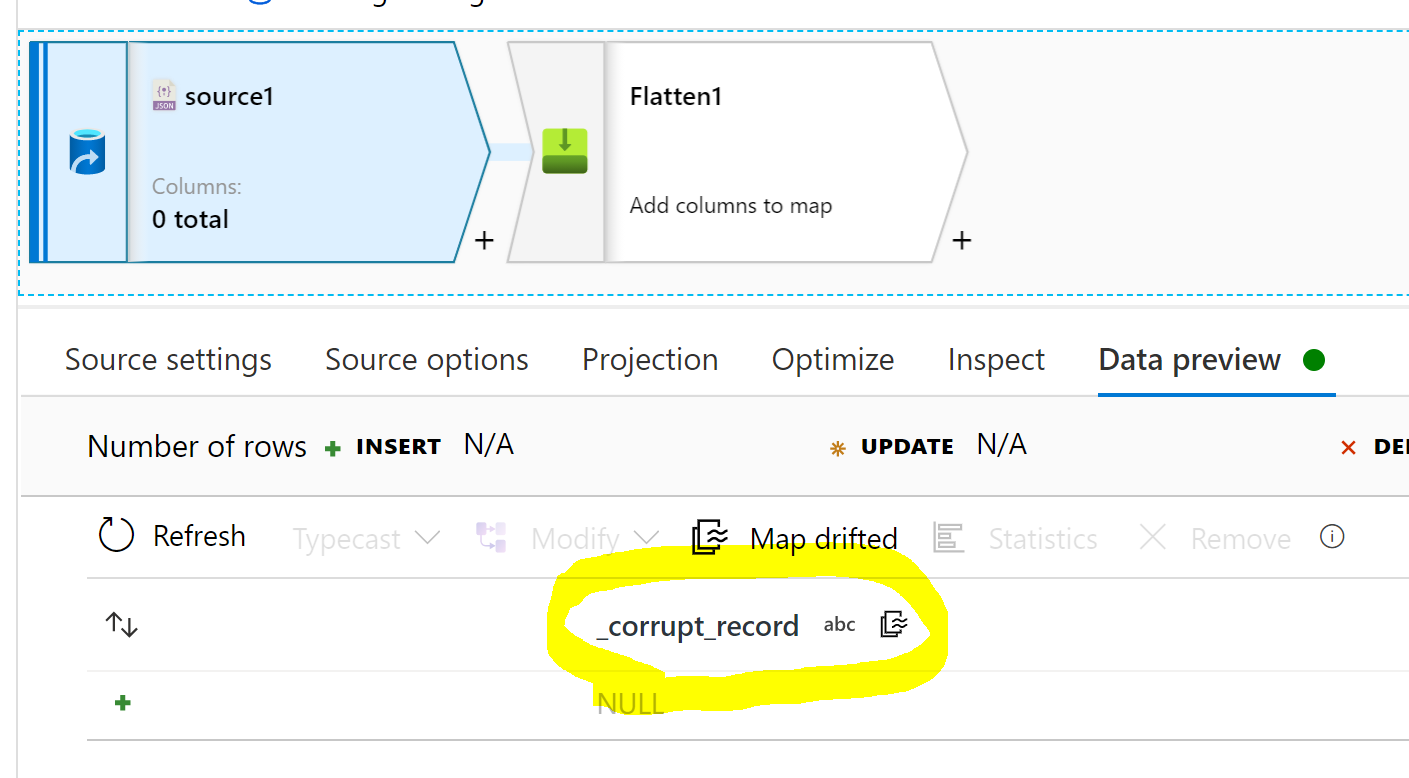

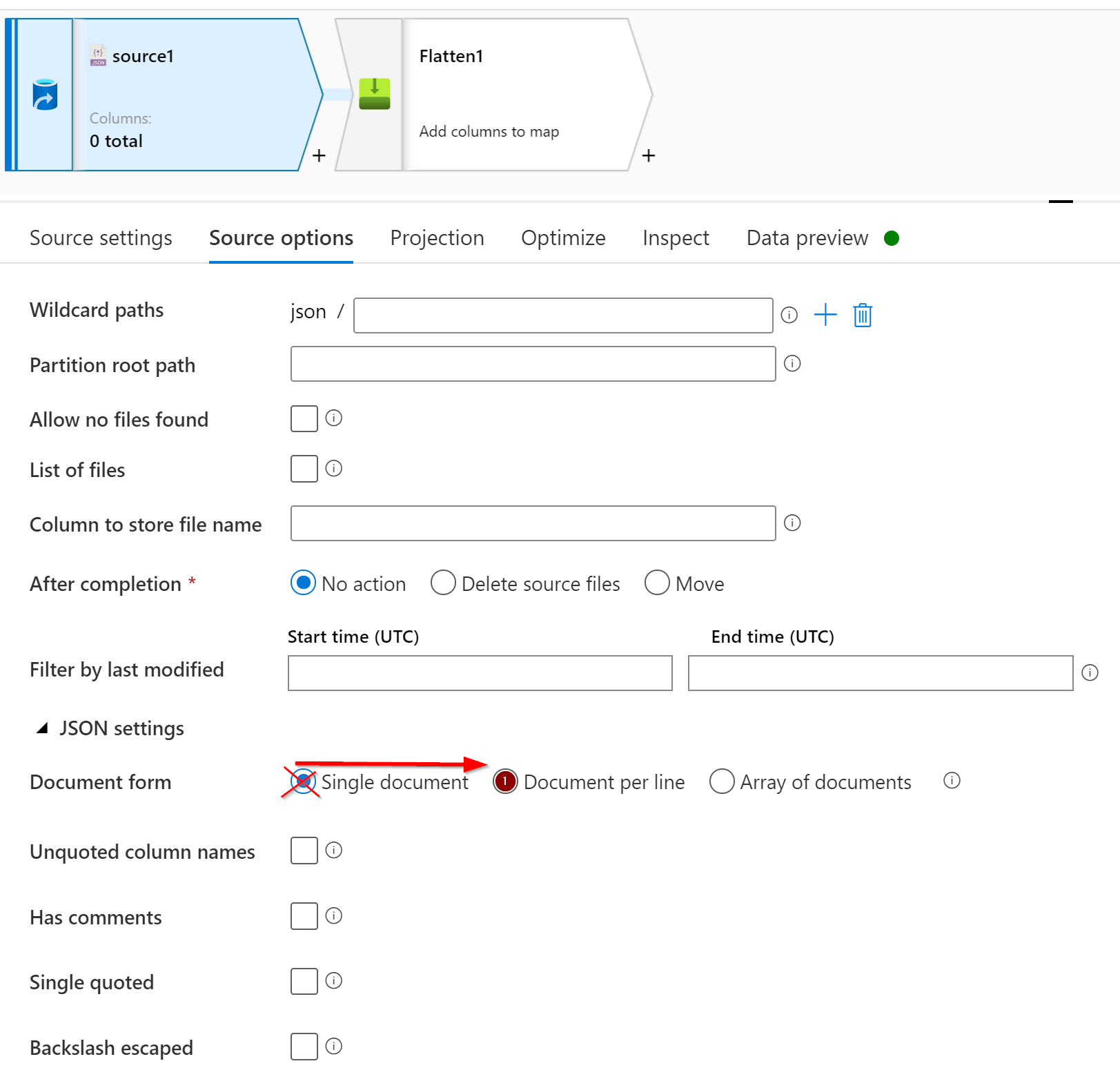

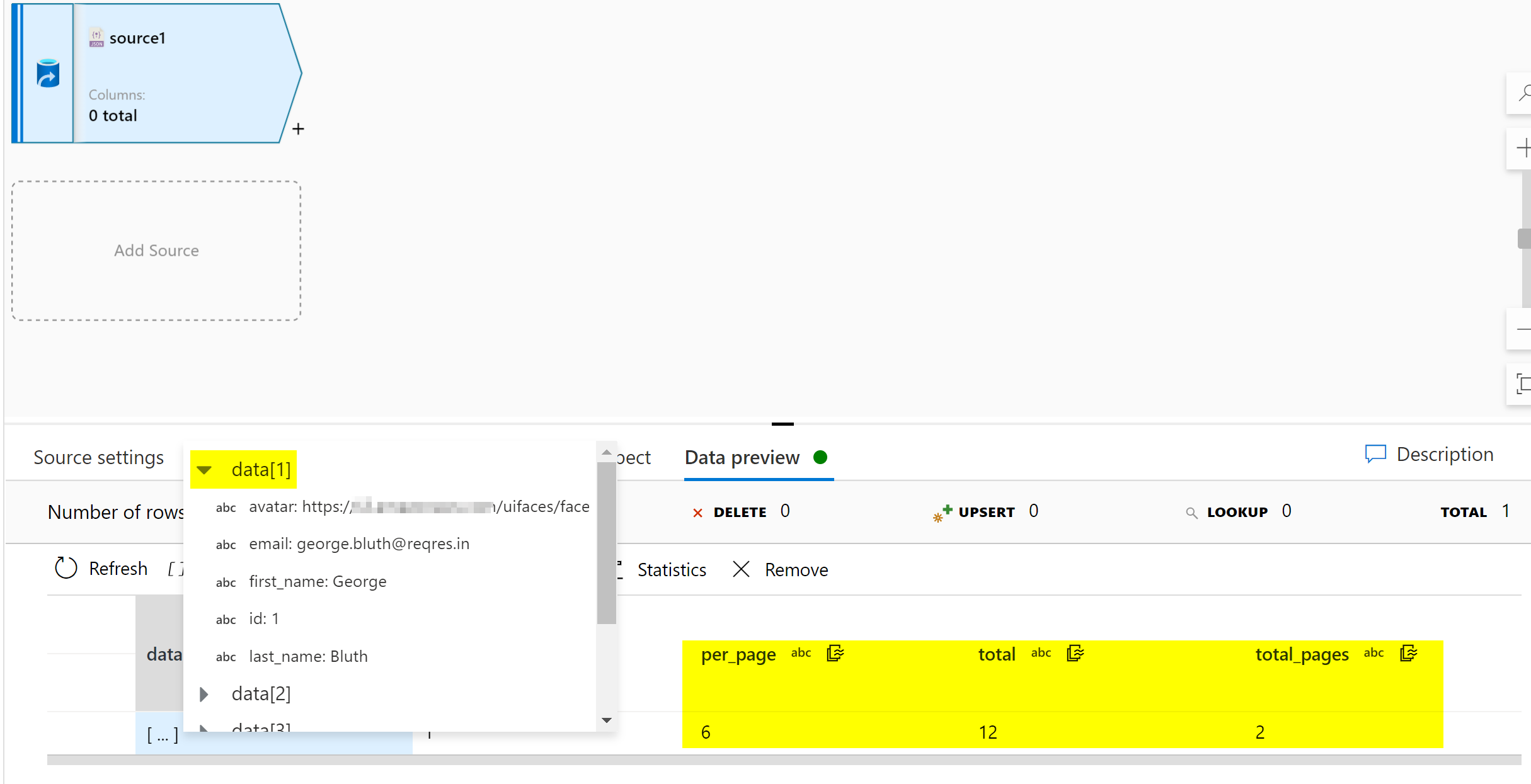

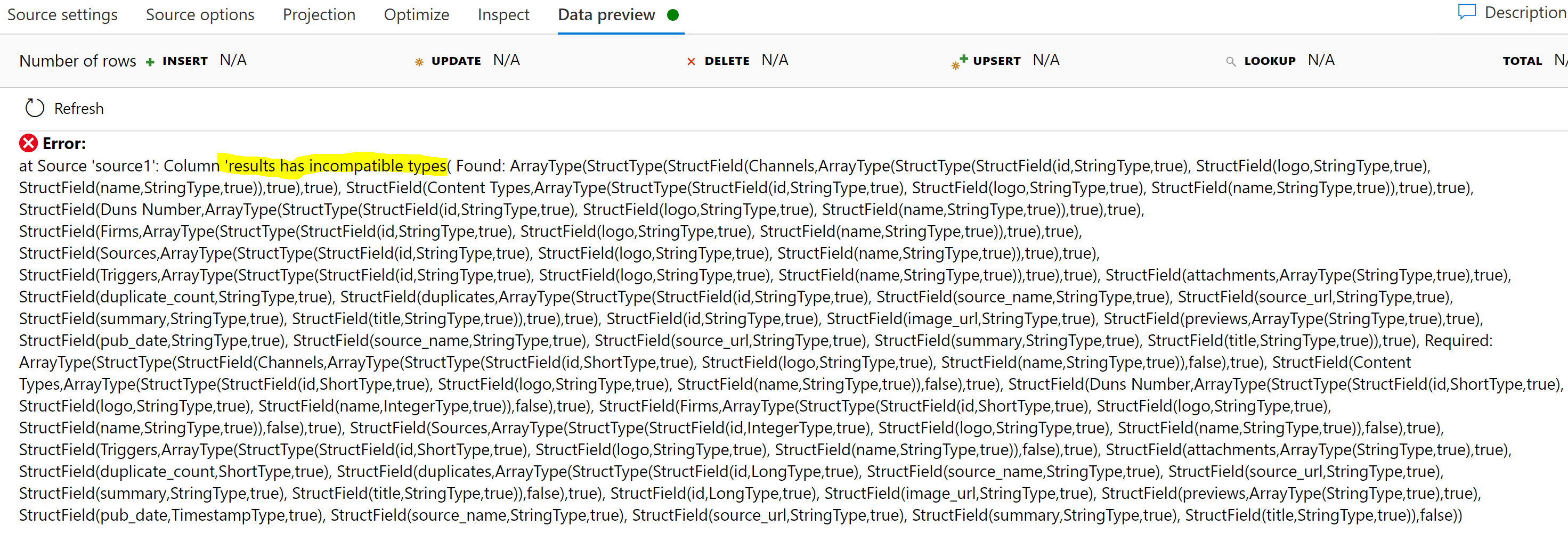

If I understand correctly, you have the same file/data written to blob storage by two different mechanisms, after which Mapping Data Flow cannot read one of them.

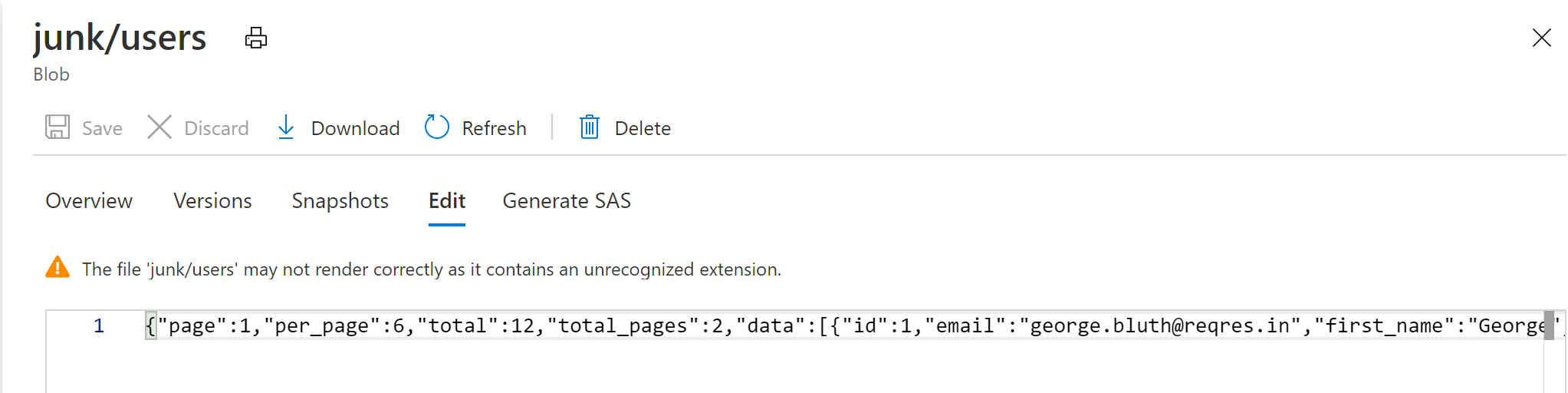

In the happy case, you called some API yourself and then manually uploaded the response as a blob.

In the other case, some service called the same API, got the same response, and wrote it to blob storage.

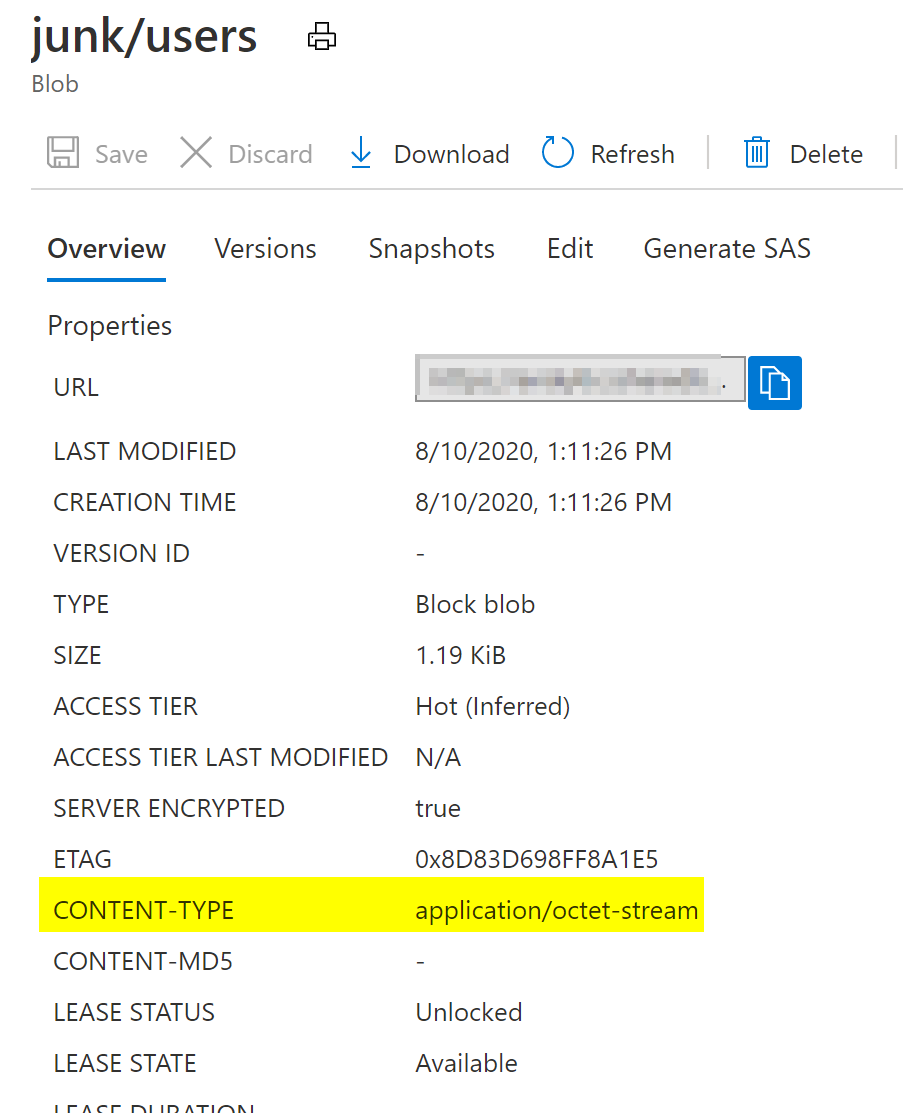

First, can you compare the file sizes or MD5s? Or do a diff on them to find the difference?

Also, please share a few properties of both blobs, specifically the blob type and the content type.
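
If it helps with the size/MD5/diff comparison, here is a minimal Python sketch that works on local copies of the two blobs once you have downloaded them. The function names `summarize` and `first_difference` are my own for illustration, not part of any Azure SDK. It reports the size, the MD5, the first few bytes (where a byte order mark or other prefix would show up), and the offset of the first differing byte.

```python
import hashlib
from pathlib import Path


def summarize(path):
    """Return the size, MD5 hex digest, and first 4 bytes of a local file."""
    data = Path(path).read_bytes()
    return {
        "size": len(data),
        "md5": hashlib.md5(data).hexdigest(),
        "head": data[:4],  # a BOM or other unexpected prefix would appear here
    }


def first_difference(path_a, path_b):
    """Return the offset of the first differing byte, or None if identical."""
    a = Path(path_a).read_bytes()
    b = Path(path_b).read_bytes()
    for offset, (x, y) in enumerate(zip(a, b)):
        if x != y:
            return offset
    # One file may be a strict prefix of the other.
    if len(a) != len(b):
        return min(len(a), len(b))
    return None
```

For example, with the two downloads saved under placeholder names such as `good.json` and `bad.json`, you could print `summarize("good.json")`, `summarize("bad.json")`, and `first_difference("good.json", "bad.json")` and post the output here.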

Second, can you share what service is writing the bad blob?