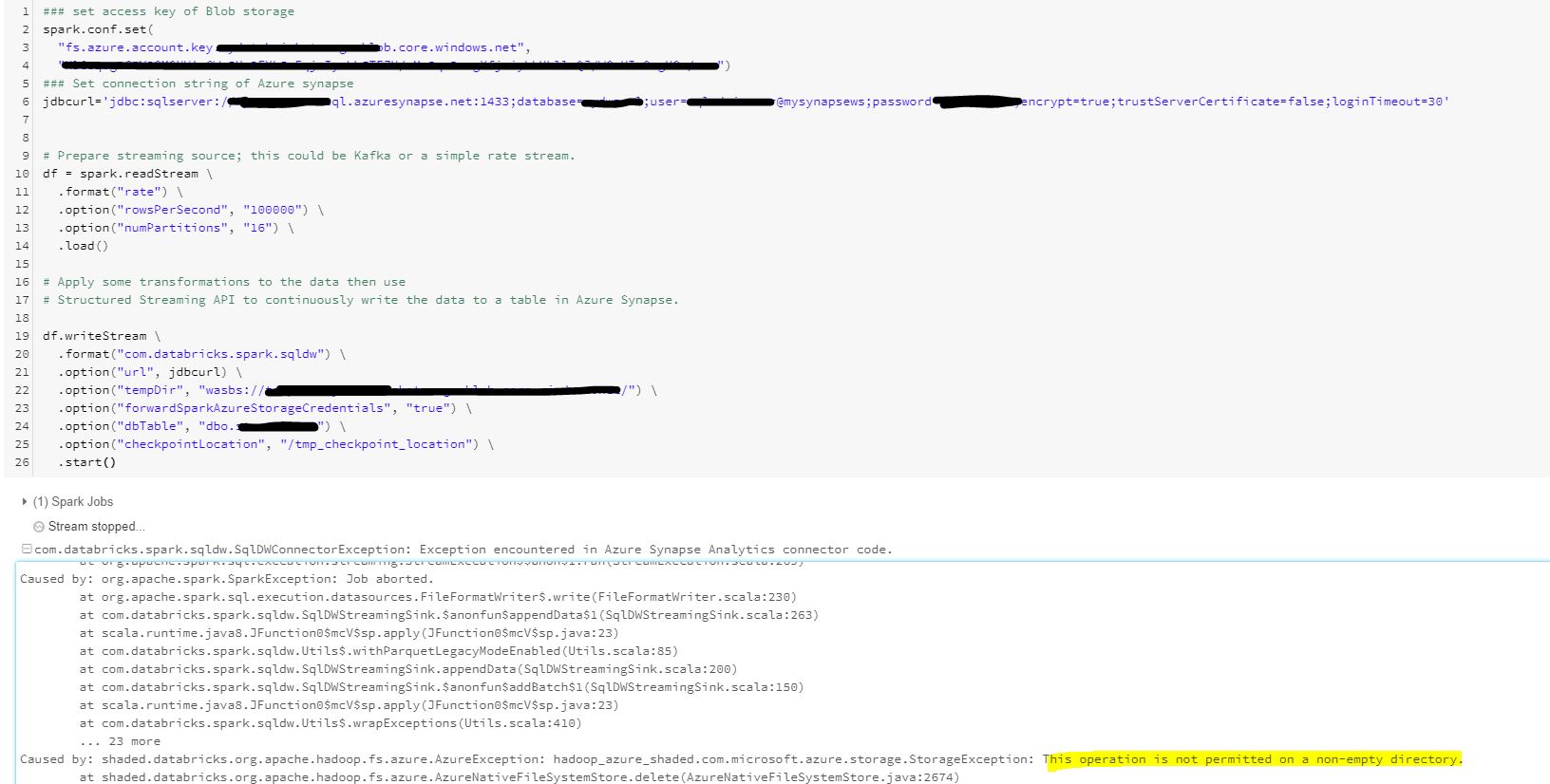

Hello @sakuraime ,

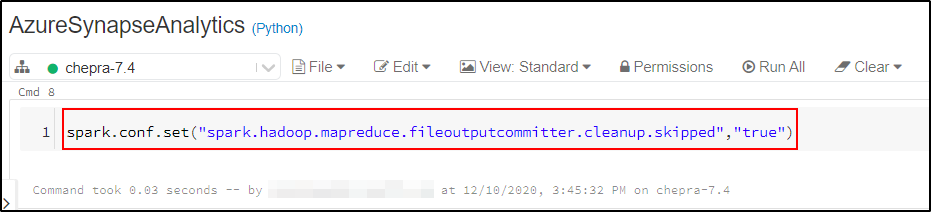

You can resolve this issue by setting the following configuration in your notebook session:

    spark.conf.set("spark.hadoop.mapreduce.fileoutputcommitter.cleanup.skipped", "true")
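
As a minimal sketch, the setting can be applied through a small helper and read back to confirm it is in place for the session. The helper name `skip_committer_cleanup` is hypothetical; it only assumes an object that exposes `conf.set` and `conf.get`, as the notebook's `spark` session does.

```python
# Configuration key taken from the answer above.
CLEANUP_SKIPPED_KEY = "spark.hadoop.mapreduce.fileoutputcommitter.cleanup.skipped"


def skip_committer_cleanup(spark):
    """Set the cleanup-skipped flag on the session and return the value read back.

    `spark` is assumed to be the notebook's SparkSession (hypothetical helper).
    """
    spark.conf.set(CLEANUP_SKIPPED_KEY, "true")
    return spark.conf.get(CLEANUP_SKIPPED_KEY)
```

In a notebook you would call `skip_committer_cleanup(spark)` once, before starting the streaming write.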

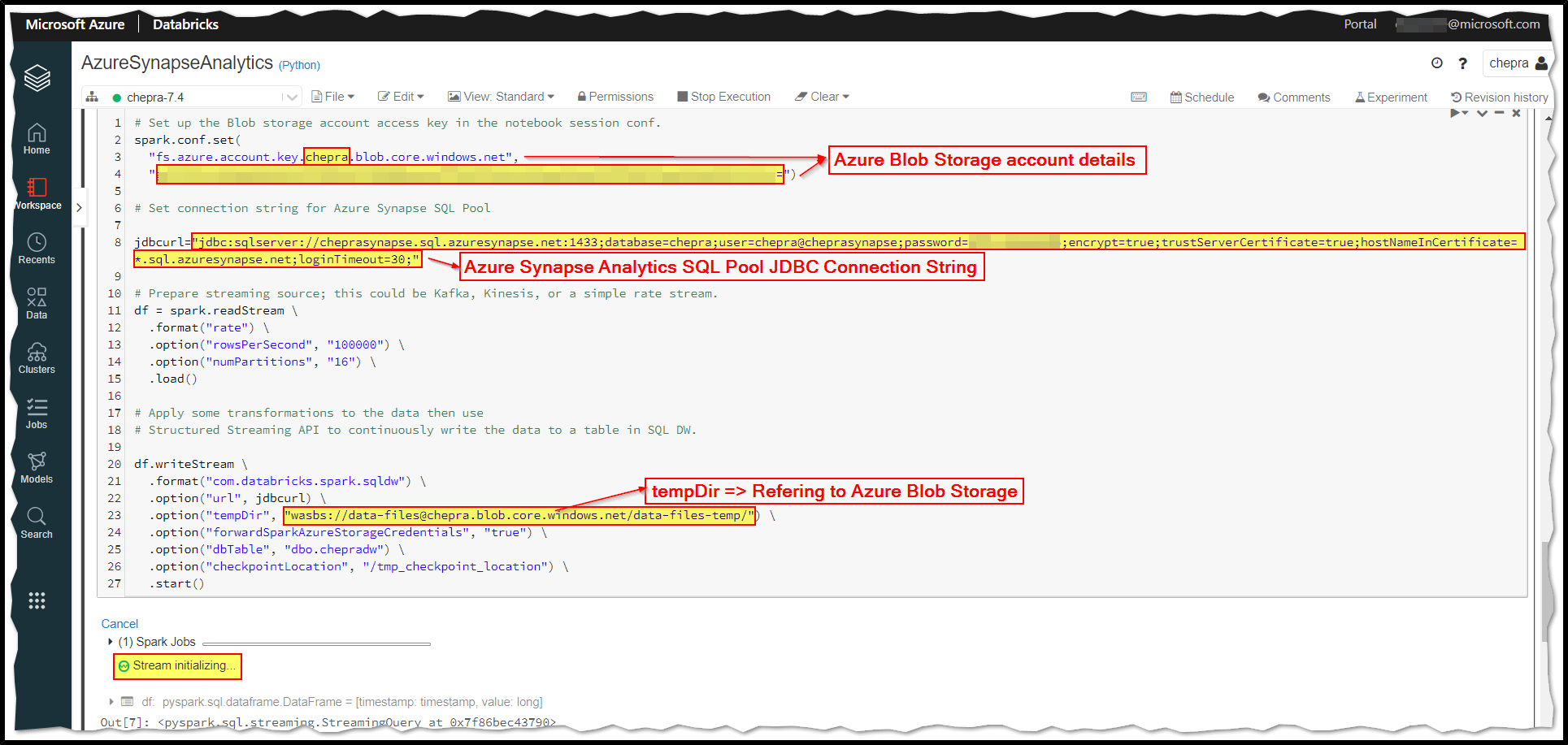

For “tempDir”, we recommend you use a dedicated Blob storage container for the Azure Synapse Analytics SQL pools.
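
To keep the dedicated container consistent between the access-key setting and the `tempDir` option, the two strings can be built from the same names. This is only a sketch: the helper names and the sample account, container, and directory names are made up for illustration.

```python
def account_key_conf(storage_account):
    """Name of the session conf entry that holds the Blob storage access key."""
    return f"fs.azure.account.key.{storage_account}.blob.core.windows.net"


def synapse_temp_dir(container, storage_account, directory):
    """Build the wasbs:// URI to pass as the `tempDir` option."""
    directory = directory.strip("/")  # avoid doubled or trailing slashes
    return f"wasbs://{container}@{storage_account}.blob.core.windows.net/{directory}"


# Example with made-up names:
temp_dir = synapse_temp_dir("synapse-temp", "mystorageacct", "streaming/tempdir")
print(temp_dir)
# wasbs://synapse-temp@mystorageacct.blob.core.windows.net/streaming/tempdir
```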

Here is a Python example of Structured Streaming:

    # Set up the Blob storage account access key in the notebook session conf.
    spark.conf.set(
        "fs.azure.account.key.<your-storage-account-name>.blob.core.windows.net",
        "<your-storage-account-access-key>")

    # Prepare streaming source; this could be Kafka or a simple rate stream.
    df = spark.readStream \
        .format("rate") \
        .option("rowsPerSecond", "100000") \
        .option("numPartitions", "16") \
        .load()

    # Apply some transformations to the data then use
    # Structured Streaming API to continuously write the data to a table in Azure Synapse.
    df.writeStream \
        .format("com.databricks.spark.sqldw") \
        .option("url", "jdbc:sqlserver://<the-rest-of-the-connection-string>") \
        .option("tempDir", "wasbs://<your-container-name>@<your-storage-account-name>.blob.core.windows.net/<your-directory-name>") \
        .option("forwardSparkAzureStorageCredentials", "true") \
        .option("dbTable", "<your-table-name>") \
        .option("checkpointLocation", "/tmp_checkpoint_location") \
        .start()
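
If you want to check that the stream is actually running, one option is to keep the object returned by `.start()` and poll it a few times. The sketch below assumes you assign that result to a variable (called `query` here); `monitor_query` is a hypothetical helper that relies only on the `isActive`, `status`, and `lastProgress` attributes of a streaming query.

```python
import time


def monitor_query(query, poll_seconds=10, max_polls=6):
    """Print status and last progress while the query is active.

    Returns the number of polls made. `query` is assumed to be the object
    returned by `df.writeStream...start()`.
    """
    polls = 0
    while query.isActive and polls < max_polls:
        print(query.status)        # current status of the query
        print(query.lastProgress)  # None until the first batch has completed
        time.sleep(poll_seconds)
        polls += 1
    return polls
```

For example, `monitor_query(query, poll_seconds=30)` would print the status every 30 seconds for up to six polls and stop early if the query is no longer active.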

The output of the Notebook:

Reference: Azure Databricks - Azure Synapse Analytics - Usage (Streaming).

Hope this helps. Do let us know if you have any further queries.
