Hi @Aneesh Kumar A L,

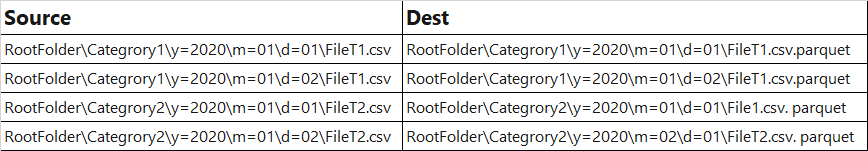

1: Yes, it's possible to keep the target file in the same hierarchy if you use the same file path in the Source and Sink datasets. The file name follows the source file, e.g., source: rootFolder/category/.../test.csv --> destination: rootFolder/category/.../test.parquet. With only the Copy activity and Preserve Hierarchy, I'm afraid it's not yet possible to change the file name to a user-defined one.
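To make the naming rule in point 1 concrete, here is a small Python sketch of the path mapping described there. It is only an illustration, not ADF code: the function name `preserved_target_path`, the example folder `sub`, and the assumption that only the extension changes (as in the test.csv --> test.parquet example) are mine.

```python
from pathlib import PurePosixPath


def preserved_target_path(source_file: str, source_root: str, sink_root: str,
                          new_suffix: str = ".parquet") -> str:
    """Mirror source_file's position under source_root into sink_root, swapping the extension.

    Hypothetical helper: models the Preserve Hierarchy behaviour described above,
    it is not part of Azure Data Factory.
    """
    # Relative part of the path, e.g. "category/sub/test.csv"
    relative = PurePosixPath(source_file).relative_to(PurePosixPath(source_root))
    # Same relative folders under the sink root, same base name, new extension
    target = PurePosixPath(sink_root) / relative
    return str(target.with_suffix(new_suffix))


# Same root for source and sink, as in the example above ("sub" is a made-up folder)
print(preserved_target_path("rootFolder/category/sub/test.csv", "rootFolder", "rootFolder"))
```

Running it prints `rootFolder/category/sub/test.parquet`: the folders and base name are kept, which is why the file name cannot be freely chosen with this setup alone.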

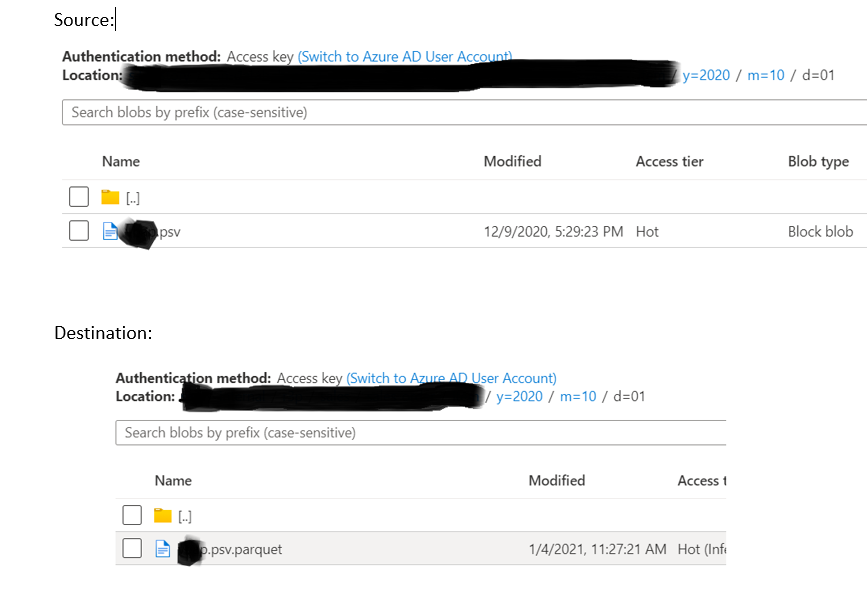

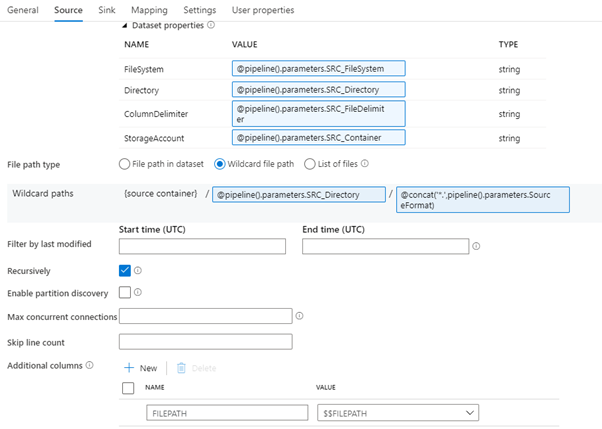

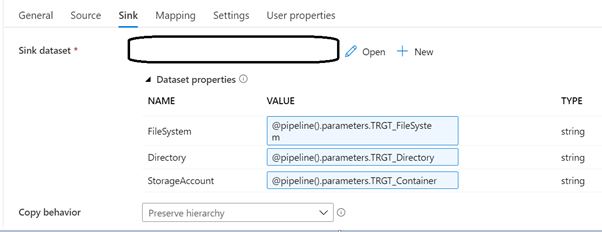

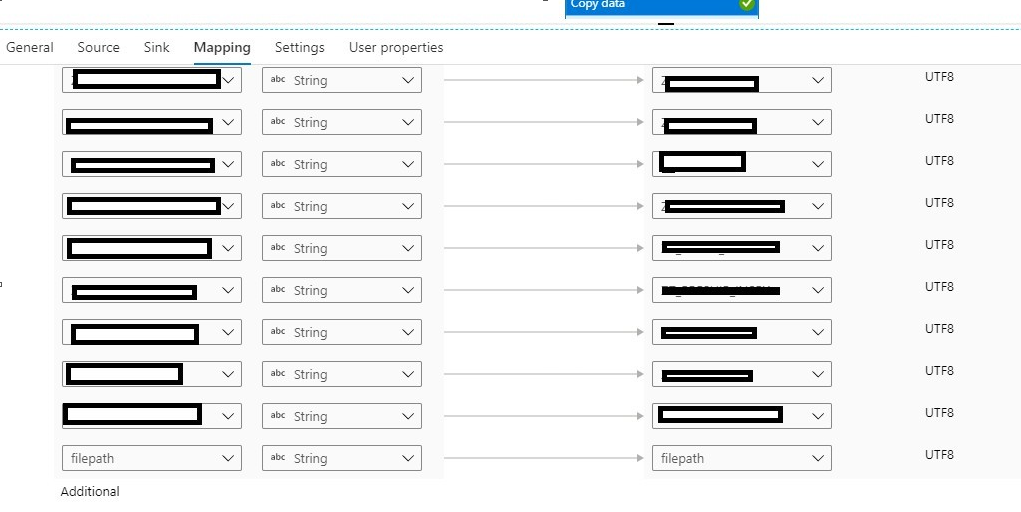

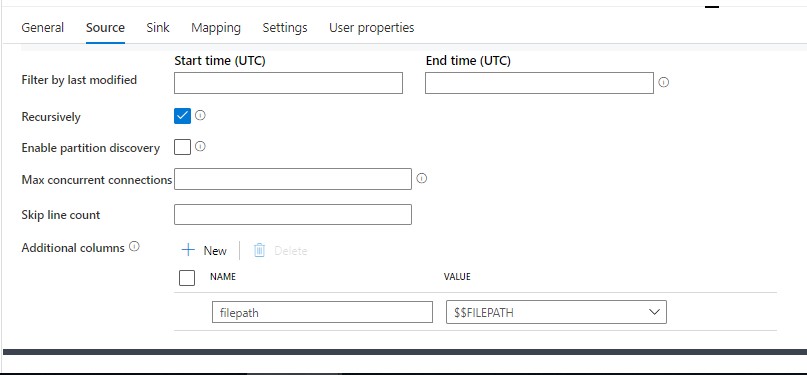

2 and 3: This is possible with the Copy activity alone. Add an additional column with the value $$FILEPATH under CopyActivity --> Source. No further changes are needed on the mapping side unless your source file type is something other than .csv (e.g., .json); in that case, reset the mapping and re-map, and when the filepath column appears, exclude it from the mapping. You will get the relative file path information in the log file. Thanks! :)
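A minimal local sketch of what the $$FILEPATH additional column does: every copied row gets an extra column holding the path of the file it came from, relative to the dataset folder. The `copy_source` dict mirrors the shape of the Copy activity source's `additionalColumns` setting; the column name `filepath`, the helper `add_filepath_column`, and the sample files are hypothetical, and the CSV reading is plain Python, not ADF.

```python
from __future__ import annotations

import csv
import io

# Shape of the Copy activity source setting, written as a Python dict.
# "$$FILEPATH" is the reserved value; the column name "filepath" is a hypothetical choice.
copy_source = {
    "type": "DelimitedTextSource",
    "additionalColumns": [{"name": "filepath", "value": "$$FILEPATH"}],
}


def add_filepath_column(files: dict[str, str], column: str) -> list[dict[str, str]]:
    """Local simulation: tag every row with the relative path of the file it came from."""
    rows = []
    for relative_path, text in files.items():
        for row in csv.DictReader(io.StringIO(text)):
            row[column] = relative_path  # the value ADF would substitute for $$FILEPATH
            rows.append(row)
    return rows


# Hypothetical source files, keyed by their path relative to the dataset folder
files = {
    "category/a/test.csv": "id,value\n1,x\n2,y\n",
    "category/b/other.csv": "id,value\n3,z\n",
}
column_name = copy_source["additionalColumns"][0]["name"]
rows = add_filepath_column(files, column_name)
for row in rows:
    print(row)
```

In ADF itself you only configure the additional column on the Copy activity source; the service fills in the per-file value during the copy.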

Please see the screenshots above for details.

Please let me know if this helps. If the above response helps, please "accept the answer" and "up-vote" the same! Thank you!