Thank you for clarifying the database type, @Gowri Shankar .

There is a tutorial specifically for Netezza migration. This tutorial includes many performance tips. While this tutorial eventually targets Synapse, it also works for blob. There is a section I would like to call out explicitly:

For larger tables (that is, tables with a volume of 100 GB or greater or that can't be migrated to Azure within two hours), we recommend that you partition the data by custom query and then make each copy-job copy one partition at a time. For better throughput, you can run multiple Azure Data Factory copy jobs concurrently. For each copy-job target of loading one partition by custom query, you can increase throughput by enabling parallelism via either data slice or dynamic range

I meant to post the below yesterday, but my message got eaten.

I do have some recommendations that are database agnostic.

First I would like to suggest partitioning your data. Given that it takes a long time to read all of a 1 trillion row table, it will also take a long time to read all of a 1 trillion row blob. If you have any plans to use this blob downstream, breaking this 1 blob up into many smaller blobs can help later services with ingestion speed.

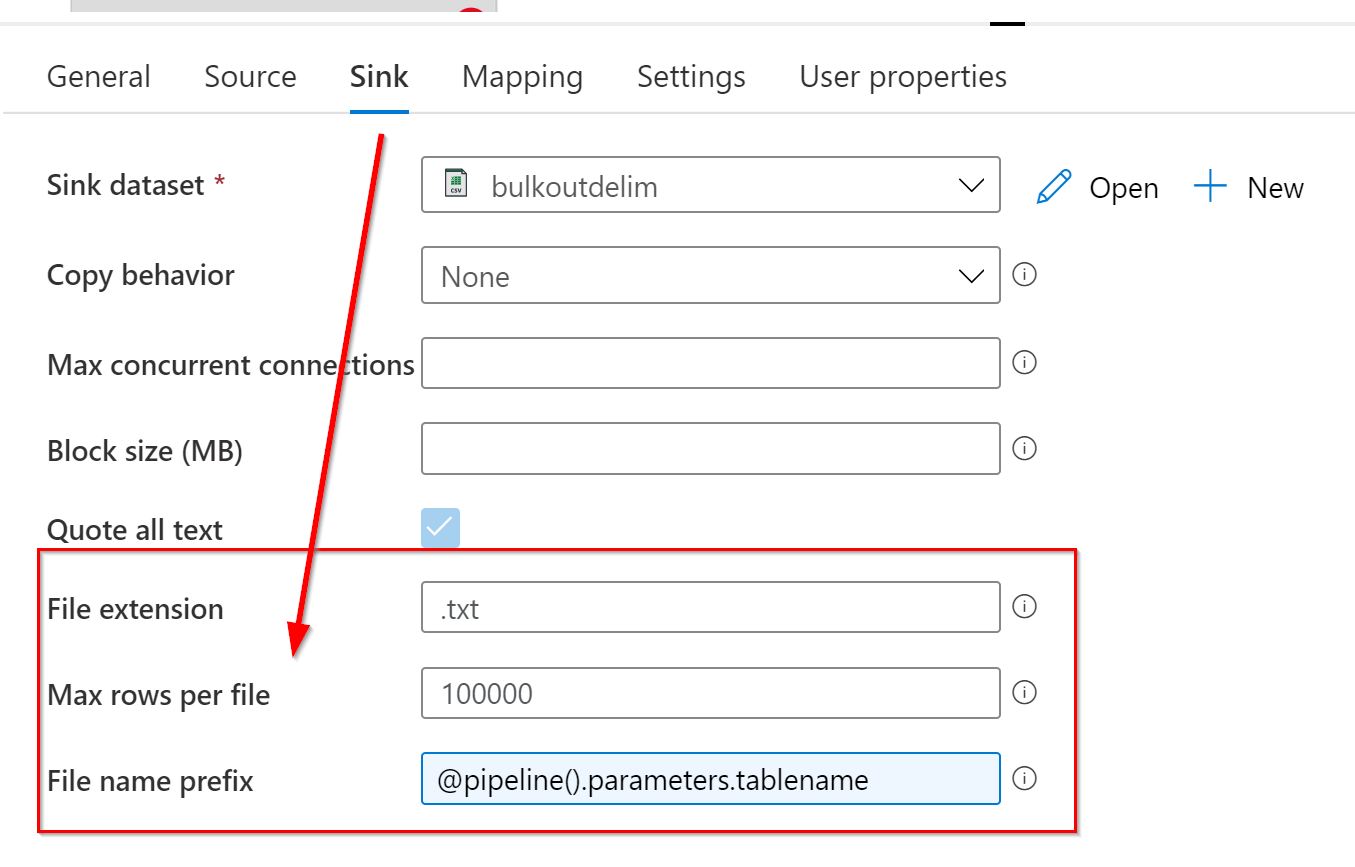

One way to do this when you do not have partitions on source, is to use the Max rows per file feature in the copy activity sink. This works for delimited text dataset, when no filename is specified in the dataset. We can use the File name prefix feature to give it a custom name.

If you do have partitions on source, target your queries or other options to use those instead.

Since your database is on-prem, it is probably in its own network behind a firewall, and not accessible to Azure directly. This means you would be using a Self-Hosted Integration Runtime (SHIR). Since the job is so big, scaling out the SHIR might be helpful. Scaling out consists of installing the same SHIR on multiple machines and registering with the same key. Each "node" increases the available parallelism by 4 or 5. The SHIR can be very resource-hungry, so decent hardware helps.

Parallelism is important. With too little parallelism, you are left with idle I/O on the database, and the copy takes a long time. With too much parallelism, threads are competing for I/O to the database , outgoing bandwidth, or other resources. This competition ends up slowing down the overall process and it takes too long.