@Vineet S - Thanks for the question and for using the MS Q&A platform.

Here is an example of copying a file from one location to another:

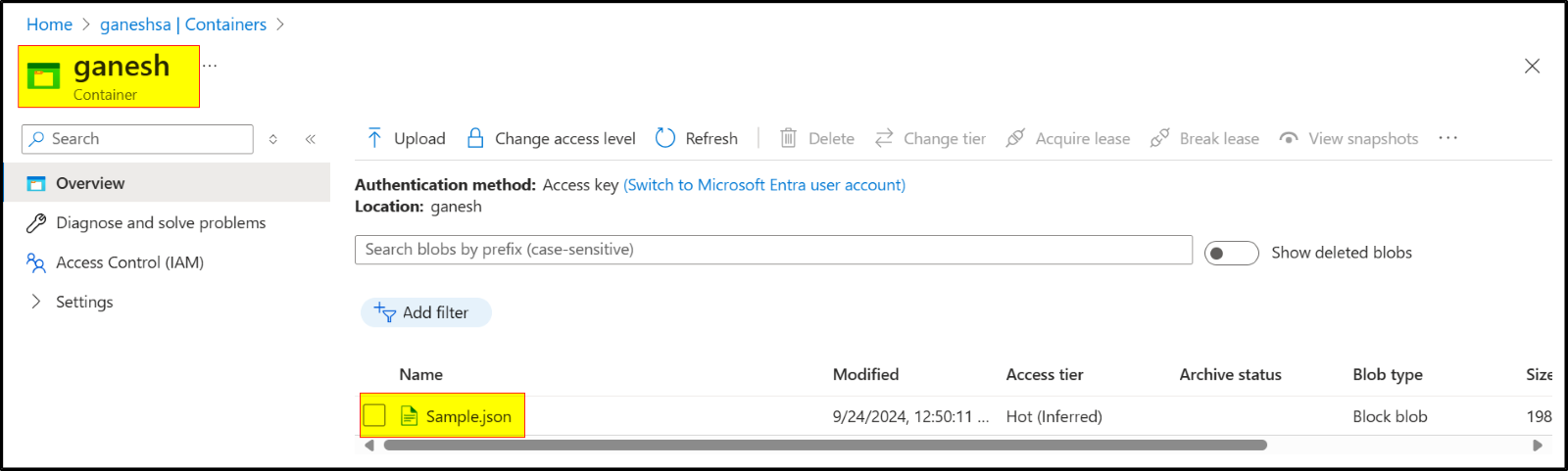

Step1: Create a storage account and, in it, two containers, Input/ganesh (the source) and Output/sravan (the destination), between which the file will be copied.

Note: A sample JSON file was uploaded to the Input container in the storage account.

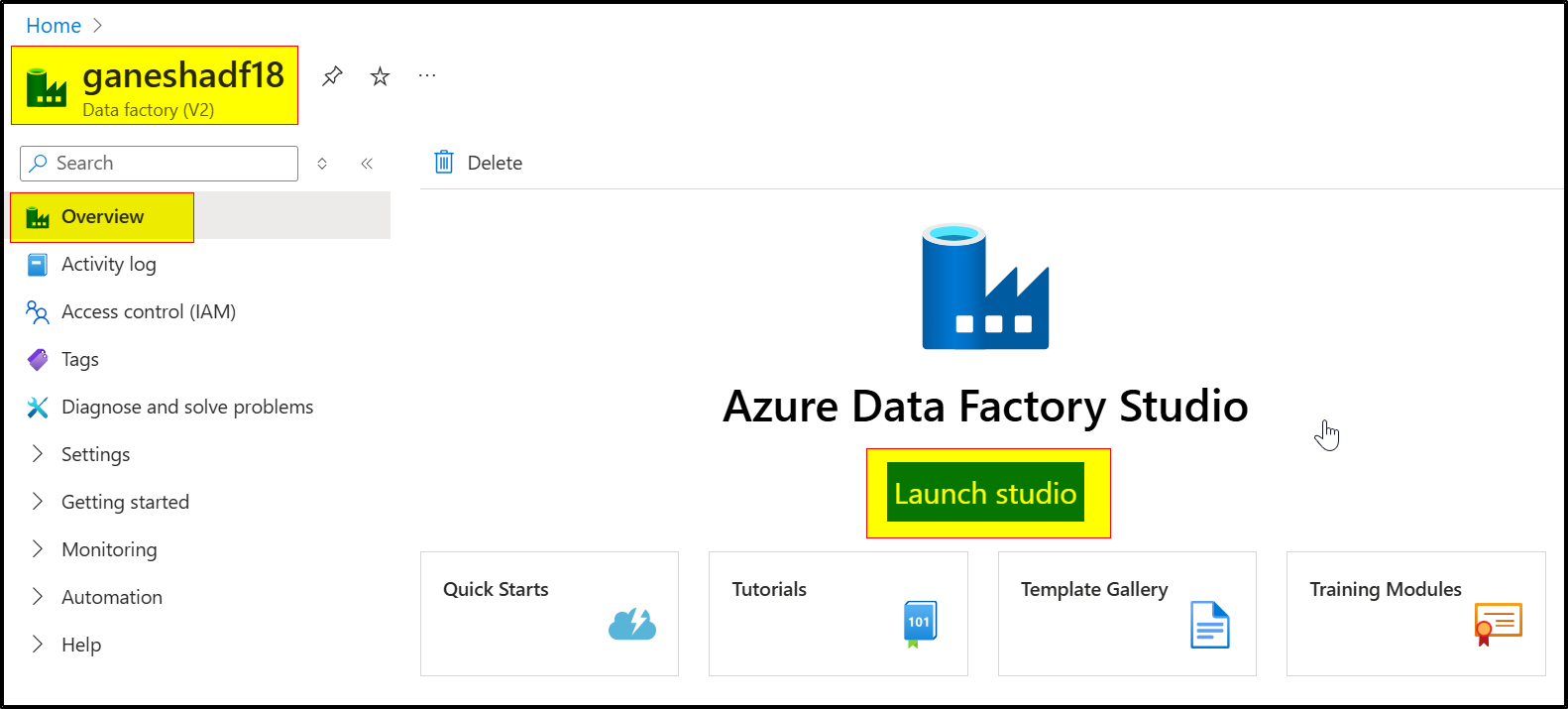
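If you script the container creation instead of using the portal, it can help to check the names first. Blob container names must be 3 to 63 characters long and may contain only lowercase letters, digits, and single hyphens between them, so a name typed as "Input" would need to be created as "input". A minimal sketch of that check (the function name is just an illustration, not part of any Azure SDK):

```python
import re

# Lowercase letters and digits, optionally separated by single hyphens.
# This also rules out leading, trailing, and consecutive hyphens.
_CONTAINER_PATTERN = re.compile(r"[a-z0-9]+(?:-[a-z0-9]+)*")


def is_valid_container_name(name: str) -> bool:
    """Return True if `name` satisfies the blob container naming rules."""
    return 3 <= len(name) <= 63 and _CONTAINER_PATTERN.fullmatch(name) is not None


for candidate in ["input", "Input", "output", "my--data"]:
    print(candidate, is_valid_container_name(candidate))
```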

Step2: Create an Azure Data Factory and launch Azure Data Factory Studio.

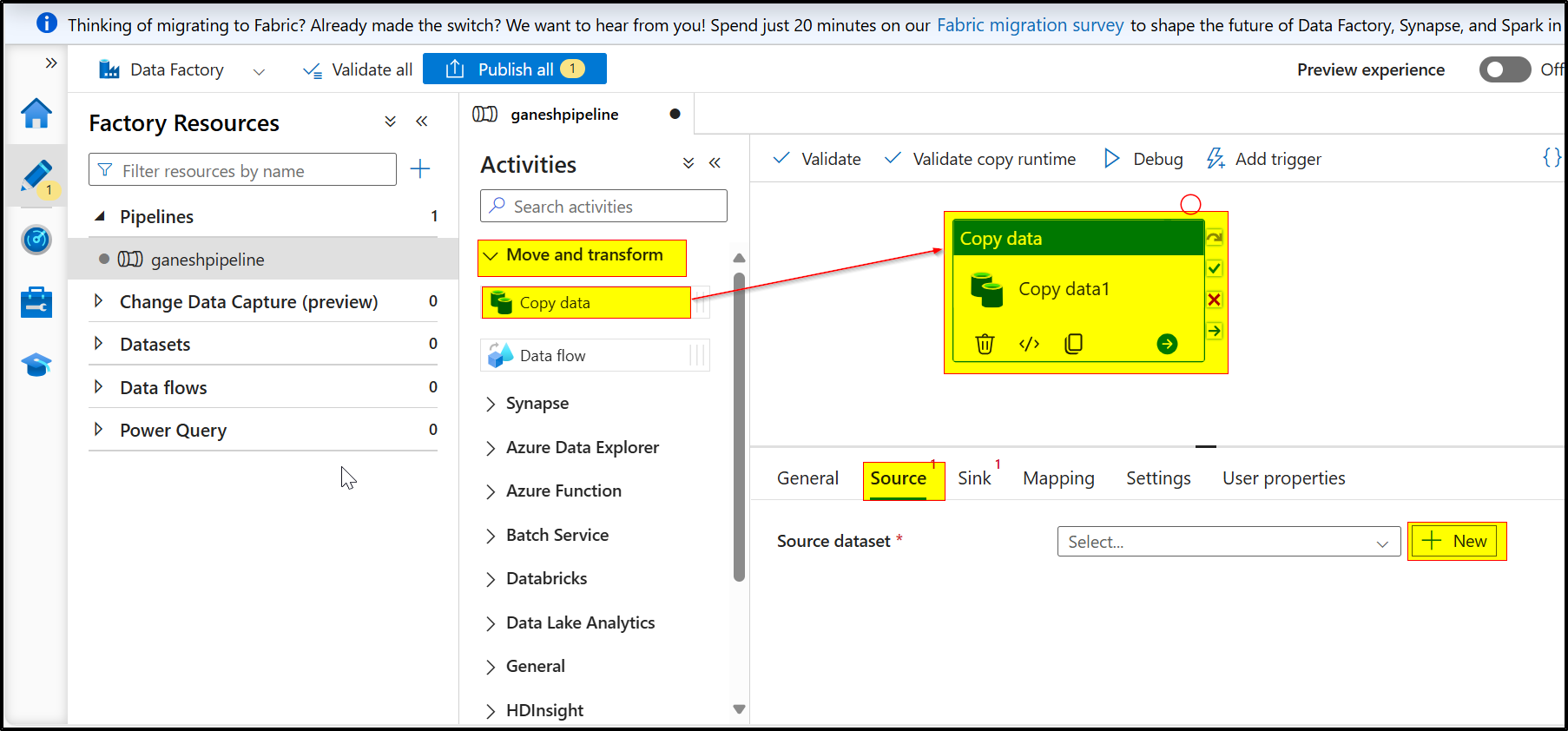

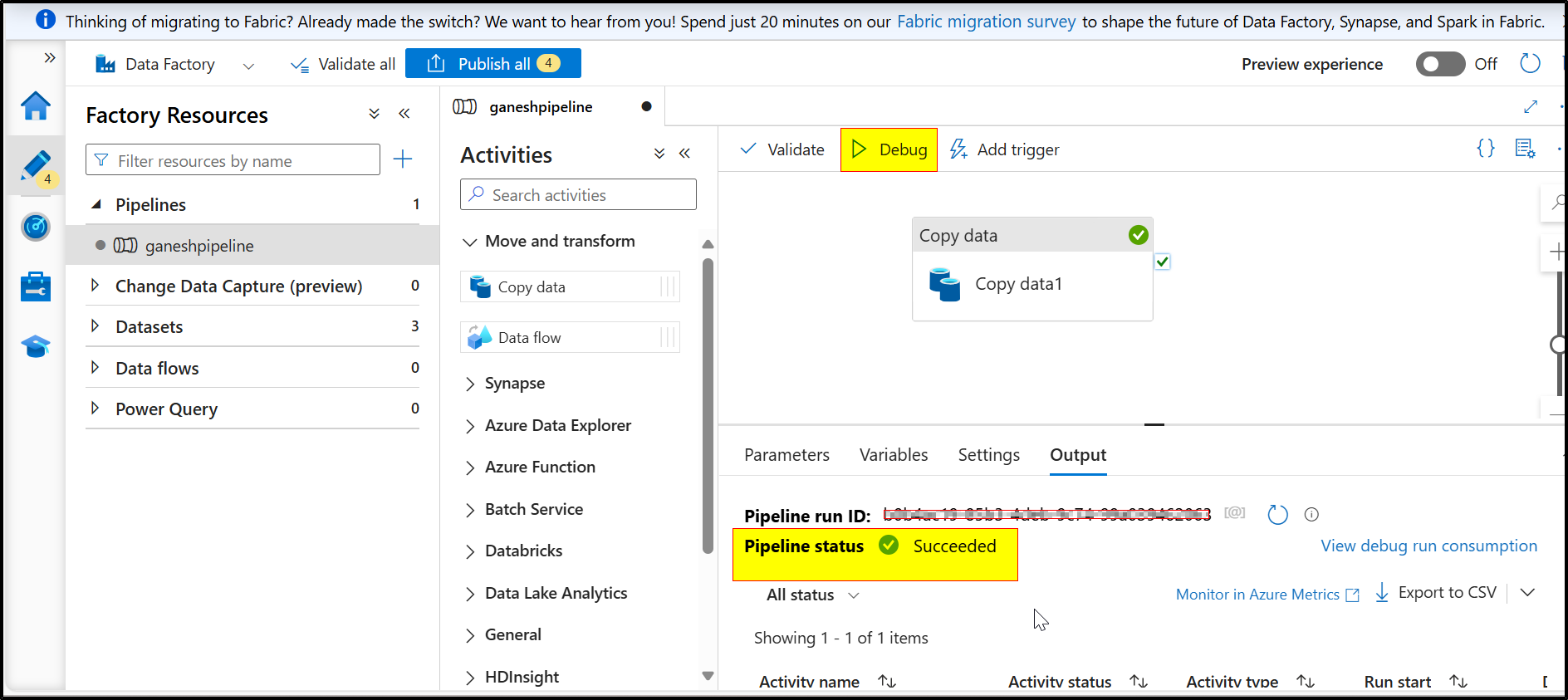

Step3: After the studio opens, click "Author," then "Pipelines," then "New pipeline," and enter a name for the pipeline, such as CopyFromOneLocationToAnother (ganeshpipeline here).

Step4: Click on "Move and transform," then drag and drop the "Copy activity" into the middle area. Next, click on "Source," then click "New." Enter a name for the dataset, choose the data store, and select the file type you want to copy.

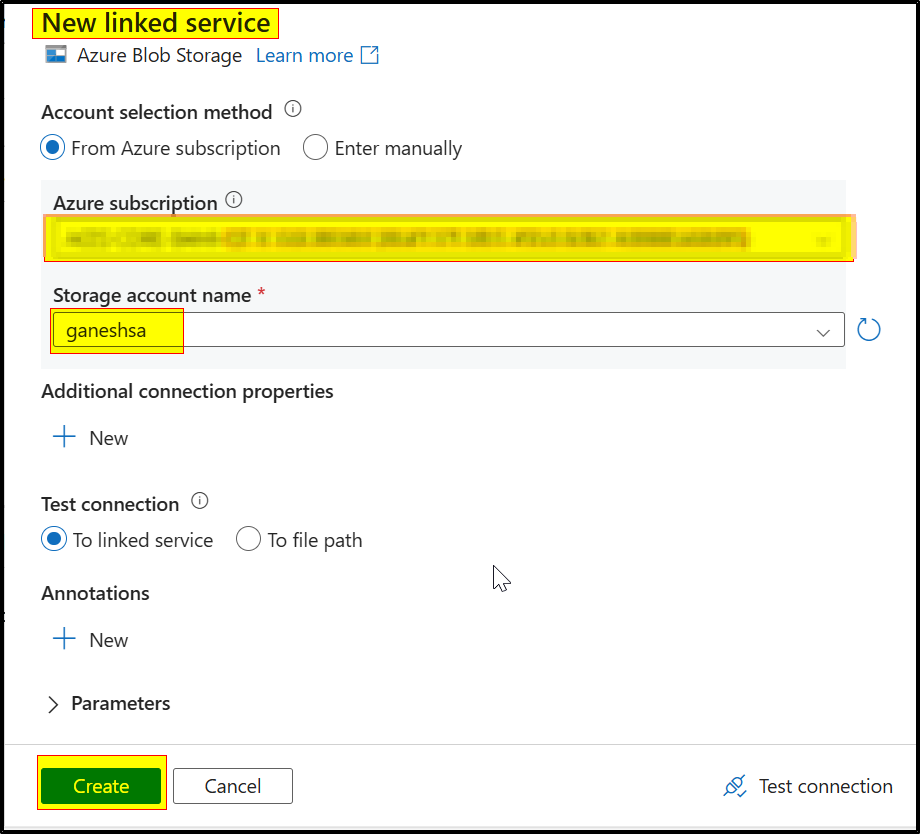

Step5: Now, select the subscription and the storage account name that you created, and then click "Create."

Step6: Choose the folder where the file is located, then select the file.

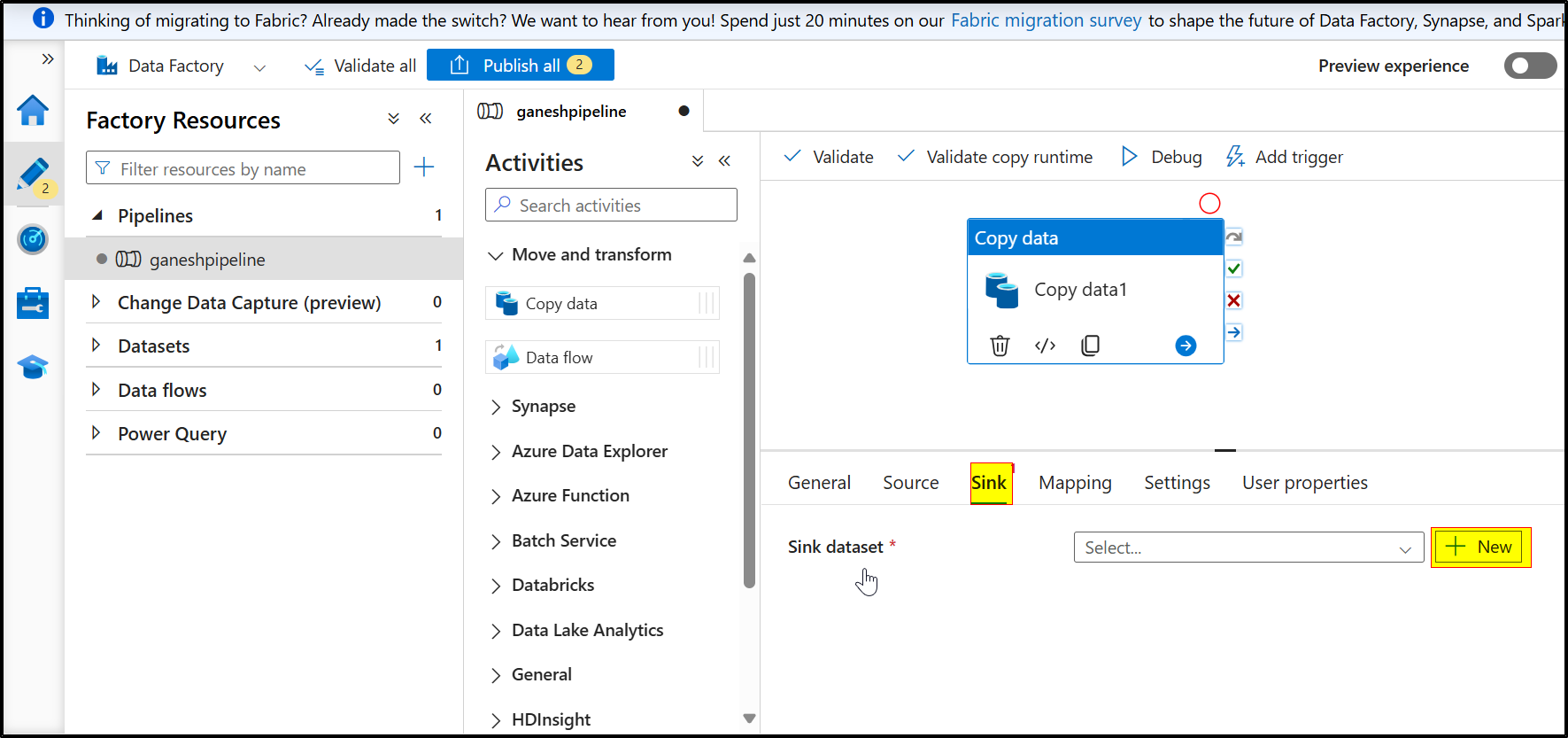
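For reference, the source dataset configured in Steps 4 to 6 corresponds roughly to a JSON definition like the one built below. This is only a sketch: the dataset name, the linked service name `AzureBlobStorage1`, the lowercase container name, and the file name `sample.json` are assumptions for illustration, and the definition the Studio actually generates may carry additional properties.

```python
import json


def blob_json_dataset(name, linked_service, container, folder_path, file_name=None):
    """Build a JSON-format dataset definition that points at Azure Blob Storage."""
    location = {
        "type": "AzureBlobStorageLocation",
        "container": container,
        "folderPath": folder_path,
    }
    # A sink dataset usually names only the folder, so the file name is optional.
    if file_name is not None:
        location["fileName"] = file_name
    return {
        "name": name,
        "properties": {
            "linkedServiceName": {
                "referenceName": linked_service,
                "type": "LinkedServiceReference",
            },
            "type": "Json",
            "typeProperties": {"location": location},
        },
    }


source_dataset = blob_json_dataset(
    name="SourceJsonDataset",            # hypothetical dataset name
    linked_service="AzureBlobStorage1",  # hypothetical linked service name
    container="input",
    folder_path="ganesh",
    file_name="sample.json",             # hypothetical file name
)
print(json.dumps(source_dataset, indent=2))
```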

Step7: Click on "Sink," then click on "New Dataset." Choose the data store and select the file type.

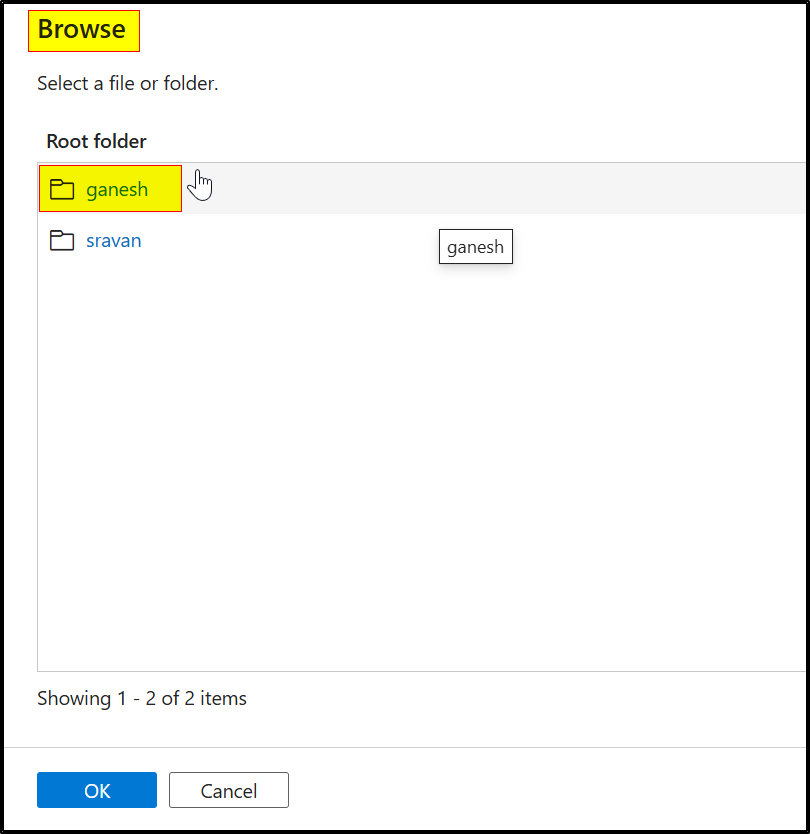

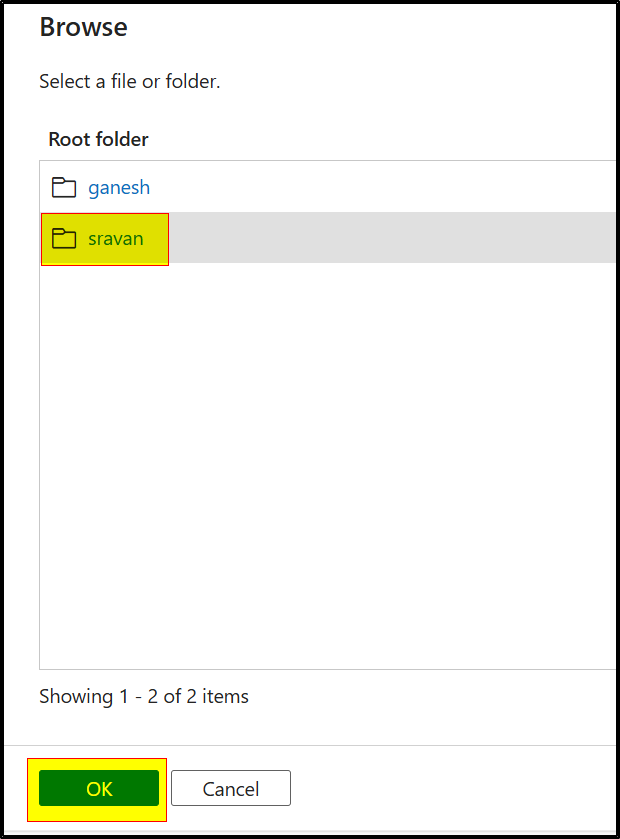

Step8: Now select the folder to which you want to copy the file.

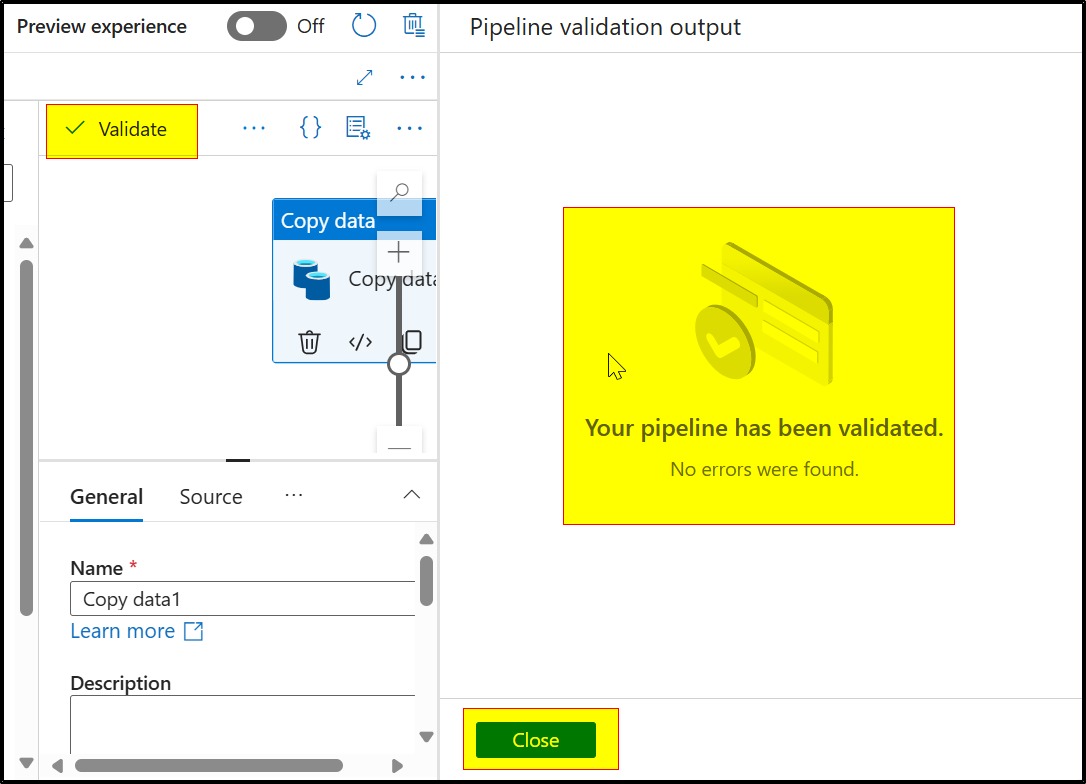
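Once the source and sink are set, the pipeline amounts to a single Copy activity that references the two datasets. The sketch below builds a comparable definition in Python. The activity and dataset names are assumptions for illustration, and the JSON shown in the Studio's code view may include more settings than this.

```python
import json


def copy_pipeline(pipeline_name, source_dataset, sink_dataset):
    """Build a pipeline definition with one Copy activity for JSON files in Blob Storage."""
    activity = {
        "name": "CopyJsonFile",  # hypothetical activity name
        "type": "Copy",
        "inputs": [{"referenceName": source_dataset, "type": "DatasetReference"}],
        "outputs": [{"referenceName": sink_dataset, "type": "DatasetReference"}],
        "typeProperties": {
            "source": {
                "type": "JsonSource",
                "storeSettings": {"type": "AzureBlobStorageReadSettings"},
            },
            "sink": {
                "type": "JsonSink",
                "storeSettings": {"type": "AzureBlobStorageWriteSettings"},
            },
        },
    }
    return {"name": pipeline_name, "properties": {"activities": [activity]}}


pipeline = copy_pipeline(
    "CopyFromOneLocationToAnother",
    source_dataset="SourceJsonDataset",  # hypothetical dataset names
    sink_dataset="SinkJsonDataset",
)
print(json.dumps(pipeline, indent=2))
```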

Step9: Click on "Validate" to confirm that the pipeline has no validation errors.

Step10: Click on "Debug," wait for a moment, and you will see that the pipeline status is "Succeeded."

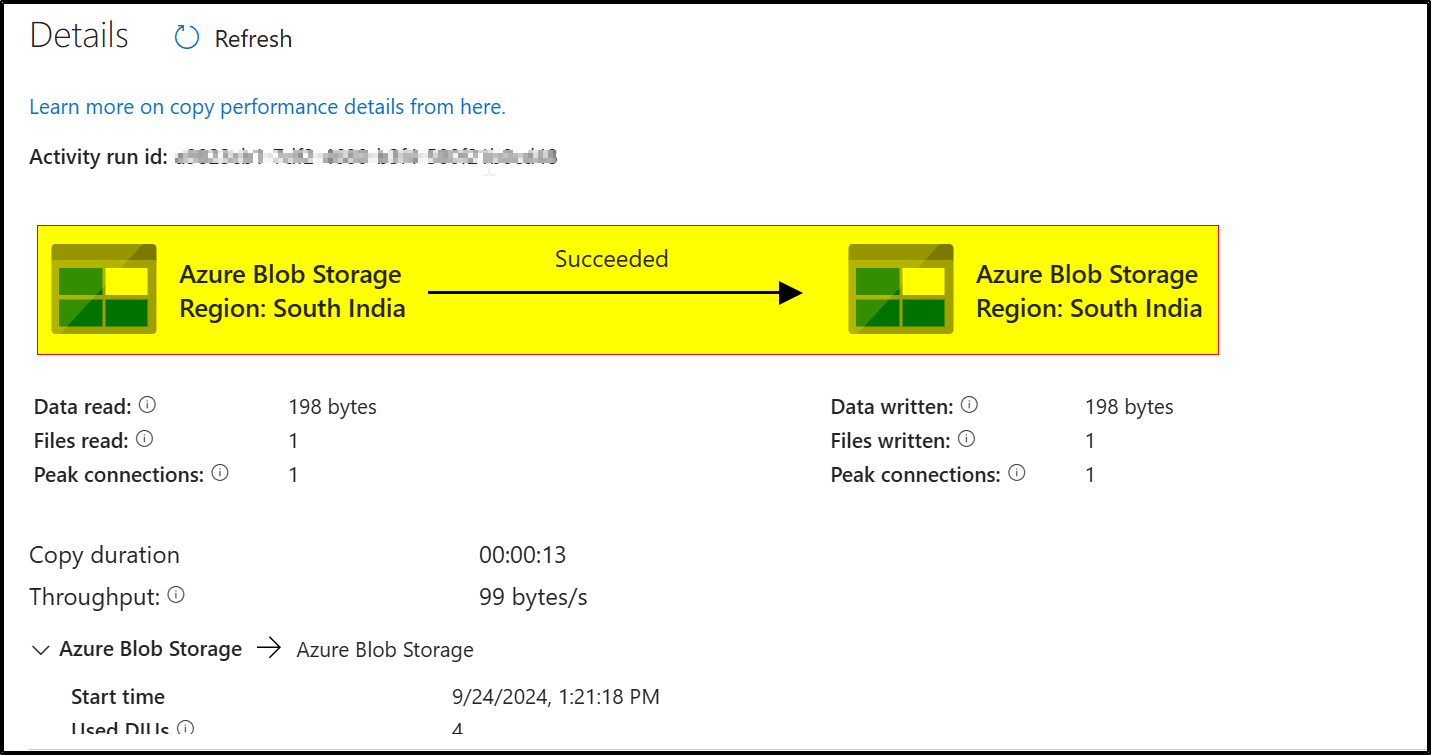
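If you later trigger the pipeline from a script rather than from "Debug," the same wait-until-"Succeeded" step can be sketched as a polling loop. The code below is deliberately generic: `get_status` is a hypothetical callable that you would back with whatever client you use to look up the run, and the status names other than "Succeeded" are assumptions about what the service reports.

```python
import time

# Statuses after which the run will not change any more (assumed names).
TERMINAL_STATUSES = {"Succeeded", "Failed", "Cancelled"}


def wait_for_run(get_status, poll_seconds=5.0, timeout_seconds=600.0, sleep=time.sleep):
    """Poll get_status() until the run reaches a terminal status or the timeout expires."""
    waited = 0.0
    while True:
        status = get_status()
        if status in TERMINAL_STATUSES:
            return status
        if waited >= timeout_seconds:
            raise TimeoutError(f"run still {status!r} after {waited:.0f}s")
        sleep(poll_seconds)
        waited += poll_seconds


# Stand-in status source so the sketch runs without any Azure connection.
fake_statuses = iter(["Queued", "InProgress", "InProgress", "Succeeded"])
result = wait_for_run(lambda: next(fake_statuses), poll_seconds=0, sleep=lambda s: None)
print(result)
```

Passing `sleep` as a parameter keeps the loop easy to test, because a no-op can be substituted for the real delay.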

That summarizes how to copy a file from one location to another.

Hope this helps. Do let us know if you have any further queries.

------------

If this answers your query, please click "Accept Answer" and "Yes" for "Was this answer helpful."