Hello!

It is 2022.02.03 and I'm facing the same issue: trying to load JSON from a REST API into Parquet!

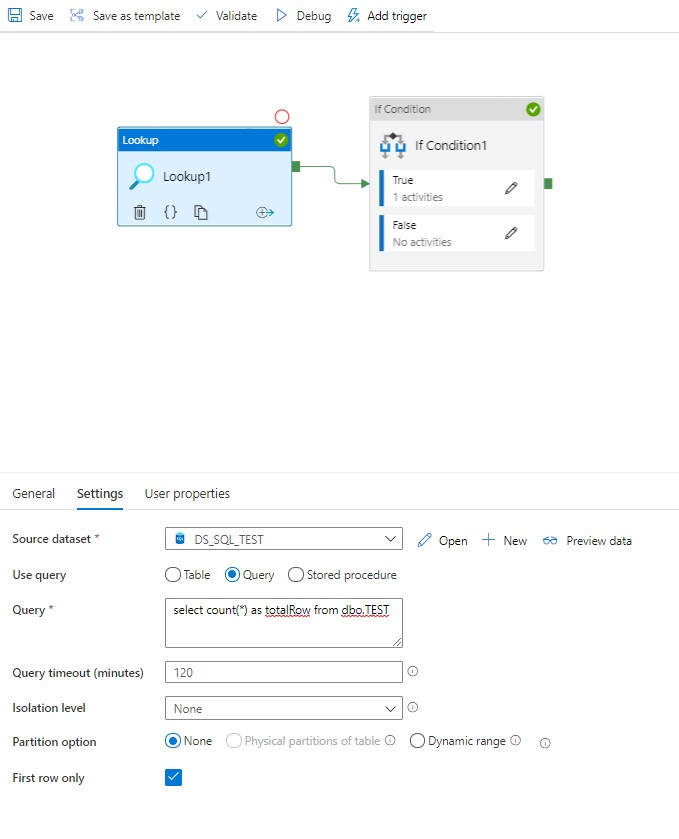

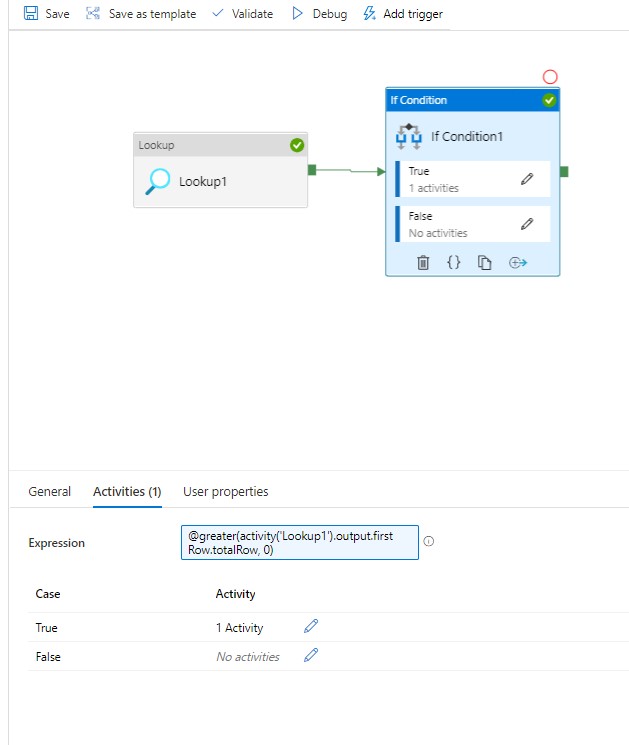

Without a mapping it works great, but only the top-level JSON fields are copied. When I click the "Import schema" button, I can load the full JSON structure, but one of the load iterations fails with an error.

Maybe someone should have raised an issue from this page back in 2020, and it would be fixed by now? =)

Maybe some wise MS Azure developers should start working on this BUG in the near future? ;)

Error code: 2200

Failure type: User configuration issue

Details:

ErrorCode=UserErrorTypeInSchemaTableNotSupported,'Type=Microsoft.DataTransfer.Common.Shared.HybridDeliveryException,Message=Failed to get the type from schema table. This could be caused by missing Sql Server System CLR Types.,Source=Microsoft.DataTransfer.ClientLibrary,''Type=System.InvalidCastException,Message=Unable to cast object of type 'System.DBNull' to type 'System.Type'.,Source=Microsoft.DataTransfer.ClientLibrary,'

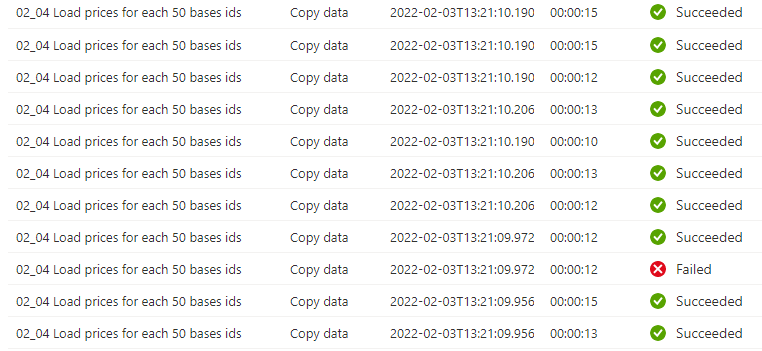

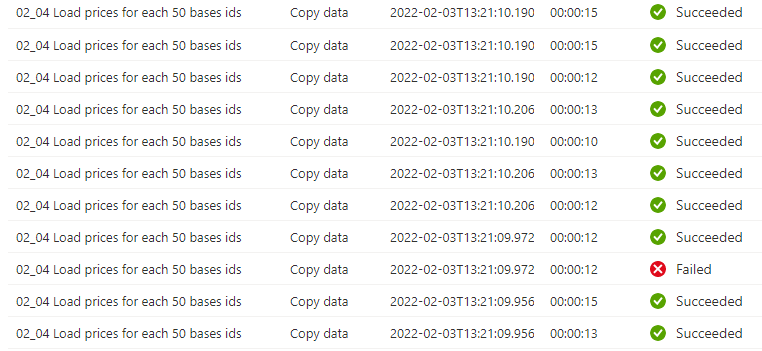
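The error says the copy could not get a type from the schema table (`DBNull` cannot be cast to `System.Type`). One hypothesis worth checking, and it is only a hypothesis, is that the failing iteration returns a page where some field from the imported schema is always `null` or missing, leaving nothing to infer a type from. A minimal Python sketch for comparing pages, assuming you can save each page's records as JSON and load them (for example with `json.load`); the sample data and the helper names `flatten` and `untyped_fields` are hypothetical:

```python
from typing import Any, Iterable


def flatten(obj: Any, prefix: str = "") -> dict[str, Any]:
    """Flatten nested objects into dotted paths; lists are kept as leaf values."""
    out: dict[str, Any] = {}
    if isinstance(obj, dict):
        for key, value in obj.items():
            path = f"{prefix}.{key}" if prefix else key
            if isinstance(value, dict):
                out.update(flatten(value, path))
            else:
                out[path] = value
    return out


def untyped_fields(records: Iterable[dict[str, Any]], expected_fields: set[str]) -> set[str]:
    """Return expected field paths that never hold a non-null value in these records.

    Such a field is either always null or always missing in this page,
    so its values alone say nothing about its type.
    """
    has_value: set[str] = set()
    for record in records:
        for path, value in flatten(record).items():
            if value is not None:
                has_value.add(path)
    return set(expected_fields) - has_value


if __name__ == "__main__":
    # Hypothetical pages of REST API records, standing in for the real responses.
    pages = {
        "page-1": [{"id": 1, "customer": {"name": "A", "email": "a@example.com"}}],
        "page-2": [{"id": 2, "customer": {"name": "B", "email": None}}],
    }
    # Union of all field paths seen in any page, approximating the imported schema.
    expected = set().union(*(flatten(r) for recs in pages.values() for r in recs))
    for name, recs in pages.items():
        print(name, sorted(untyped_fields(recs, expected)))
```

With the sample data, `page-2` reports `customer.email`, since that field is `null` in every record of that page. If the single failing request shows such a field, that would support the hypothesis; if not, the cause lies elsewhere.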

Please note that all requests except one succeed, and the data really is loaded into Parquet: