@Swapnil I understand this is an old post but I have spent a ton of time testing different scenarios for tabular model refreshes so I thought I would share what I learned.

Background first:

· I have SQL Server 2016 (1200 compatibility tabular model), 16 tables (4 calculated date tables).

· The database is about 1.8 GB (according to the DAX Studio VertiPaq Analyzer).

· I have tried all of these processing methods in Visual Studio and SSMS.

· The source data are from a statewide incident management and call center. LIVE is the keyword here. Tickets and calls come in constantly all day. The purpose here is to keep the dashboards as up to date as possible without wiping the data out completely.

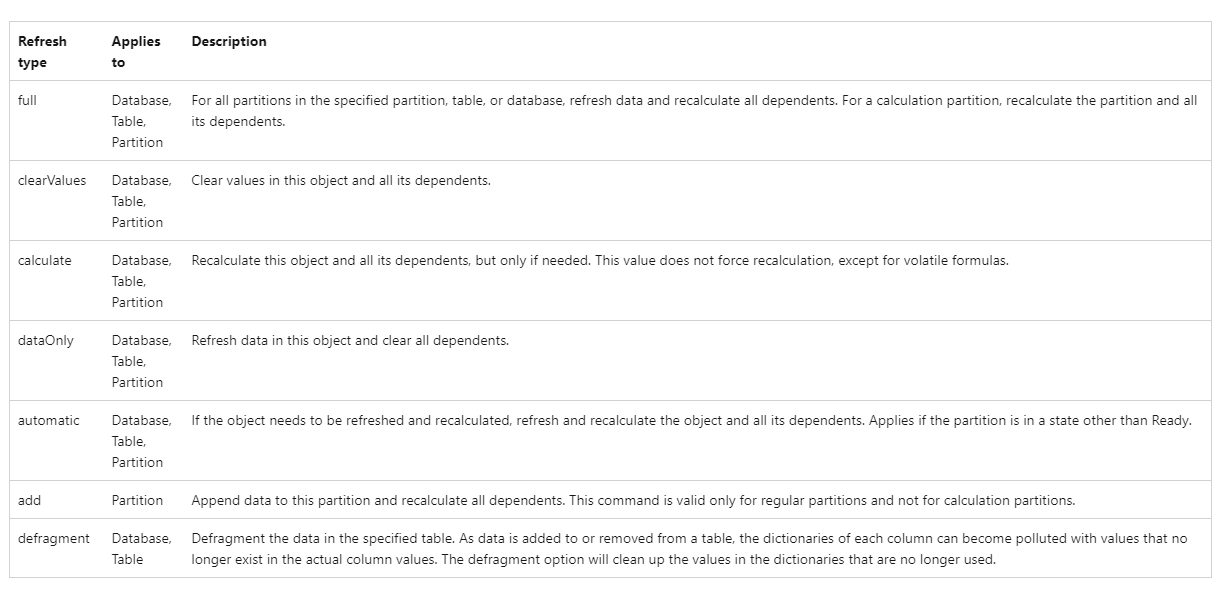

Now, if you are like me, the one-pager from Microsoft about the tabular model Refresh command (TMSL) plus the one about Process database, table, or partition (Analysis Services) is not nearly enough information to explain what is actually happening. However, from them you do get a picture of what is available to try.

There is one thing in particular that strikes me as odd in the table above. For the [calculate] and [automatic] refresh types, what does, “…if needed” and, “If the object needs to be refreshed and recalculated…” mean? I may never find out but what I found is that the results are inconsistent when using these refresh types. In some cases (but not all), I did notice changes in my test dashboard after using automatic refresh.

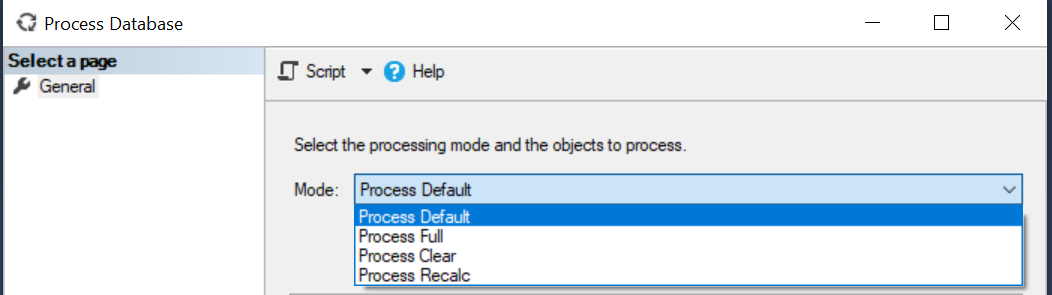

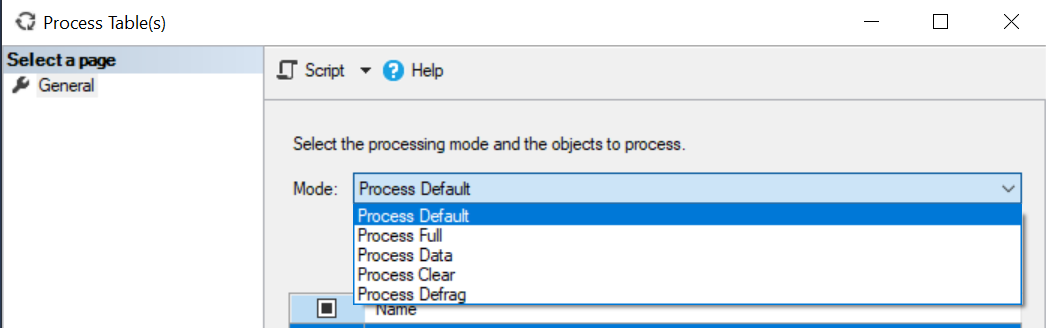

By the way, the refresh keywords used in SSMS and Visual Studio are slightly different than those used for scripting (TMSL) your refresh job in SQL Server Agent (not a big deal but it was not always clear to me that automatic and default were the same).

| SSMS/Visual Studio |

TMSL |

| Full |

Full |

| Clear |

clearValues |

| Recalc |

calculate |

| Data |

dataOnly |

| Default |

automatic |

| Defrag |

defragment |

And here is a sample TMSL script for an automatic refresh of two tables followed by a full refresh of the database:

<Statement xmlns="urn:schemas-microsoft-com:xml-analysis">

{

"sequence": {

"maxParallelism": 1 ,

"operations": [

{

"refresh": {

"type": "automatic" ,

"objects": [

{

"database": "DBName" ,

"table": "Table1"

},

{

"database": "DBName" ,

"table": "Table2"

},

]

}

},

{

"refresh": {

"type": "full" ,

"objects": [

{

"database": "DBName"

}

]

}

}

]

}

</Statement>

So I decided to continue using SQL Server Agent to setup the refresh, and then I tested out different scenarios to see how each behaved. Here’s what I found.

| Refresh Commands |

Outcome |

Approximate Duration |

| dataOnly refresh on tables; calculate refresh on DB |

Wipes data on dashboards until the calculate finishes |

3 minutes |

| automatic refresh on tables and DB |

Not sure; sometimes this does nothing |

3 seconds |

| full refresh on tables and DB |

Works but takes the longest |

3 minutes |

| automatic refresh on tables; full refresh on DB |

Works but takes longer than just full on DB |

3 minutes |

| calculate refresh on DB only |

Nothing |

3 seconds |

| dataOnly refresh on DB only |

Wipes data on dashboards |

2 minutes |

| automatic refresh on DB only |

Works but not every time |

45 seconds |

| full refresh on DB only |

Works every time |

2:30 |

In my case, the full refresh on the database worked the best in terms of consistency and duration. I did not notice a difference (aside from how long it took) in my testing when doing a full refresh on tables followed by a full refresh on the database. If you want to refresh frequently and want to ensure your dashboard stays in working condition without seeing, "Can't display the visual" on everything, I would suggest not using a dataOnly refresh.

A post from Chris Koester was helpful in terms of combining the steps into one rather than having a separate step for each table like I had previously done. However, for me, using a dataOnly refresh on the tables followed by a calculate on the database resulted in disappearing data on the dashboards until the job was complete.

I am sure there will be infinitely more testing on my part but I at least wanted to share some of my experiences so that hopefully others can benefit from this information.