Hi @Shahin Mortazave ,

If you want to pull data into Azure SQL DB for a specific date range, you have to do the following:

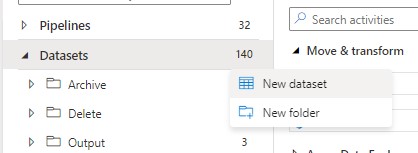

- create Datasets for your on-prem SQL table + your Azure SQL Table

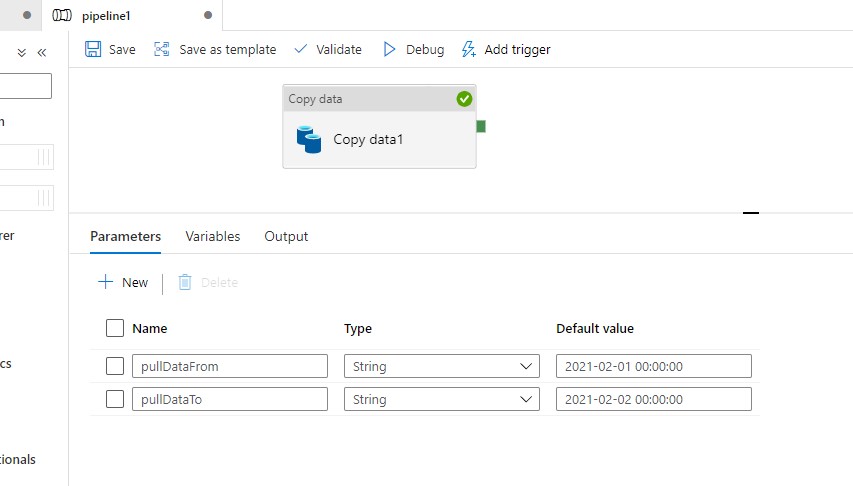

- create a pipeline with two parameters pullDataFrom and pullDataTo

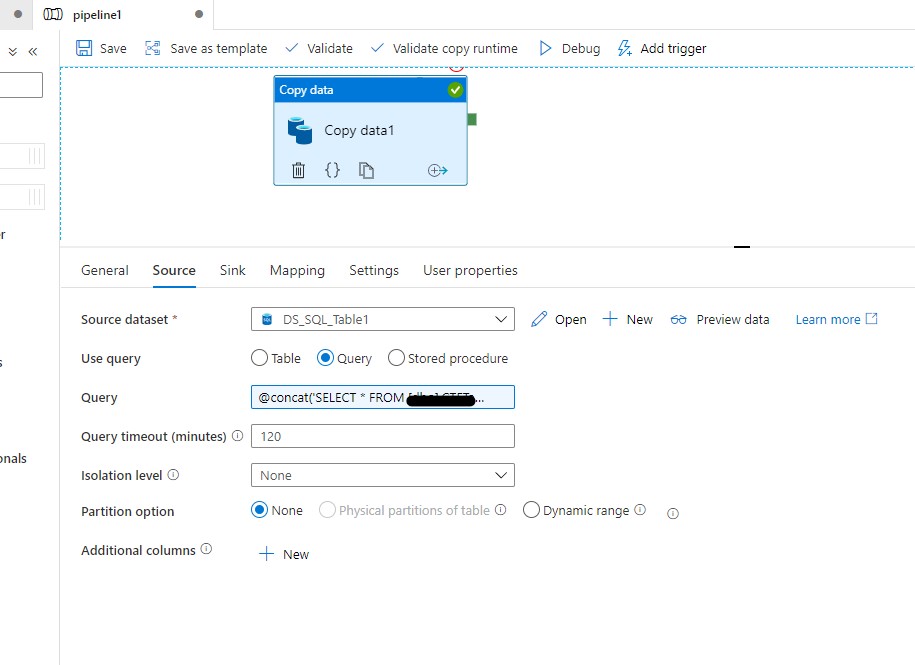

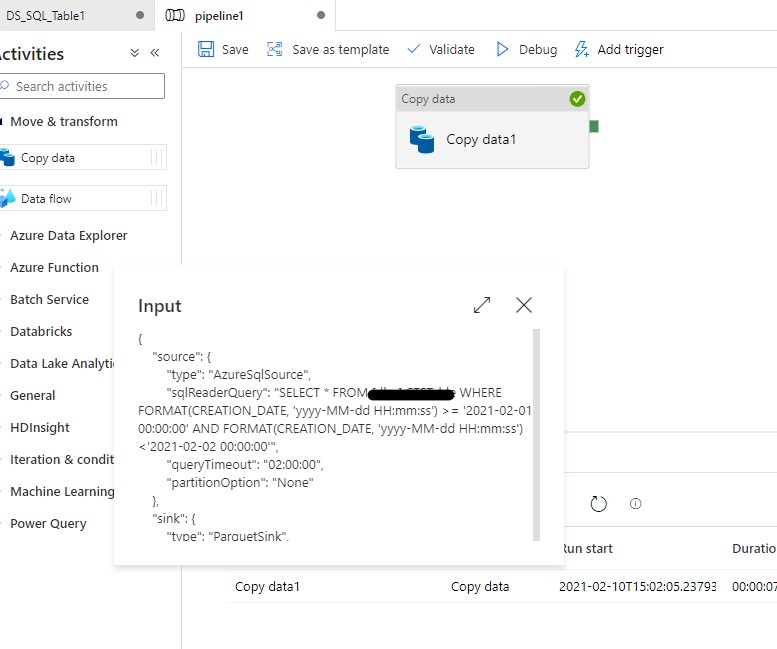

- add a CopyActivity into the pipeline where source --> Dataset_onPrem_Table and sink --> Dataset_Destination_Table

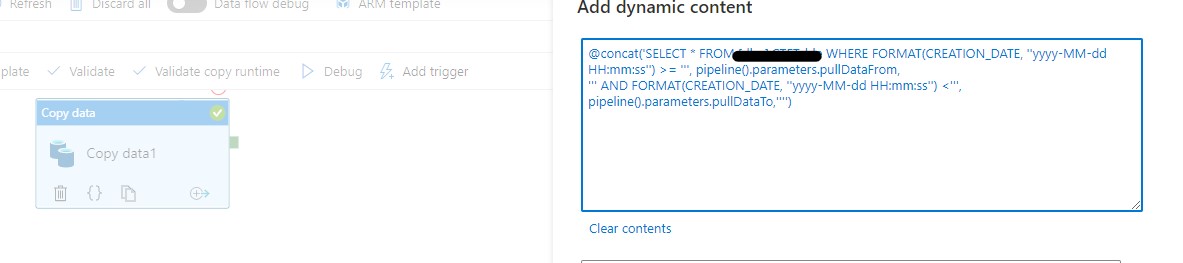

- now, in the CopyActivity > source --> select the Query option and write your select statement there:

@concat('SELECT * FROM Table1 WHERE FORMAT(CREATION_DATE, ''yyyy-MM-dd HH:mm:ss'') >= ''', pipeline().parameters.pullDataFrom, ''' AND FORMAT(CREATION_DATE, ''yyyy-MM-dd HH:mm:ss'') <''', pipeline().parameters.pullDataTo, '''')

- before pulling data on a schedule, run the pipeline once to load all the existing records into the Azure SQL Table by widening the pullDataFrom and pullDataTo range so that it covers everything up to the date your schedule starts.

- in the trigger, you can use the following expressions to dynamically generate the date range, e.g. for pullDataFrom (yesterday at midnight UTC):

@concat(formatDateTime(adddays(utcnow(), -1), 'yyyy-MM-dd'), ' 00:00:00')

and for pullDataTo (today at midnight UTC):

@concat(formatDateTime(utcnow(), 'yyyy-MM-dd'), ' 00:00:00')
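To sanity-check what the pipeline will send to the source, here is a rough Python sketch of what the two trigger expressions and the source query should evaluate to. This is not Data Factory code; the helper names `daily_window` and `build_query` are made up for illustration, and it assumes the parameters are passed as plain strings in `yyyy-MM-dd HH:mm:ss` format.

```python
from datetime import datetime, timedelta, timezone

DATE_FMT = "%Y-%m-%d"  # Python equivalent of the 'yyyy-MM-dd' format string


def daily_window(now):
    """Mimic the trigger expressions: yesterday 00:00:00 up to today 00:00:00."""
    pull_from = (now - timedelta(days=1)).strftime(DATE_FMT) + " 00:00:00"
    pull_to = now.strftime(DATE_FMT) + " 00:00:00"
    return pull_from, pull_to


def build_query(pull_from, pull_to):
    """Mimic the concatenated source query.

    In the Data Factory string literals, '' stands for one single quote,
    so the resulting SQL contains ordinary quoted values.
    """
    return (
        "SELECT * FROM Table1 WHERE FORMAT(CREATION_DATE, 'yyyy-MM-dd HH:mm:ss') >= '"
        + pull_from
        + "' AND FORMAT(CREATION_DATE, 'yyyy-MM-dd HH:mm:ss') <'"
        + pull_to
        + "'"
    )


if __name__ == "__main__":
    start, end = daily_window(datetime.now(timezone.utc))
    print(build_query(start, end))
```

The lower bound is inclusive and the upper bound is exclusive, so consecutive daily runs should neither overlap nor skip rows, as long as the trigger fires once per day.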

Please see the screenshots above for details.

----------

If the above response is helpful, please accept as answer and up-vote it. Thanks!