Hi @Vidyashree Salimath,

Thanks for reaching out on the Microsoft Q&A forum.

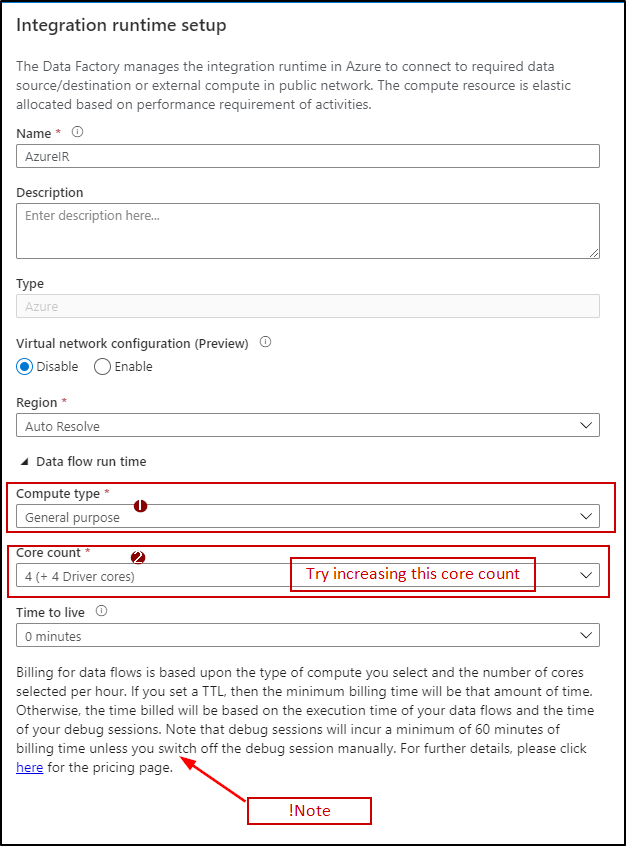

Based on the error message, the issue appears to be caused by overloaded Spark resources. I recommend trying a larger Integration Runtime (IR) with more cores.

If you are using the Memory Optimized compute type, please try General Purpose instead. Also, if you have configured a custom key partition in any transformation, try switching back to "Use current partitioning" so that Spark can handle that optimization for you.
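As a rough illustration, the compute type and core count mentioned above are part of the Azure Integration Runtime definition, under a `dataFlowProperties` block. The Python sketch below simply builds that definition as a dictionary and prints it as JSON. The IR name `DataFlowIR`, the helper `build_dataflow_ir`, and the chosen values are hypothetical, and the field names reflect my understanding of the IR JSON schema, so please verify them against the current Azure Data Factory Integration Runtime reference before relying on them.

```python
import json

# Compute types accepted for data flows (assumption: verify against the
# current Azure Data Factory Integration Runtime reference).
ALLOWED_COMPUTE_TYPES = {"General", "MemoryOptimized"}


def build_dataflow_ir(name, compute_type="General", core_count=16, ttl_minutes=0):
    """Build a hypothetical Azure IR definition sized for mapping data flows.

    The nesting mirrors the JSON of an Azure (Managed) Integration Runtime,
    with data flow sizing under "dataFlowProperties".
    """
    if compute_type not in ALLOWED_COMPUTE_TYPES:
        raise ValueError(f"unsupported compute type: {compute_type}")
    if core_count <= 0:
        raise ValueError("core_count must be positive")
    return {
        "name": name,
        "properties": {
            "type": "Managed",
            "typeProperties": {
                "computeProperties": {
                    "location": "AutoResolve",
                    "dataFlowProperties": {
                        "computeType": compute_type,  # General vs MemoryOptimized
                        "coreCount": core_count,      # more cores = larger Spark cluster
                        "timeToLive": ttl_minutes,    # minutes the cluster stays warm
                    },
                },
            },
        },
    }


if __name__ == "__main__":
    # Example: a 32-core General Purpose IR (hypothetical name "DataFlowIR").
    ir = build_dataflow_ir("DataFlowIR", compute_type="General", core_count=32)
    print(json.dumps(ir, indent=2))
```

In practice you would usually change these settings in the IR's "Data flow runtime" tab in the ADF UI; the sketch only shows which settings the advice above refers to.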

NOTE: Billing for data flows is based on the compute type you select and the number of cores, charged per hour. If you set a TTL, the minimum billing time will be that amount of time. Otherwise, the billed time is based on the execution time of your data flows and the duration of your debug sessions. Note that debug sessions incur a minimum of 60 minutes of billing time unless you switch off the debug session manually. For further details, see the Azure Data Factory pricing page.
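To make the billing rules in the note concrete, here is a rough Python sketch that turns them into billable core-hours. It is a simplified model of the rules as stated in the note, not Microsoft's actual billing logic; the function `billable_core_hours` and its parameters are hypothetical, and no prices are included.

```python
def billable_core_hours(cores, run_minutes, ttl_minutes=0.0,
                        debug_session=False, stopped_manually=False):
    """Rough estimate of billed core-hours (hypothetical, simplified model).

    Assumption: billed minutes are the execution time, raised to the TTL
    when a TTL is set, and raised to 60 minutes for a debug session that
    was not switched off manually.
    """
    minutes = float(run_minutes)
    if ttl_minutes > 0:
        minutes = max(minutes, ttl_minutes)   # TTL acts as the minimum billing time
    if debug_session and not stopped_manually:
        minutes = max(minutes, 60.0)          # 60-minute debug session minimum
    return cores * minutes / 60.0


if __name__ == "__main__":
    print(billable_core_hours(16, 15))                     # 4.0
    print(billable_core_hours(16, 15, ttl_minutes=30))     # 8.0
    print(billable_core_hours(8, 20, debug_session=True))  # 8.0
```

Multiplying the result by the per-core-hour rate for your compute type from the pricing page gives an approximate cost. It also shows why a debug session left running can cost more than a short pipeline run on the same cores.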

If you still encounter the issue after using a larger IR, please share the following details for further investigation:

- Failed pipeline run ID

- Failed activity run ID

- Compute type used in the IR

- Core count used in the IR

- Whether any custom key partitioning is used

I hope the above information helps. Looking forward to your confirmation.

----------

Thank you

Please consider clicking "Accept Answer" and "Upvote" on the post that helps you, as it can benefit other community members.