Hi @Anonymous ,

Thanks for reaching out.

Based on the error message, I don't think the issue is related to the self-hosted integration runtime (SHIR). I believe either your source or your sink is Azure Storage. If so, Azure Storage may throttle your application as it approaches the scalability limits. In some cases, it may be unable to handle a request because of a transient condition. In both cases, the service may return a 503 (Server Busy) or 500 (Timeout) error.

The client application (ADF in your case) should typically retry the operation that causes one of these errors. However, if Azure Storage is throttling your application because it is exceeding scalability targets, or if the service was unable to serve the request for some other reason, aggressive retries may make the problem worse. An exponential backoff retry policy is recommended, and the client libraries default to this behavior. For example, your application may retry after 2 seconds, then 4 seconds, then 10 seconds, then 30 seconds, and then give up completely. In this way, your application significantly reduces its load on the service instead of adding to the load that causes throttling.
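To make the backoff idea concrete, here is a minimal Python sketch. It is only an illustration, not how ADF or the Azure Storage client libraries implement retries. The names `TransientStorageError`, `backoff_delays`, and `call_with_backoff` are hypothetical, and the doubling schedule with a 30-second cap is an assumption that differs slightly from the 2, 4, 10, 30 second example above.

```python
import random
import time


class TransientStorageError(Exception):
    """Hypothetical stand-in for a 503 (Server Busy) or 500 (Timeout) response."""


def backoff_delays(base=2.0, factor=2.0, cap=30.0, attempts=4):
    """Return the wait in seconds before each retry, growing by `factor` up to `cap`."""
    return [min(cap, base * factor ** i) for i in range(attempts)]


def call_with_backoff(operation, delays, sleep=time.sleep, jitter=0.0):
    """Run `operation`; on a transient error, wait and retry, then give up."""
    for delay in delays:
        try:
            return operation()
        except TransientStorageError:
            # Wait longer after each failure so the service gets room to recover.
            # Optional random jitter keeps many clients from retrying in lockstep.
            sleep(delay + random.uniform(0, jitter))
    # Final attempt: if this also fails, the error propagates to the caller.
    return operation()
```

With the default arguments, `backoff_delays()` yields waits of 2, 4, 8, and 16 seconds, so the operation is attempted at most five times before the error is surfaced.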

For more information, please refer to: https://learn.microsoft.com/azure/storage/blobs/storage-performance-checklist#timeout-and-server-busy-errors

Here is the performance and scalability checklist for Blob storage: https://learn.microsoft.com/azure/storage/blobs/storage-performance-checklist#capacity-and-transaction-targets. This document lists design considerations and recommendations for customers to apply when designing their applications, which in your case means the ADF pipeline design.

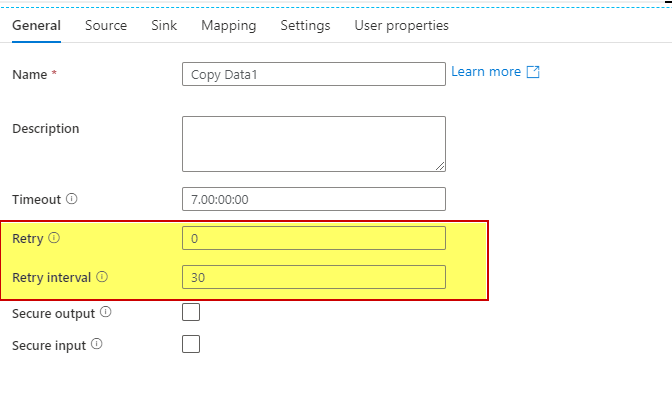

I recommend setting the retry options (retry count and retry interval) on your copy activity.

If the issue persists after you configure the retry options, please share the failed pipeline run ID and the failed activity run ID for further analysis.

Hope this info helps.

We look forward to your response.

----------

Thank you

Please consider clicking "Accept Answer" and "Upvote" on the post that helps you, as it can be beneficial to other community members.