If you want to store the data in the mounted ADLS Gen2 container, just give Spark the mount path directly, like `/mnt/mountname/whateverfolder`:

```python
someDF.write.mode("overwrite").parquet("/mnt/Datalake3/feature/test1.parquet")
```

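I removed `/dbfs/` from the path above. That prefix is meant for local file APIs, while Spark resolves paths against DBFS on its own. Try it and see if it works.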

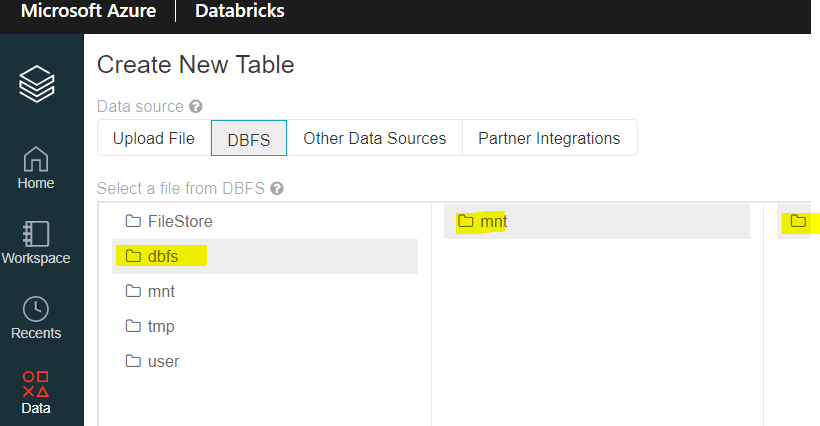
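
If you have paths that were written for local file APIs (starting with `/dbfs/`), a tiny helper can convert them before handing them to Spark. This is just a sketch: `to_spark_path` is a hypothetical helper, not part of any Databricks library, and it only strips the `/dbfs` prefix, leaving every other path untouched.

```python
def to_spark_path(path: str) -> str:
    """Turn a local-file (FUSE) DBFS path into a path for Spark APIs.

    Hypothetical helper, not part of any Databricks library.
    "/dbfs/mnt/x" -> "/mnt/x"; other paths are returned unchanged.
    """
    fuse_prefix = "/dbfs/"
    if path.startswith(fuse_prefix):
        # Drop "/dbfs" but keep the slash that starts the DBFS path.
        return path[len(fuse_prefix) - 1:]
    return path


# Usage in a notebook (someDF as in the question):
# someDF.write.mode("overwrite").parquet(
#     to_spark_path("/dbfs/mnt/Datalake3/feature/test1.parquet"))
print(to_spark_path("/dbfs/mnt/Datalake3/feature/test1.parquet"))
# prints /mnt/Datalake3/feature/test1.parquet
```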

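With your current code (the path starting with `/dbfs/`), the data should be getting stored in the internal Azure Databricks (DBFS root) storage rather than in ADLS Gen2, because Spark reads that path as `dbfs:/dbfs/mnt/Datalake3/feature/test1.parquet`, i.e. a folder named `dbfs` in the DBFS root.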
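
To see why the two paths end up in different places, here is a rough pure-Python sketch of how a scheme-less path gets qualified. It assumes DBFS is the default filesystem (so `/x` means `dbfs:/x`) and that the mount lives under `dbfs:/mnt/`; `resolve_spark_uri` and `is_under_mount` are hypothetical helpers for illustration only, not Databricks APIs.

```python
from urllib.parse import urlparse


def resolve_spark_uri(path: str, default_scheme: str = "dbfs") -> str:
    """Roughly mimic how a scheme-less path is qualified on Databricks.

    Assumption: the default filesystem is DBFS, so "/x" means "dbfs:/x".
    """
    if urlparse(path).scheme:
        # Already qualified, e.g. "dbfs:/mnt/..." or "abfss://...".
        return path
    return f"{default_scheme}:{path}"


def is_under_mount(uri: str, mount_root: str = "dbfs:/mnt/") -> bool:
    """True if the URI points below the (assumed) /mnt mount root."""
    return uri.startswith(mount_root)


for p in ["/dbfs/mnt/Datalake3/feature/test1.parquet",
          "/mnt/Datalake3/feature/test1.parquet"]:
    uri = resolve_spark_uri(p)
    print(uri, "-> ADLS mount" if is_under_mount(uri) else "-> DBFS root")
```

The first path resolves to `dbfs:/dbfs/mnt/...`, which is not under the mount, so the files land in the workspace's internal storage.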

----------

-- Vaibhav