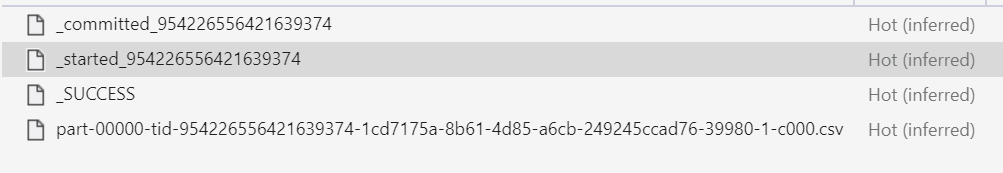

Try using an event-based trigger (storage event trigger) in ADF. Configure it so that it fires as soon as a new blob whose name ends with .csv is created; the pipeline can then pick up that file to copy or process it.

See point 6 in the doc below:

https://learn.microsoft.com/en-us/azure/data-factory/how-to-create-event-trigger
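
For reference, here is a rough sketch of what such a trigger definition could look like, built as a Python dict and printed as JSON you could adapt in the trigger's code view. The container name, folder, pipeline name, storage account resource ID, and the pipeline parameters `sourceFile` / `sourceFolder` are all placeholders I made up for illustration; replace them with your own, and check the result against the doc above before relying on it.

```python
import json


def build_csv_blob_trigger(storage_account_id, container, pipeline_name, folder=""):
    """Build a storage event trigger definition that fires on new .csv blobs.

    All argument values are placeholders supplied by the caller; nothing here
    talks to Azure, it only assembles the JSON structure.
    """
    return {
        "name": "CsvCreatedTrigger",  # hypothetical trigger name
        "properties": {
            "type": "BlobEventsTrigger",
            "typeProperties": {
                # Path filter format: /<container>/blobs/<optional folder>/
                "blobPathBeginsWith": f"/{container}/blobs/{folder}",
                # Only react to blobs whose name ends with .csv
                "blobPathEndsWith": ".csv",
                "ignoreEmptyBlobs": True,
                # Resource ID of the storage account being watched
                "scope": storage_account_id,
                "events": ["Microsoft.Storage.BlobCreated"],
            },
            "pipelines": [
                {
                    "pipelineReference": {
                        "referenceName": pipeline_name,
                        "type": "PipelineReference",
                    },
                    # Pass the triggering file to (hypothetical) pipeline parameters
                    "parameters": {
                        "sourceFile": "@triggerBody().fileName",
                        "sourceFolder": "@triggerBody().folderPath",
                    },
                }
            ],
        },
    }


if __name__ == "__main__":
    trigger = build_csv_blob_trigger(
        storage_account_id="<storage-account-resource-id>",  # placeholder
        container="input",            # placeholder container
        pipeline_name="CopyCsvPipeline",  # placeholder pipeline
        folder="incoming/",           # placeholder folder
    )
    print(json.dumps(trigger, indent=2))
```

Inside the pipeline, you would then use those two parameters in the source dataset so the copy activity reads exactly the file that raised the event.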

----------

Please don't forget to Accept Answer and Up-vote if the response helped -- Vaibhav