Hello @sakuraime,

Connecting to Azure Synapse using OAuth 2.0 with a service principal for authentication is available from Databricks Runtime 8.1 and above.

Below are the steps to connect to Azure Synapse using OAuth 2.0 with a service principal for authentication:

Step 1: Grant the service principal permissions on Azure Synapse Analytics and the storage account.

Azure Synapse Analytics: Go to the workspace => Under Settings => SQL Active Directory admin => Click on Set admin => Add the registered application => Click on Save.

Azure Storage temp account: Go to the storage account => Access Control (IAM) => Add role assignment => Select role: Storage Blob Data Contributor => Select: the registered application => Click on Save.

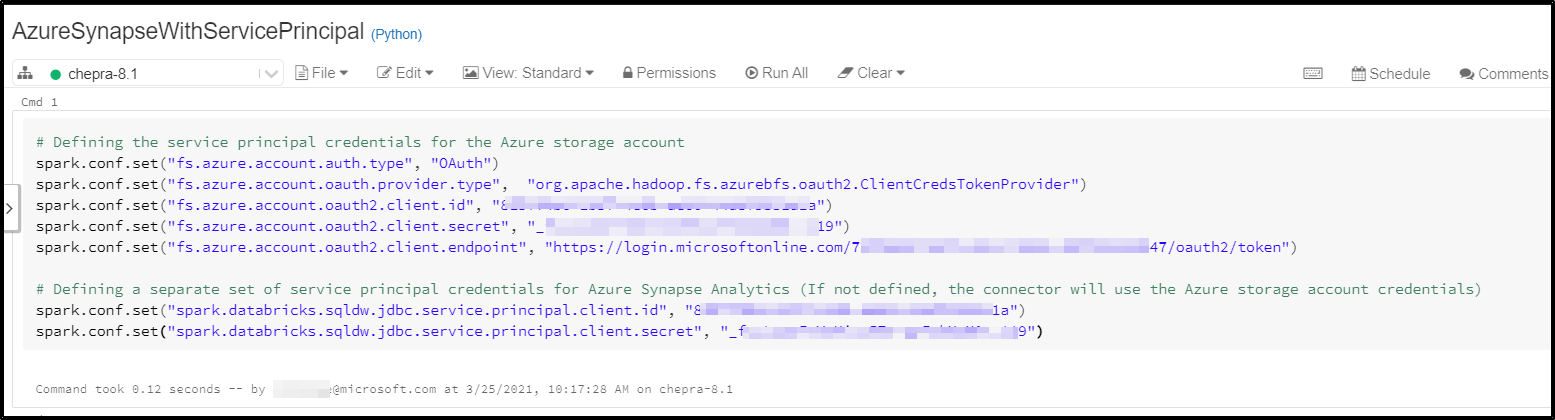

Step 2: Define the service principal credentials for Azure Synapse Analytics and the storage account.

Python Code:

    # Defining the service principal credentials for the Azure storage account
    spark.conf.set("fs.azure.account.auth.type", "OAuth")
    spark.conf.set("fs.azure.account.oauth.provider.type", "org.apache.hadoop.fs.azurebfs.oauth2.ClientCredsTokenProvider")
    spark.conf.set("fs.azure.account.oauth2.client.id", "<application-id>")
    spark.conf.set("fs.azure.account.oauth2.client.secret", "<service-credential>")
    spark.conf.set("fs.azure.account.oauth2.client.endpoint", "https://login.microsoftonline.com/<directory-id>/oauth2/token")

    # Defining a separate set of service principal credentials for Azure Synapse Analytics
    # (if not defined, the connector will use the Azure storage account credentials)
    spark.conf.set("spark.databricks.sqldw.jdbc.service.principal.client.id", "<application-id>")
    spark.conf.set("spark.databricks.sqldw.jdbc.service.principal.client.secret", "<service-credential>")

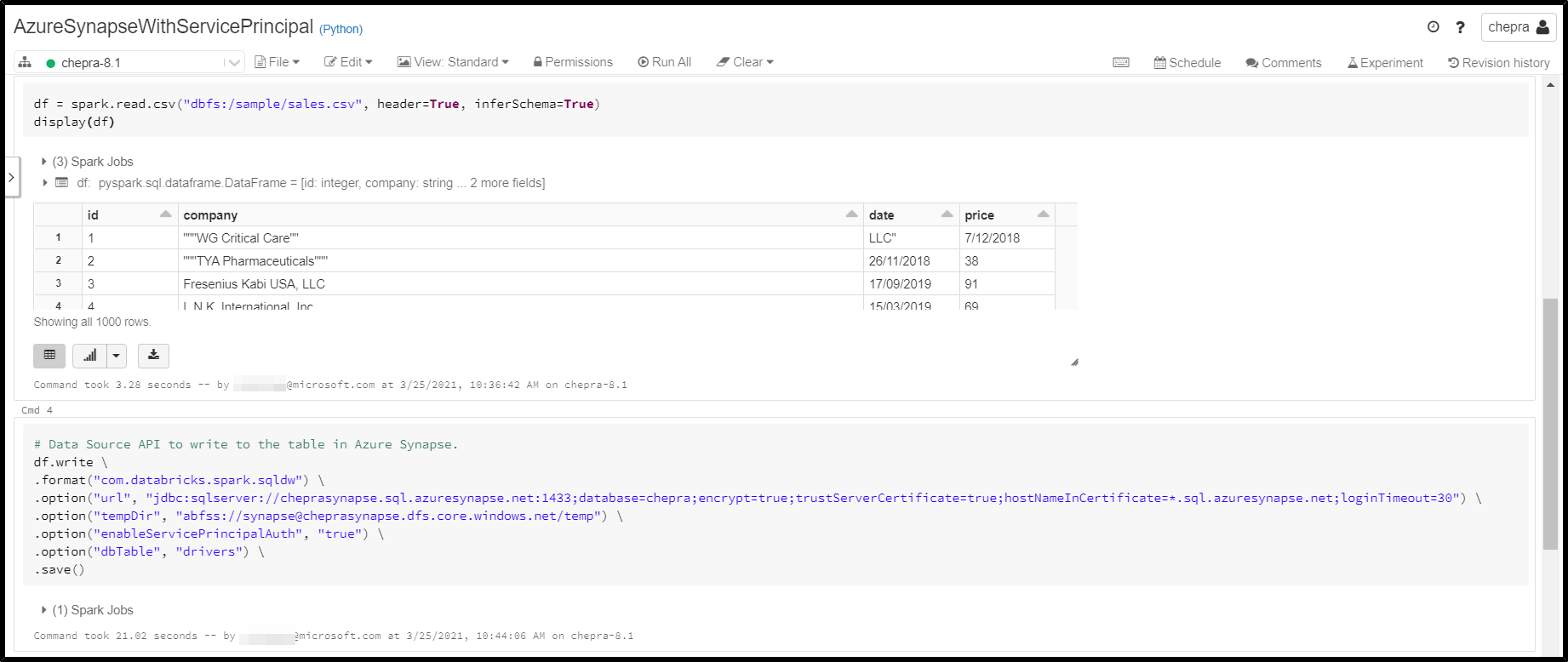
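If you would rather not paste the storage settings one by one, they can be collected in one place. The following is only a minimal sketch: `storage_oauth_conf` and `apply_conf` are hypothetical helper names (not part of the connector), and the keys and endpoint format are copied from the Step 2 snippet. The secret scope and key names in the usage comment are placeholders that you would need to create yourself.

```python
def storage_oauth_conf(application_id: str, client_secret: str, directory_id: str) -> dict:
    """Return the storage account OAuth settings from Step 2 as a plain dict (hypothetical helper)."""
    return {
        "fs.azure.account.auth.type": "OAuth",
        "fs.azure.account.oauth.provider.type":
            "org.apache.hadoop.fs.azurebfs.oauth2.ClientCredsTokenProvider",
        "fs.azure.account.oauth2.client.id": application_id,
        "fs.azure.account.oauth2.client.secret": client_secret,
        "fs.azure.account.oauth2.client.endpoint":
            f"https://login.microsoftonline.com/{directory_id}/oauth2/token",
    }


def apply_conf(spark, conf: dict) -> None:
    """Apply every setting to the session through spark.conf.set."""
    for key, value in conf.items():
        spark.conf.set(key, value)


# Usage inside a Databricks notebook (scope and key names are placeholders):
# secret = dbutils.secrets.get(scope="<scope-name>", key="<key-name>")
# apply_conf(spark, storage_oauth_conf("<application-id>", secret, "<directory-id>"))
```

Reading the secret from a secret scope keeps the credential out of the notebook source; the settings applied are the same as in the snippet above.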

Step 3: Set the enableServicePrincipalAuth option to true in the connection configuration.

Sample JDBC URL, passed as the "url" option: "jdbc:sqlserver://<workspacename>.sql.azuresynapse.net:1433;database=<databasename>;encrypt=true;trustServerCertificate=true;hostNameInCertificate=*.sql.azuresynapse.net;loginTimeout=30"
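To make the shape of that URL easier to see, here is a small sketch that assembles it from the workspace and database names. `synapse_jdbc_url` is a hypothetical helper, not something the connector provides, and the property values are simply the ones from the sample URL above; adjust them to your own security requirements.

```python
def synapse_jdbc_url(workspace: str, database: str, login_timeout: int = 30) -> str:
    """Build a JDBC URL in the same shape as the sample URL (hypothetical helper)."""
    host = f"{workspace}.sql.azuresynapse.net"
    # Connection properties, in the same order as in the sample URL.
    properties = {
        "database": database,
        "encrypt": "true",
        "trustServerCertificate": "true",
        "hostNameInCertificate": "*.sql.azuresynapse.net",
        "loginTimeout": str(login_timeout),
    }
    suffix = ";".join(f"{name}={value}" for name, value in properties.items())
    return f"jdbc:sqlserver://{host}:1433;{suffix}"


# "myworkspace" and "mydb" are made-up names used only for illustration.
url = synapse_jdbc_url("myworkspace", "mydb")
print(url)
```

The resulting string can then be passed as the value of the "url" option.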

Load data from an Azure Synapse table.

Python Code:

df = spark.read \

.format("com.databricks.spark.sqldw") \

.option("url", "jdbc:sqlserver://<the-rest-of-the-connection-string>") \

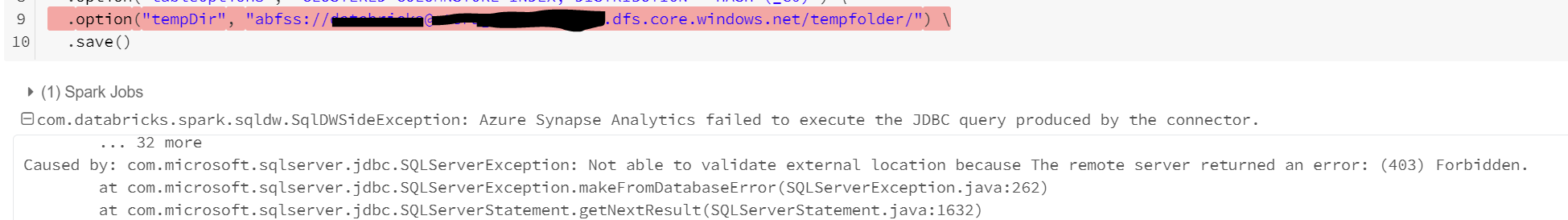

.option("tempDir", "abfss://<your-container-name>@<your-storage-account-name>.dfs.core.windows.net/<your-directory-name>") \

.option("forwardSparkAzureStorageCredentials", "true") \

.option("dbTable", "<your-table-name>") \

.load()

Write data to the table in Azure Synapse.

Python Code:

    # Write the DataFrame to a table in Azure Synapse, authenticating with the service principal
    df.write \
      .format("com.databricks.spark.sqldw") \
      .option("url", "jdbc:sqlserver://<the-rest-of-the-connection-string>") \
      .option("forwardSparkAzureStorageCredentials", "true") \
      .option("enableServicePrincipalAuth", "true") \
      .option("dbTable", "<your-table-name>") \
      .option("tempDir", "abfss://<your-container-name>@<your-storage-account-name>.dfs.core.windows.net/<your-directory-name>") \
      .save()
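Since the read and the write share the same connection options, one way to keep them in sync is to build the options once and pass them to both calls. This is just a sketch under assumptions: `synapse_options`, `read_table`, and `write_table` are hypothetical helper names, the option names come from Step 3 and the read and write snippets above, and the value "true" for enableServicePrincipalAuth is assumed from Step 3.

```python
def synapse_options(url: str, temp_dir: str, table: str) -> dict:
    """Collect the connector options shared by the read and the write (hypothetical helper)."""
    return {
        "url": url,
        "tempDir": temp_dir,
        "forwardSparkAzureStorageCredentials": "true",
        "enableServicePrincipalAuth": "true",  # the option named in Step 3
        "dbTable": table,
    }


def read_table(spark, options: dict):
    """Load the table named in options["dbTable"] into a DataFrame."""
    return spark.read.format("com.databricks.spark.sqldw").options(**options).load()


def write_table(df, options: dict) -> None:
    """Write df to the table named in options["dbTable"]."""
    df.write.format("com.databricks.spark.sqldw").options(**options).save()


# Usage inside a notebook (placeholders as in the snippets above):
# opts = synapse_options(
#     "jdbc:sqlserver://<the-rest-of-the-connection-string>",
#     "abfss://<your-container-name>@<your-storage-account-name>.dfs.core.windows.net/<your-directory-name>",
#     "<your-table-name>",
# )
# df = read_table(spark, opts)
# write_table(df, opts)
```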

Hope this helps. Do let us know if you have any further queries.
