Hello @sakuraime,

How to find the URL?

In the Azure portal, go to your Dedicated SQL Pool => under Settings, select Connection strings => select JDBC (SQL authentication) and copy the string shown there.

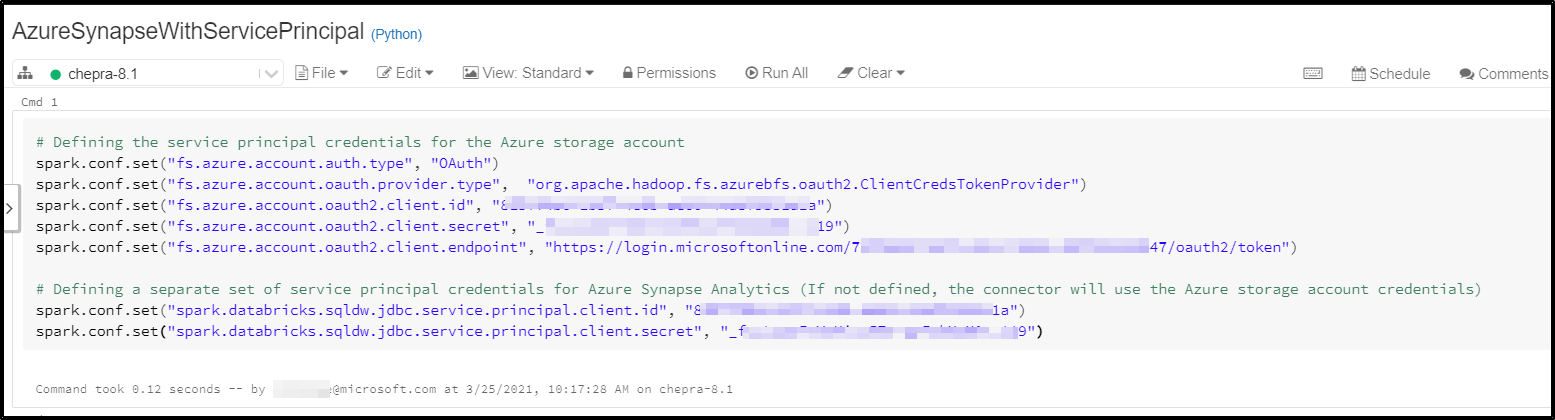
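
For reference, the copied string is a semicolon-separated list of properties after the `jdbc:sqlserver://` prefix. The sketch below only illustrates that shape so you can recognize the pieces; `build_jdbc_url` and its parameters are hypothetical names, and the property list is an assumption about a typical string. The value copied from the portal remains the authoritative one.

```python
def build_jdbc_url(host, database, user, password, port=1433):
    """Assemble a SQL Server style JDBC URL from its parts (illustrative only)."""
    parts = [
        f"jdbc:sqlserver://{host}:{port}",
        f"database={database}",
        f"user={user}",
        f"password={password}",
        # Assumed typical connection properties; the portal string may differ.
        "encrypt=true",
        "trustServerCertificate=false",
        "loginTimeout=30",
    ]
    # Properties are separated by semicolons, with a trailing semicolon.
    return ";".join(parts) + ";"
```

The result is what goes into the `url` option of the connector. In a real notebook, prefer reading the password from a secret store rather than hard-coding it.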

Sample code:

    # Set up the Blob storage account access key in the notebook session conf.
    spark.conf.set(
        "fs.azure.account.key.<your-storage-account-name>.blob.core.windows.net",
        "<your-storage-account-access-key>")

    # Get some data from an Azure Synapse table.
    df = spark.read \
        .format("com.databricks.spark.sqldw") \
        .option("url", "jdbc:sqlserver://<the-rest-of-the-connection-string>") \
        .option("tempDir", "wasbs://<your-container-name>@<your-storage-account-name>.blob.core.windows.net/<your-directory-name>") \
        .option("forwardSparkAzureStorageCredentials", "true") \
        .option("dbTable", "<your-table-name>") \
        .load()

    # Load data from an Azure Synapse query.
    df = spark.read \
        .format("com.databricks.spark.sqldw") \
        .option("url", "jdbc:sqlserver://<the-rest-of-the-connection-string>") \
        .option("tempDir", "wasbs://<your-container-name>@<your-storage-account-name>.blob.core.windows.net/<your-directory-name>") \
        .option("forwardSparkAzureStorageCredentials", "true") \
        .option("query", "select x, count(*) as cnt from table group by x") \
        .load()

    # Apply some transformations to the data, then use the
    # Data Source API to write the data back to another table in Azure Synapse.
    df.write \
        .format("com.databricks.spark.sqldw") \
        .option("url", "jdbc:sqlserver://<the-rest-of-the-connection-string>") \
        .option("forwardSparkAzureStorageCredentials", "true") \
        .option("dbTable", "<your-table-name>") \
        .option("tempDir", "wasbs://<your-container-name>@<your-storage-account-name>.blob.core.windows.net/<your-directory-name>") \
        .save()
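
The read and write calls above repeat the same `url`, `tempDir`, and `forwardSparkAzureStorageCredentials` options. One possible way to keep them in a single place is sketched below. The helper names (`temp_dir`, `synapse_options`, `read_table`, `write_table`) are hypothetical and not part of the connector; they only wrap the same `format`/`option` calls, using the standard `options(**kwargs)` method of the Spark reader and writer.

```python
SYNAPSE_FORMAT = "com.databricks.spark.sqldw"

def temp_dir(container, account, directory):
    """Build the wasbs:// staging path used for the tempDir option."""
    return f"wasbs://{container}@{account}.blob.core.windows.net/{directory}"

def synapse_options(jdbc_url, container, account, directory):
    """Collect the options shared by every read and write."""
    return {
        "url": jdbc_url,
        "tempDir": temp_dir(container, account, directory),
        "forwardSparkAzureStorageCredentials": "true",
    }

def read_table(spark, options, table_name):
    """Read a whole Azure Synapse table into a DataFrame."""
    return (spark.read
            .format(SYNAPSE_FORMAT)
            .options(**options)
            .option("dbTable", table_name)
            .load())

def write_table(df, options, table_name):
    """Write a DataFrame back to an Azure Synapse table."""
    (df.write
       .format(SYNAPSE_FORMAT)
       .options(**options)
       .option("dbTable", table_name)
       .save())
```

In a notebook this could be used as `opts = synapse_options("jdbc:sqlserver://<the-rest-of-the-connection-string>", "<your-container-name>", "<your-storage-account-name>", "<your-directory-name>")` followed by `df = read_table(spark, opts, "<your-table-name>")`.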

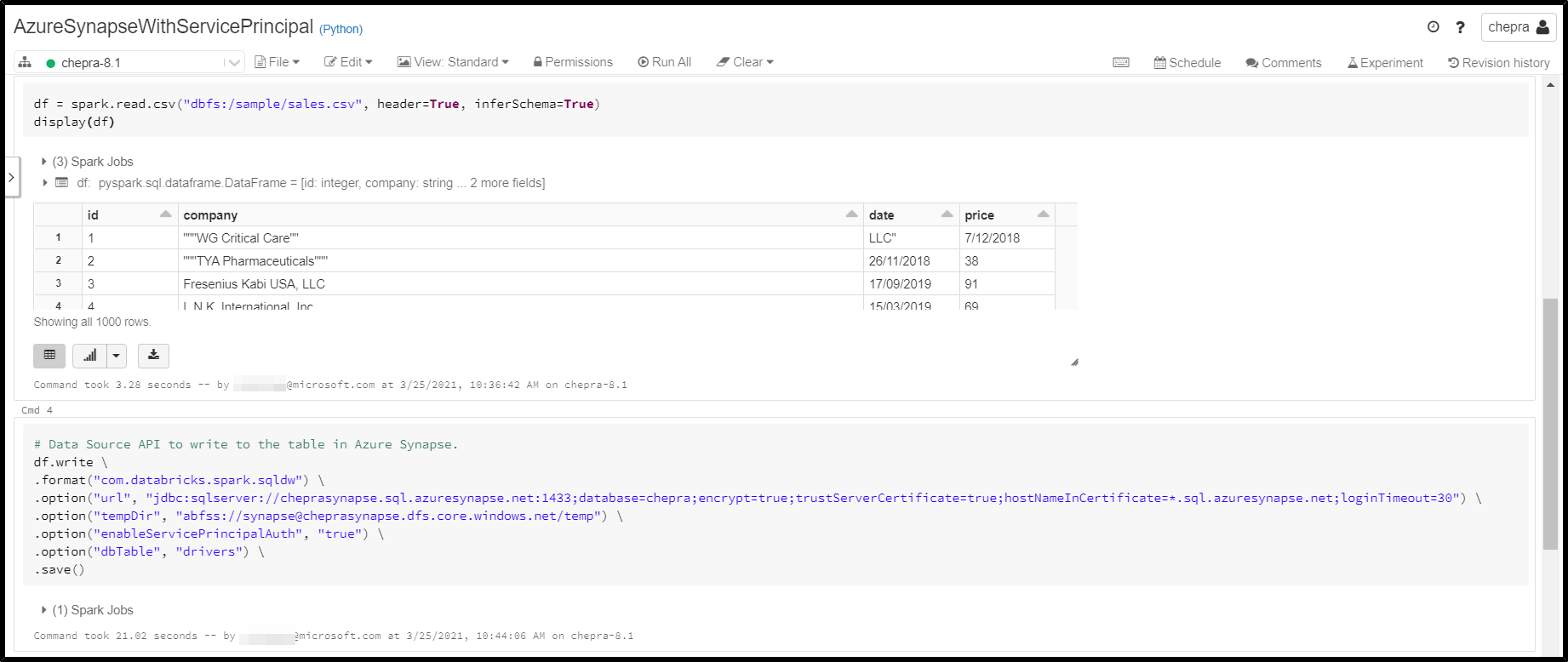

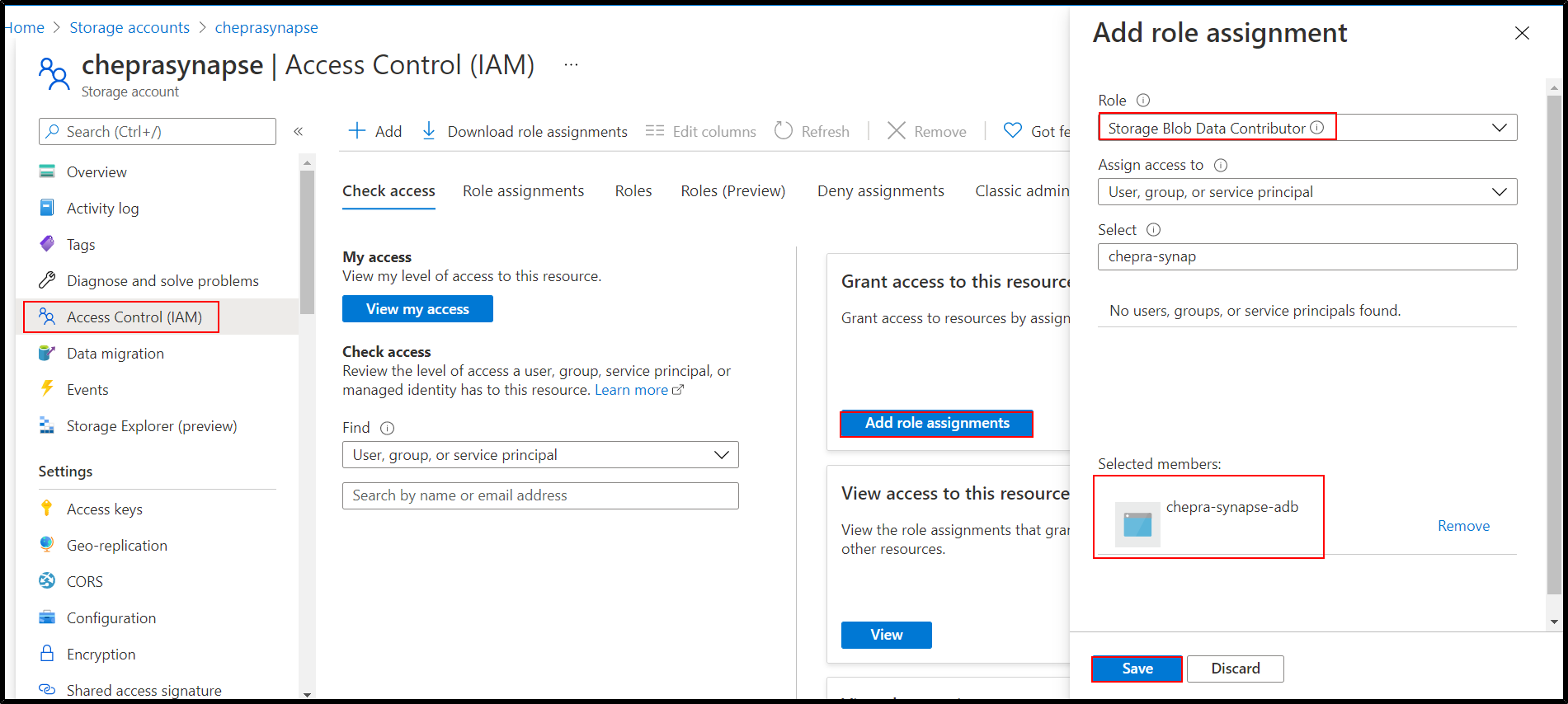

Tested from my end:

For more details, refer to "Azure Databricks - Azure Synapse Analytics" and "Write Data from Azure Databricks to Azure Dedicated SQL Pool (formerly SQL DW) using ADLS Gen 2".

Hope this helps. Do let us know if you have any further queries.

------------

Please don’t forget to Accept Answer and Up-Vote wherever the information provided helps you; this can be beneficial to other community members.