Hi there,

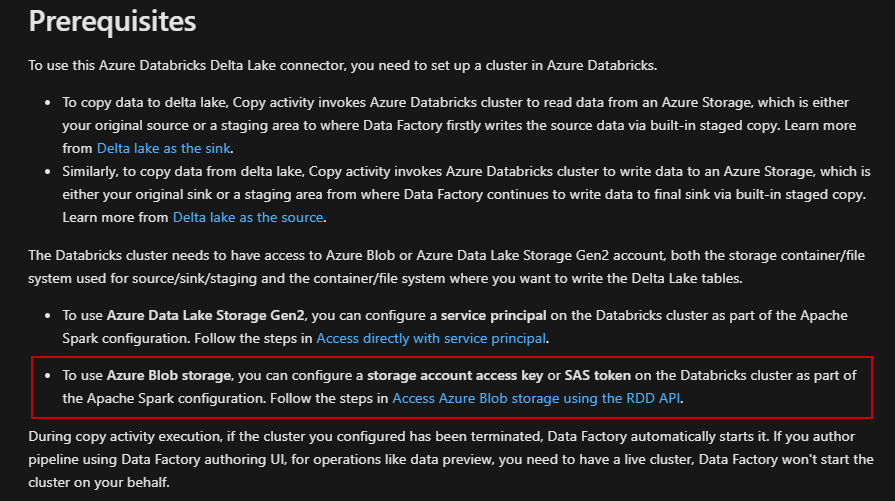

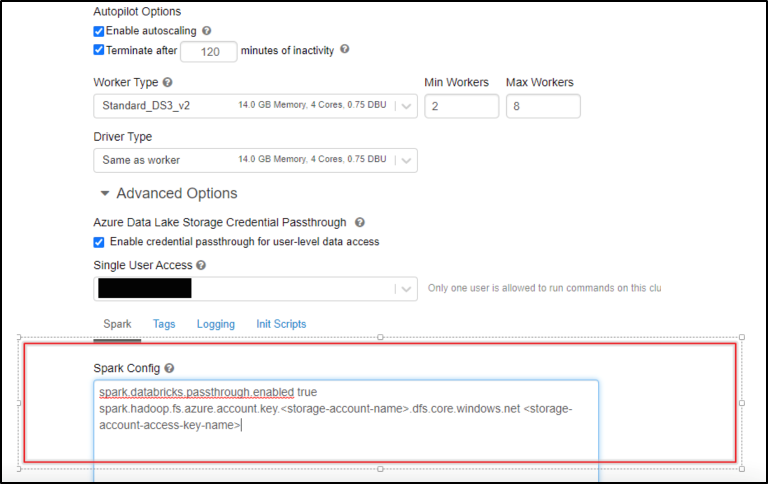

As discussed offline, adding the storage access key to the Databricks cluster's Apache Spark configuration resolved the issue. This requirement is called out in the Prerequisites section of this doc: Copy data to and from Azure Databricks Delta Lake by using Azure Data Factory
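For anyone unsure what the Spark configuration entry looks like, here is a minimal sketch that builds the line you would paste into the cluster's Spark config box. It assumes the commonly used `fs.azure.account.key.<account>.<endpoint>.core.windows.net` property with the `spark.hadoop.` prefix. The helper name, the storage account name, and the secret scope/key names are hypothetical placeholders, so please verify the exact property against the doc above for your storage type (Blob vs. ADLS Gen2).

```python
def spark_conf_line(storage_account: str, secret_ref: str, endpoint: str = "dfs") -> str:
    """Build one 'key value' line for the cluster's Spark config.

    endpoint: "blob" for Azure Blob storage, "dfs" for ADLS Gen2 (assumed mapping).
    secret_ref: the access key, or preferably a Databricks secret reference.
    """
    if endpoint not in ("blob", "dfs"):
        raise ValueError("endpoint must be 'blob' or 'dfs'")
    # Assumed property name; confirm it in the Prerequisites section of the doc.
    key = f"spark.hadoop.fs.azure.account.key.{storage_account}.{endpoint}.core.windows.net"
    return f"{key} {secret_ref}"


# Hypothetical account and secret names, for illustration only.
print(spark_conf_line("mystorageacct", "{{secrets/my-scope/storage-key}}", "blob"))
```

Referencing a secret scope with the `{{secrets/<scope>/<key>}}` syntax, rather than pasting the raw access key, keeps the key out of the cluster configuration in plain text.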

Hope this helps anyone who experiences a similar issue.

----------

Please don’t forget to Accept Answer and Up-Vote wherever the information provided helps you, as this can be beneficial to other community members.