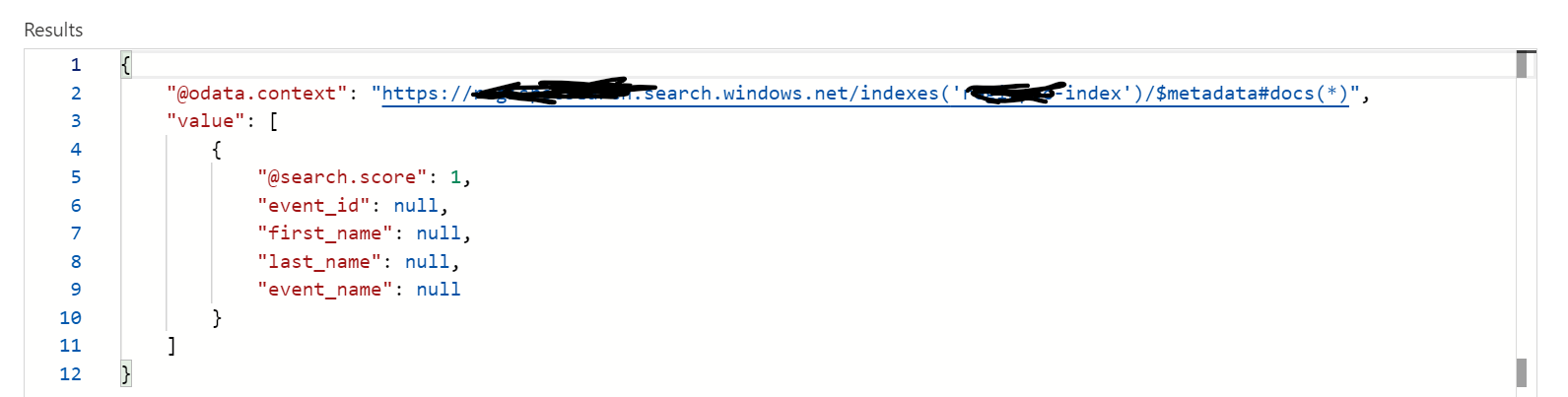

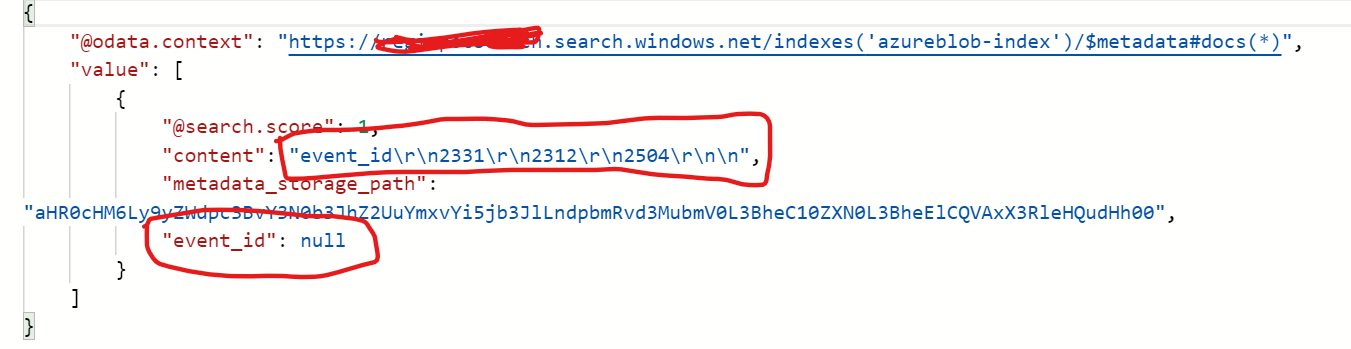

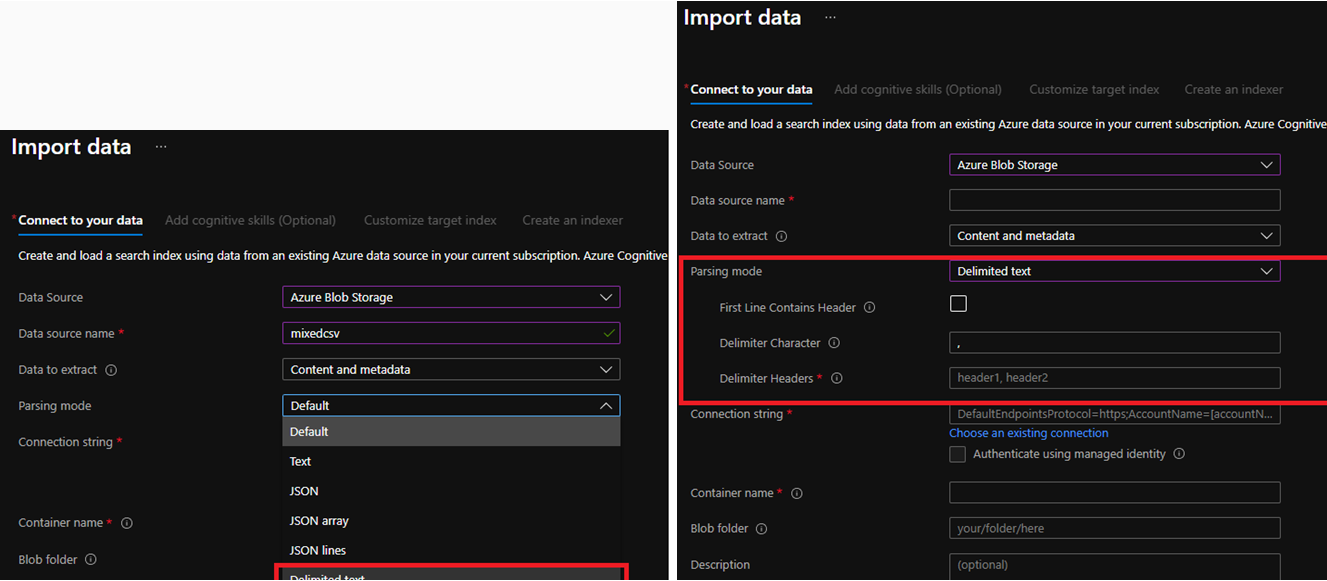

Thanks for the reply. The default parsing mode for Azure Search blob indexers just extracts all of the textual data in the file into the “content” field, as you saw in your second experiment. It sounds like you want to parse the Excel document so that each row is treated as a separate document (and indexed as a separate entry) and each column as a separate field. If that’s the case, consider changing the indexer configuration to read the document’s data as delimited records rather than as a single blob of text.

The indexer definition might look something like this:

    {
      "name" : "my-csv-indexer",
      ...
      "parameters" : { "configuration" : { "parsingMode" : "delimitedText", "firstLineContainsHeaders" : true, "delimitedTextDelimiter" : "," } }
    }

Be sure to set the delimiter to whatever character the files are saved with. A comma (CSV) is the most common.
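If you're not sure which delimiter the exported files use, a quick local check can help before you touch the indexer. The following is only a rough sketch in Python: `detect_delimiter` and `build_indexer_definition` are hypothetical helper names, the sample data is made up, and the definition deliberately leaves out the other fields an indexer needs (the part elided with `...` above). It only shows how the detected character would end up in `delimitedTextDelimiter`.

```python
import csv
import json


def detect_delimiter(sample: str, candidates: str = ",;\t|") -> str:
    """Guess the delimiter of a text sample using the standard library's csv.Sniffer."""
    dialect = csv.Sniffer().sniff(sample, delimiters=candidates)
    return dialect.delimiter


def build_indexer_definition(name: str, delimiter: str) -> dict:
    """Build only the parsing-related part of the indexer definition.

    Assumption: the remaining indexer fields are omitted here, just as
    they are elided in the snippet above.
    """
    return {
        "name": name,
        "parameters": {
            "configuration": {
                "parsingMode": "delimitedText",
                "firstLineContainsHeaders": True,
                "delimitedTextDelimiter": delimiter,
            }
        },
    }


# Made-up sample: a file that was saved with semicolons instead of commas.
sample = "id;name;price\n1;widget;9.99\n2;gadget;19.50\n"
definition = build_indexer_definition("my-csv-indexer", detect_delimiter(sample))
print(json.dumps(definition, indent=2))
```

In practice you would pass the first few lines of one of your real files as `sample`. The sniffer is a heuristic, so it's worth eyeballing the result.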

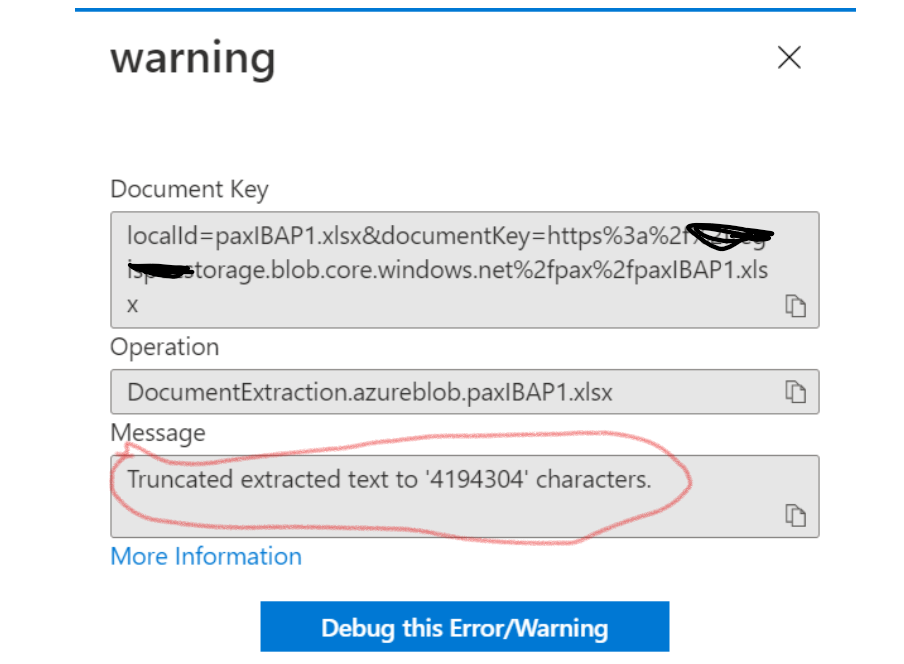

The truncation warning you saw is also significant: it may mean you’re not indexing all rows in the document. You may need to split the files into smaller chunks.
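If you do end up splitting the files, one possible approach is to cut each CSV into smaller pieces that each repeat the header row, so `firstLineContainsHeaders` still applies to every piece. This is a minimal sketch, not a tested pipeline: `split_csv` and `rows_per_chunk` are hypothetical names, and the right chunk size depends on your service's limits, which I'm not assuming here.

```python
import csv
import io
from typing import List


def _write_chunk(header: List[str], rows: List[List[str]], delimiter: str) -> str:
    """Serialize one chunk as delimited text, header first."""
    out = io.StringIO(newline="")
    writer = csv.writer(out, delimiter=delimiter, lineterminator="\n")
    writer.writerow(header)
    writer.writerows(rows)
    return out.getvalue()


def split_csv(text: str, rows_per_chunk: int, delimiter: str = ",") -> List[str]:
    """Split delimited text into chunks of at most rows_per_chunk data rows.

    Every chunk starts with a copy of the original header row.
    """
    if rows_per_chunk < 1:
        raise ValueError("rows_per_chunk must be at least 1")

    reader = csv.reader(io.StringIO(text, newline=""), delimiter=delimiter)
    try:
        header = next(reader)
    except StopIteration:
        return []  # empty input, nothing to split

    chunks: List[str] = []
    batch: List[List[str]] = []
    for row in reader:
        batch.append(row)
        if len(batch) == rows_per_chunk:
            chunks.append(_write_chunk(header, batch, delimiter))
            batch = []
    if batch:  # leftover rows that did not fill a whole chunk
        chunks.append(_write_chunk(header, batch, delimiter))
    return chunks


# Made-up example: three data rows split into chunks of two.
chunks = split_csv("id,name\n1,a\n2,b\n3,c\n", rows_per_chunk=2)
for i, chunk in enumerate(chunks):
    print(f"--- chunk {i} ---")
    print(chunk, end="")
```

Each chunk would then be uploaded as its own blob, so the indexer picks them up as separate files.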