Hello @Imran Mondal and welcome to Microsoft Q&A.

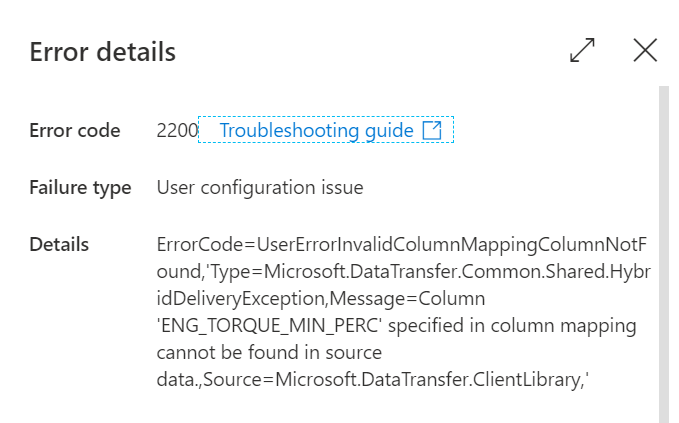

I noticed that your mapping is very straightforward: always X -> X, never X -> Y. When the source and sink column names are exactly the same, you can skip the mapping section and leave it empty. Any column included in the mapping becomes required, so a column that is missing from the source will cause a problem. When the mapping is left empty, Data Factory tries to auto-map by name, expecting X -> X.

In this way, by relying on auto-mapping, you can avoid the errors caused by missing columns.

However, the partition key and row key must always be present (if you are taking them from a column).
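To make the idea concrete, here is a minimal Python sketch of how the relevant part of the Copy activity definition could look with and without an explicit mapping. It assumes the sink is Azure Table storage (which the partition key and row key suggest). The helper name `build_type_properties` and the column names `Id`, `Name`, and `FullName` are hypothetical, chosen only for illustration. The helper omits the `translator` section when every column maps X -> X, and always sets the partition key and row key columns on the sink.

```python
import json


def build_type_properties(mapping, partition_key_column, row_key_column):
    """Sketch of the sink/translator part of a Copy activity's typeProperties.

    mapping: dict of source column name -> sink column name (hypothetical input).
    """
    props = {
        "sink": {
            "type": "AzureTableSink",
            # The partition key and row key columns must always be present.
            "azureTablePartitionKeyName": partition_key_column,
            "azureTableRowKeyName": row_key_column,
        }
    }

    # If every column maps to itself (X -> X), leave the mapping out
    # so that Data Factory auto-maps by name.
    is_identity = all(src == dst for src, dst in mapping.items())
    if not is_identity:
        props["translator"] = {
            "type": "TabularTranslator",
            "mappings": [
                {"source": {"name": src}, "sink": {"name": dst}}
                for src, dst in mapping.items()
            ],
        }
    return props


if __name__ == "__main__":
    # Identity mapping: no translator section is emitted.
    print(json.dumps(build_type_properties({"Id": "Id", "Name": "Name"}, "Id", "Name"), indent=2))
```

With an identity mapping the output contains only the `sink` section; a mapping such as `{"Id": "Id", "Name": "FullName"}` would add an explicit `translator`, and every column listed there would then be required.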

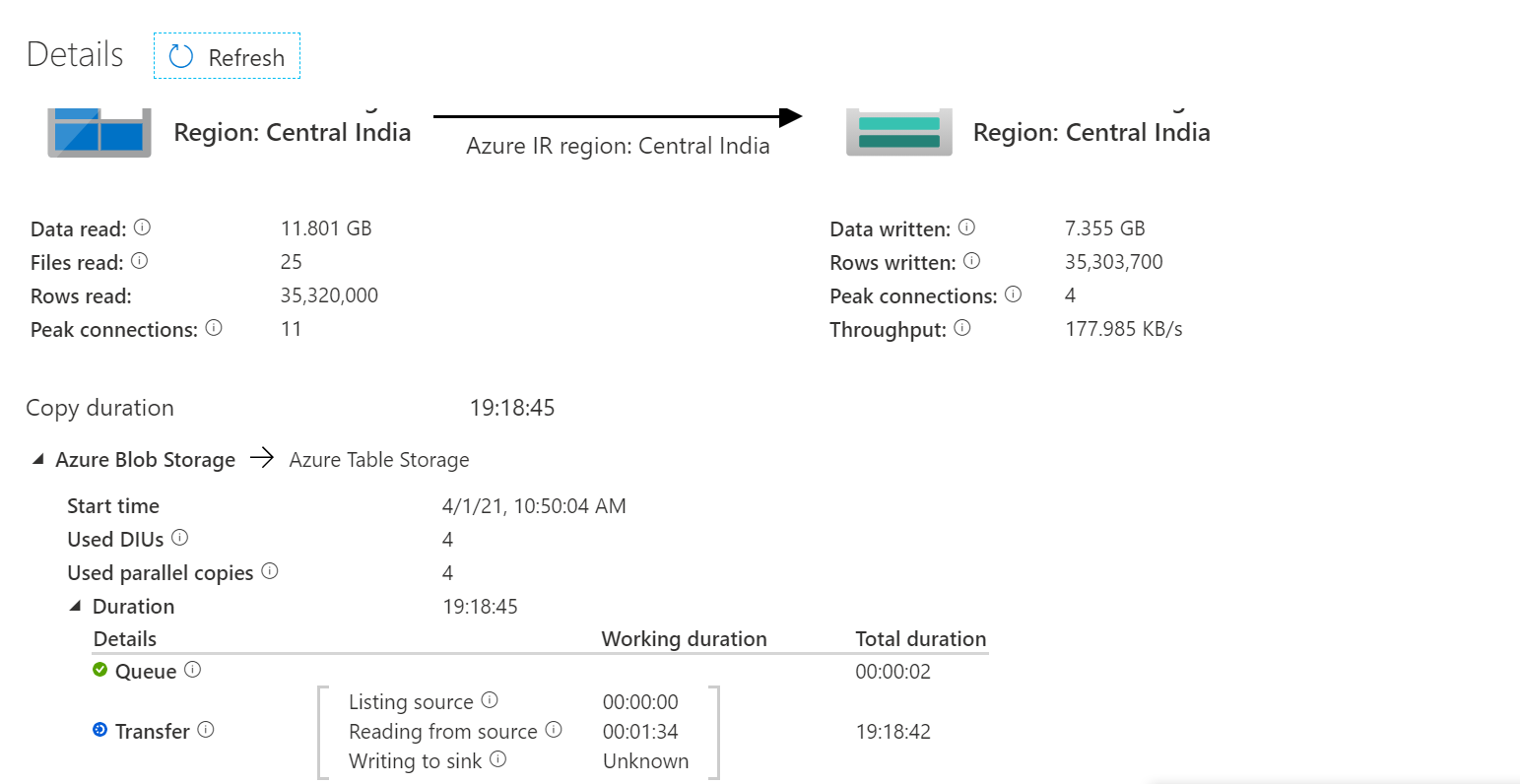

and should complete within 3-5 minutes, which is not happening currently; it is taking more than 2 hours to copy. How can I improve this? Is it possible?