Hi @ElDiablo ,

Welcome to Microsoft Q&A!

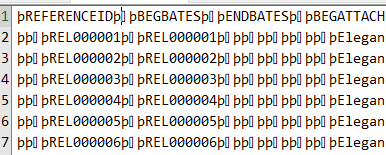

After checking, your DDL of AllTags provided is not working with your text provided.

It could be better to provide the complete DDL as below as a good starting:

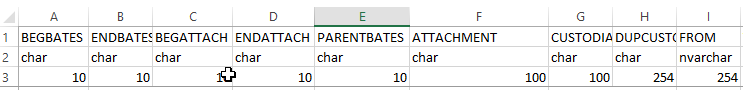

drop table if exists AllTags

CREATE TABLE dbo.AllTags (

REFERENCEID varchar (100) ,

[BEGBATES] varchar (100) ,

[ENDBATES] varchar (100) ,

[BEGATTACH] varchar (100) ,

[ENDATTACH] varchar (100) ,

[PARENTBATES] varchar (100) ,

[ATTACHMENT] varchar (1000),

CUSTODIAN varchar(100),

DUPCUSTODIAN varchar(100),

[FROM] varchar(100),

[TO] varchar(100),

CC varchar(100),

BCC varchar(100),

SUBJECT varchar(100),

DATESENT varchar(100),

TIMESENT varchar(100),

DATERCVD varchar(100),

TIMERCVD varchar(100),

FILEEXT varchar(100),

AUTHOR varchar(100),

CREATEDATE varchar(100),

CREATETIME varchar(100),

DATELASTMOD varchar(100),

TIMELASTMOD varchar(100),

FILENAME varchar(100),

DUPFILENAME varchar(100),

FILELENGTH varchar(100),

PGCOUNT varchar(100),

DOCTYPE varchar(100),

FAMDATE varchar(100),

FAMTIME varchar(100),

TIMEZONE varchar(100),

PATH varchar(max),

DUPPATH varchar(max),

DEDUPHASH varchar(100),

NATIVEPATH varchar(100),

OCRPATH varchar(100),

TITLE varchar(100),

COMPANY varchar(100),

DATEACCESSED varchar(100),

TIMEACCESSED varchar(100),

DATEPRINTED varchar(100),

TIMEPRINTED varchar(100),

CONVDATE varchar(100),

CONVTIME varchar(100),

ATTACHLIST varchar(100),

FAMILYRANGE varchar(100),

ALLCUSTODIANS varchar(100),

ALLFILENAMES varchar(100),

ALLFILEPATHS varchar(max),

HASHMD5 varchar(100),

HASHSHA varchar(100),

TAGS varchar(100),

DOCNOTE varchar(100),

PRIVNOTE varchar(100),

REDACTRSNS varchar(100),

DISCOID varchar(100),

MESSAGEID varchar(100),

THREADID varchar(100),

ATTACHCOUNT varchar(100),

HIDDENTYPE varchar(100),

METAREDACTED varchar(100),

INREPLYTOID varchar(100),

OBJECTHASH varchar(100),

REVISION varchar(100),

HEADER varchar(100),

IMPORTANCE varchar(100),

DELIVERYRECEIPT varchar(100),

READRECEIPT varchar(100),

SENSITIVITY varchar(100),

LASTAUTHOR varchar(100),

ESUBJECT varchar(100),

DATEAPPTSTART varchar(100),

DATEAPPTEND varchar(100),

CALBEGDATE varchar(100),

CALENDDATE varchar(100),

CALBEGTIME varchar(100),

CALENDTIME varchar(100),

CALENDUR varchar(100),

RECORDTYPE varchar(100),

REVISIONNUMBER varchar(100),

Exception varchar(100),

ExceptionDetails varchar(100),

TextLimitExceeded varchar(100)

)

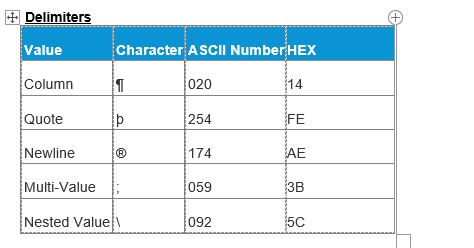

If your version is SQL Server 2017 (14.x) CTP 1.1 and later, you could have a try with below:

BULK INSERT AllTags

FROM 'C:\Data\Swap Drive\REL000001-REL296747\VOL0002.DAT'

WITH (

FIRSTROW = 2 ,

DATAFILETYPE ='char',

FIELDTERMINATOR = '0x14',

ROWTERMINATOR = '\n',

BATCHSIZE=250000 ,

CODEPAGE=65001,

MAXERRORS= 2,

FIELDQUOTE=N'þ' , ---adding this row

KEEPNULLS

);

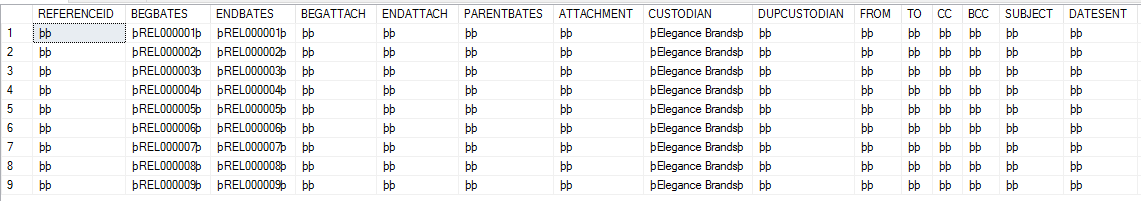

select * from AllTags

Below is part of the output but is a good starting. Then you could try to deal with the data in TSQL side, like using REPLACE function.

Best regards

Melissa

If the answer is helpful, please click "Accept Answer" and upvote it.

Note: Please follow the steps in our documentation to enable e-mail notifications if you want to receive the related email notification for this thread.