I was able to resolve this issue. Two of the many hundreds of files in the source were corrupted; the rest were fine. Removing the two corrupted files allowed the data flow to process and complete successfully again.
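For anyone who needs to track down which files are the bad ones: the stack trace fails in `readParquetFootersInParallel`, so a quick footer check may narrow things down. Below is a minimal sketch, assuming the source files are Parquet and that a copy of the source folder is available on a local or mounted path. The function names and the `*.parquet` pattern are my own, not anything from Synapse. It only checks the `PAR1` magic bytes and the footer length, so it will catch truncated or empty files but not every kind of corruption (and Parquet files with an encrypted footer would be flagged incorrectly).

```python
import struct
from pathlib import Path

MAGIC = b"PAR1"  # unencrypted Parquet files begin and end with these 4 bytes


def looks_like_parquet(path):
    """Cheap structural check: magic bytes at both ends and a plausible footer length."""
    size = path.stat().st_size
    # Smallest layout: 4-byte magic + footer + 4-byte footer length + 4-byte magic
    if size < 12:
        return False
    with path.open("rb") as f:
        if f.read(4) != MAGIC:
            return False
        f.seek(-8, 2)  # last 8 bytes: footer length (little-endian uint32) + magic
        tail = f.read(8)
    if tail[4:] != MAGIC:
        return False
    (footer_len,) = struct.unpack("<I", tail[:4])
    # The footer must fit between the leading magic and the trailing 8 bytes
    return 0 < footer_len <= size - 12


def find_suspect_files(folder):
    """Return every *.parquet file under `folder` that fails the check above."""
    return sorted(p for p in Path(folder).rglob("*.parquet") if not looks_like_parquet(p))
```

Calling `find_suspect_files("raw_transaction_copy")` (a hypothetical folder name) returns the paths worth inspecting or removing. A file that passes this check can still be unreadable, so treat the result as a first filter only.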

Job failed due to reason: at Source... ...Job aborted due to stage failure

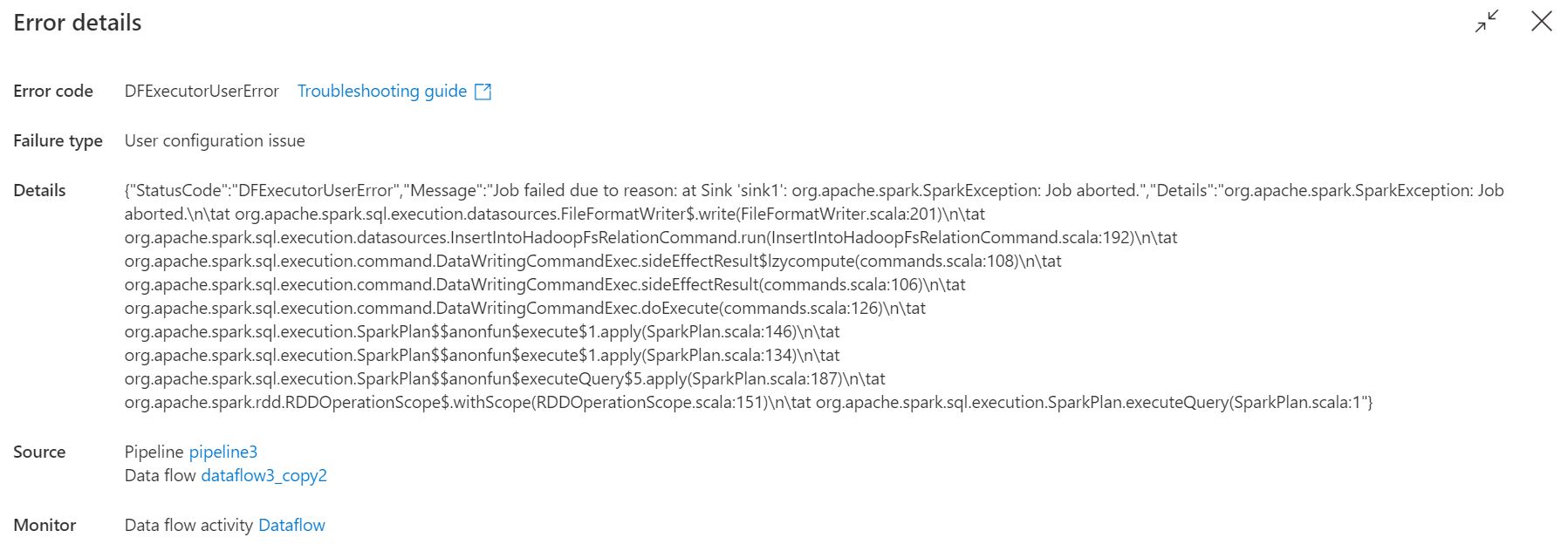

I have a pipeline in Synapse which calls a data flow. That data flow has started to fail and return the below error.

{"message":"Job failed due to reason: at Source 'RawTransaction': org.apache.spark.SparkException: Job aborted due to stage failure: Task 8 in stage 43.0 failed 4 times, most recent failure: Lost task 8.3 in stage 43.0 (TID 3283, 58924e9c16f8411a93ee73d20b870adc0004d790991, executor 1): org.apache.spark.SparkException: Exception thrown in awaitResult: \n\tat org.apache.spark.util.ThreadUtils$.awaitResult(ThreadUtils.scala:226)\n\tat org.apache.spark.util.ThreadUtils$.parmap(ThreadUtils.scala:290)\n\tat org.apache.spark.sql.execution.datasources.parquet.ParquetFileFormat$.readParquetFootersInParallel(ParquetFileFormat.scala:538)\n\tat org.apache.spark.sql.execution.datasources.parquet.ParquetFileFormat$$anonfun$9.apply(ParquetFileFormat.scala:611)\n\tat org.apache.spark.sql.execution.datasources.parquet.ParquetFileFormat$$anonfun$9.apply(ParquetFileFormat.scala:603)\n\tat org.apache.spark.rdd.RDD$$anonfun$mapPartitions$1$$anonfun$apply$23.apply(RDD.scala:823)\n\tat org.apache.spark.rdd.RDD$$anonfun$mapPartitions$1$$anonfun$apply$23.app. 
Details:at Source 'RawTransaction': org.apache.spark.SparkException: Job aborted due to stage failure: Task 8 in stage 43.0 failed 4 times, most recent failure: Lost task 8.3 in stage 43.0 (TID 3283, 58924e9c16f8411a93ee73d20b870adc0004d790991, executor 1): org.apache.spark.SparkException: Exception thrown in awaitResult: \n\tat org.apache.spark.util.ThreadUtils$.awaitResult(ThreadUtils.scala:226)\n\tat org.apache.spark.util.ThreadUtils$.parmap(ThreadUtils.scala:290)\n\tat org.apache.spark.sql.execution.datasources.parquet.ParquetFileFormat$.readParquetFootersInParallel(ParquetFileFormat.scala:538)\n\tat org.apache.spark.sql.execution.datasources.parquet.ParquetFileFormat$$anonfun$9.apply(ParquetFileFormat.scala:611)\n\tat org.apache.spark.sql.execution.datasources.parquet.ParquetFileFormat$$anonfun$9.apply(ParquetFileFormat.scala:603)\n\tat org.apache.spark.rdd.RDD$$anonfun$mapPartitions$1$$anonfun$apply$23.apply(RDD.scala:823)\n\tat org.apache.spark.rdd.RDD$$anonfun$mapPartitions$1$$anonfun$apply$23.apply(RDD.scala:823)\n\tat org.","failureType":"UserError","target":"Prepare_forsight_All","errorCode":"DFExecutorUserError"}

I have tried switching from memory optimised to general compute and increasing the cores on the integration runtime (IR), but neither resolved the issue.

Azure Data Lake Storage

Azure Synapse Analytics

Azure Data Factory

1 additional answer


praveen sharma

2022-02-16T15:36:36.977+00:00

This worked for me as well, thank you so much for sharing.