Hello @Hugo Dantas, and welcome to Microsoft Q&A. Please let me share a few ideas with you.

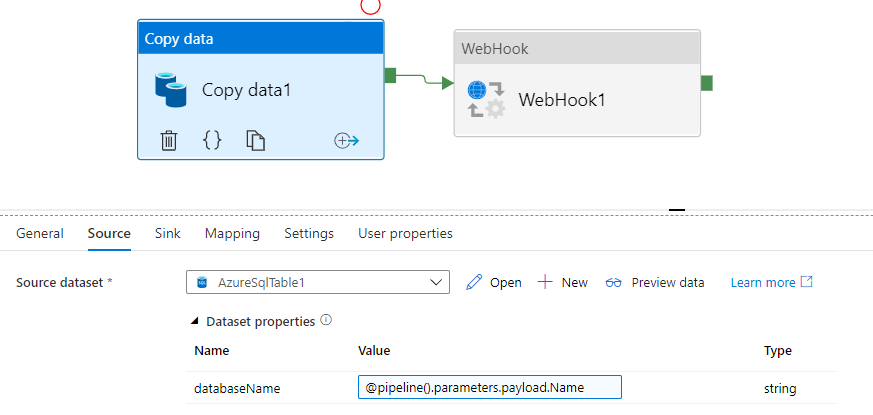

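For the solution you are currently pursuing, you would use a Lookup activity to read the contents of the blob that fired the trigger. Those contents are not placed into a pipeline parameter, because parameters are fixed when the pipeline run starts, and the trigger itself cannot read the contents of the blob. What the trigger can do is pass the blob's folder path and file name (`@triggerBody().folderPath` and `@triggerBody().fileName`) into pipeline parameters, so the Lookup's dataset knows which blob to read. To interpret a string as an object, use the `@json()` expression function, for example `@json(blob_contents)`, where `blob_contents` stands for the string you want to parse.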
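ADF expressions only run inside a pipeline, so here is a rough Python analogy (not ADF code) of what the conversion buys you: while the content is a plain string you cannot reach its fields, and after parsing you can. The blob contents and the field names `source` and `rows` are hypothetical.

```python
import json

# Hypothetical contents of the trigger blob, arriving as a raw string.
blob_contents = '{"source": "sales/2024-01.csv", "rows": 120}'

# Analogous to @json(blob_contents) in an ADF expression:
# turn the string into an object.
config = json.loads(blob_contents)

# Individual fields are now addressable, much like @json(blob_contents).source in ADF.
print(config["source"])  # sales/2024-01.csv
print(config["rows"] + 1)  # 121
```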

However, there is a more direct way to trigger the pipeline. Instead of using a blob event trigger, have you considered having your Azure Function start the pipeline run directly?

You can call the REST API (Pipelines - Create Run); the request body can carry the pipeline parameters.
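As a minimal sketch of what that call could look like from a Python Azure Function, using only the standard library: the request is a POST to the pipeline's `createRun` endpoint, and the JSON body maps parameter names to values. This assumes you already have an Azure Resource Manager access token (for example from `azure-identity`'s `DefaultAzureCredential().get_token("https://management.azure.com/.default")`), and that the identity has permission to run pipelines in the factory. The subscription, resource group, factory, pipeline, and `fileName` parameter in the usage comment are hypothetical.

```python
import json
import urllib.request

API_VERSION = "2018-06-01"  # Data Factory REST API version


def build_create_run_request(subscription_id, resource_group, factory_name,
                             pipeline_name, parameters, access_token):
    """Build (but do not send) a Pipelines - Create Run request.

    The body is a JSON object mapping pipeline parameter names to values.
    """
    url = (
        "https://management.azure.com"
        f"/subscriptions/{subscription_id}"
        f"/resourceGroups/{resource_group}"
        "/providers/Microsoft.DataFactory"
        f"/factories/{factory_name}"
        f"/pipelines/{pipeline_name}"
        f"/createRun?api-version={API_VERSION}"
    )
    body = json.dumps(parameters).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {access_token}",
            "Content-Type": "application/json",
        },
    )


def start_pipeline_run(request):
    """Send the request and return the runId from the JSON response."""
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())["runId"]


# Hypothetical usage inside the Function, after it has read the blob:
# req = build_create_run_request("<subscription-id>", "my-rg", "my-factory",
#                                "CopyPipeline", {"fileName": "input.json"}, token)
# run_id = start_pipeline_run(req)
```

Splitting "build" from "send" keeps the URL and body easy to inspect or log before anything hits the network.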

There is also a .NET SDK method, and there are other options as well.

The PowerShell cmdlet, `Invoke-AzDataFactoryV2Pipeline`, actually accepts a file as parameter input (`-ParameterFile`).
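If you go the PowerShell route, the parameter file is, as far as I know, a flat JSON object of parameter names to values. A small sketch of producing one from Python, assuming that format; the `fileName` and `rowCount` parameters are hypothetical.

```python
import json
from pathlib import Path


def parameter_file_text(parameters):
    """Serialize pipeline parameters as a flat JSON object (name -> value)."""
    return json.dumps(parameters, indent=2)


def write_parameter_file(parameters, path):
    """Write the parameter file to disk and return its path."""
    path = Path(path)
    path.write_text(parameter_file_text(parameters), encoding="utf-8")
    return path


# Hypothetical usage; the resulting file could then be passed as
#   Invoke-AzDataFactoryV2Pipeline -ResourceGroupName my-rg -DataFactoryName my-factory `
#       -PipelineName CopyPipeline -ParameterFile .\params.json
# write_parameter_file({"fileName": "input.json", "rowCount": 120}, "params.json")
```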