Hi @Zilvinas Kundrotas,

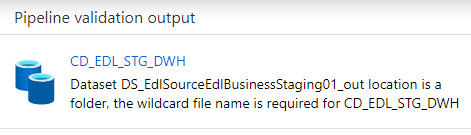

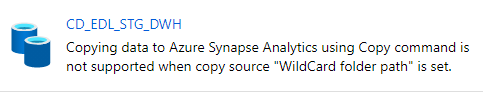

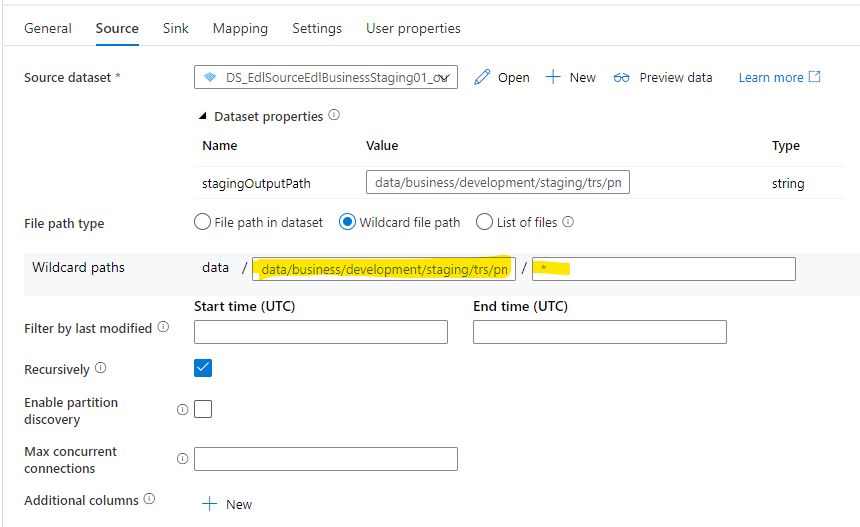

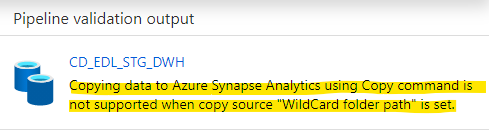

I think the problem lies in how the UI builds the configuration that gets sent, combined with the validation problems.

Anyhow, thanks to @PRADEEPCHEEKATLA, I found a combination that works:

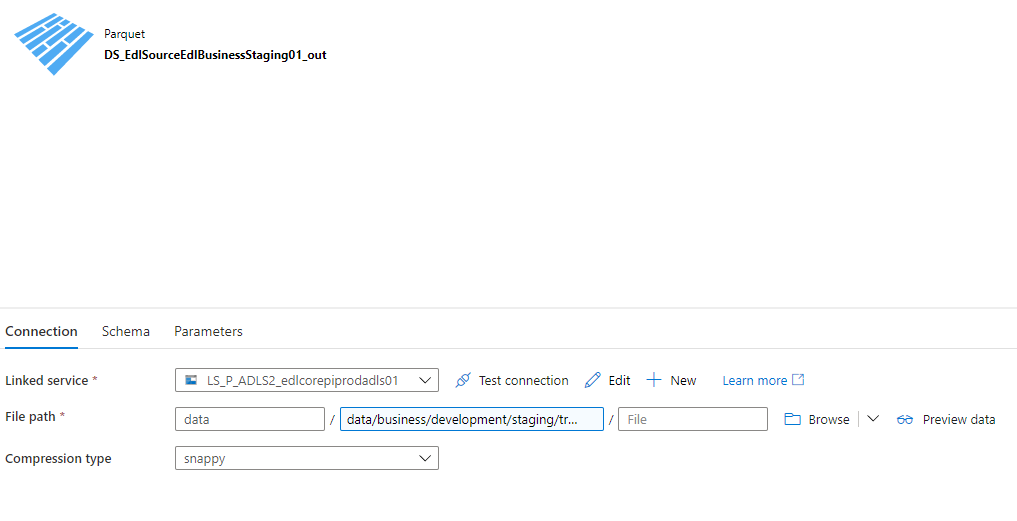

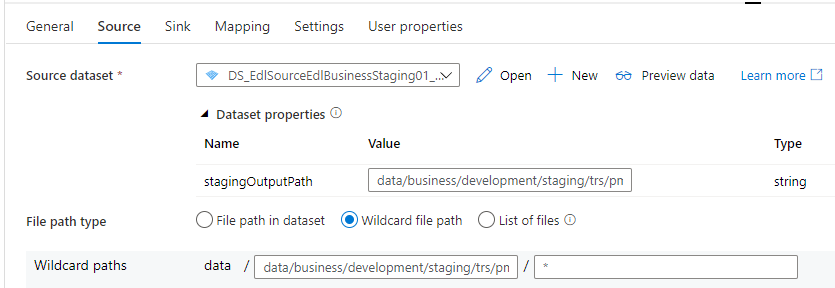

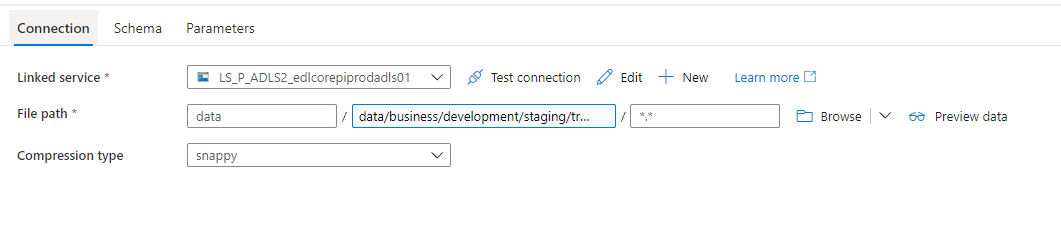

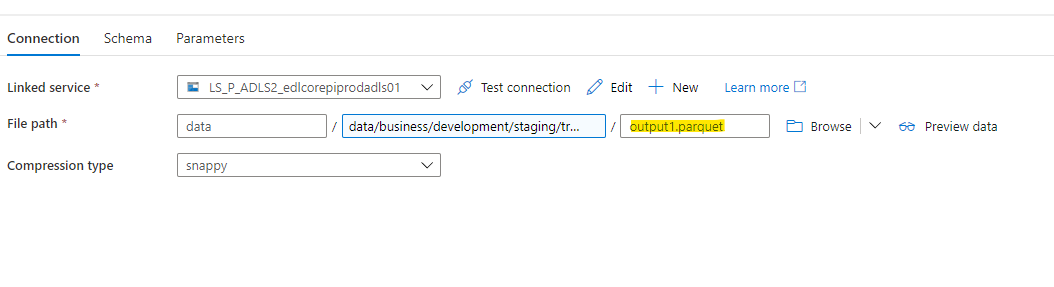

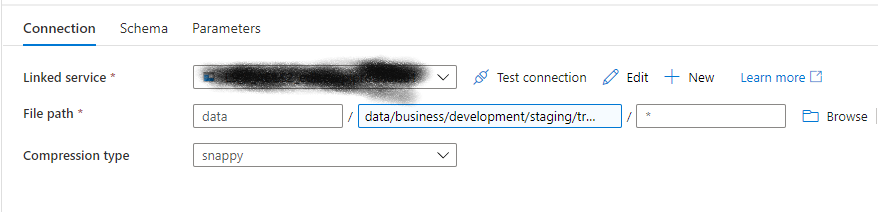

In the dataset, define everything including the wildcard for the file name:

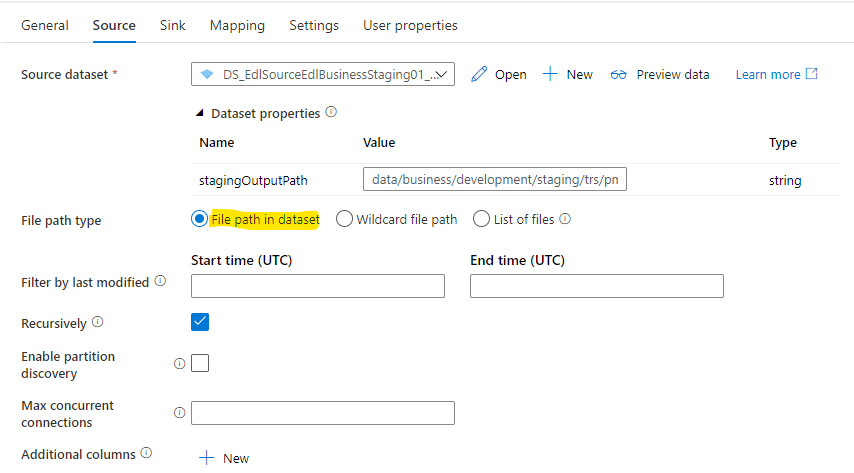

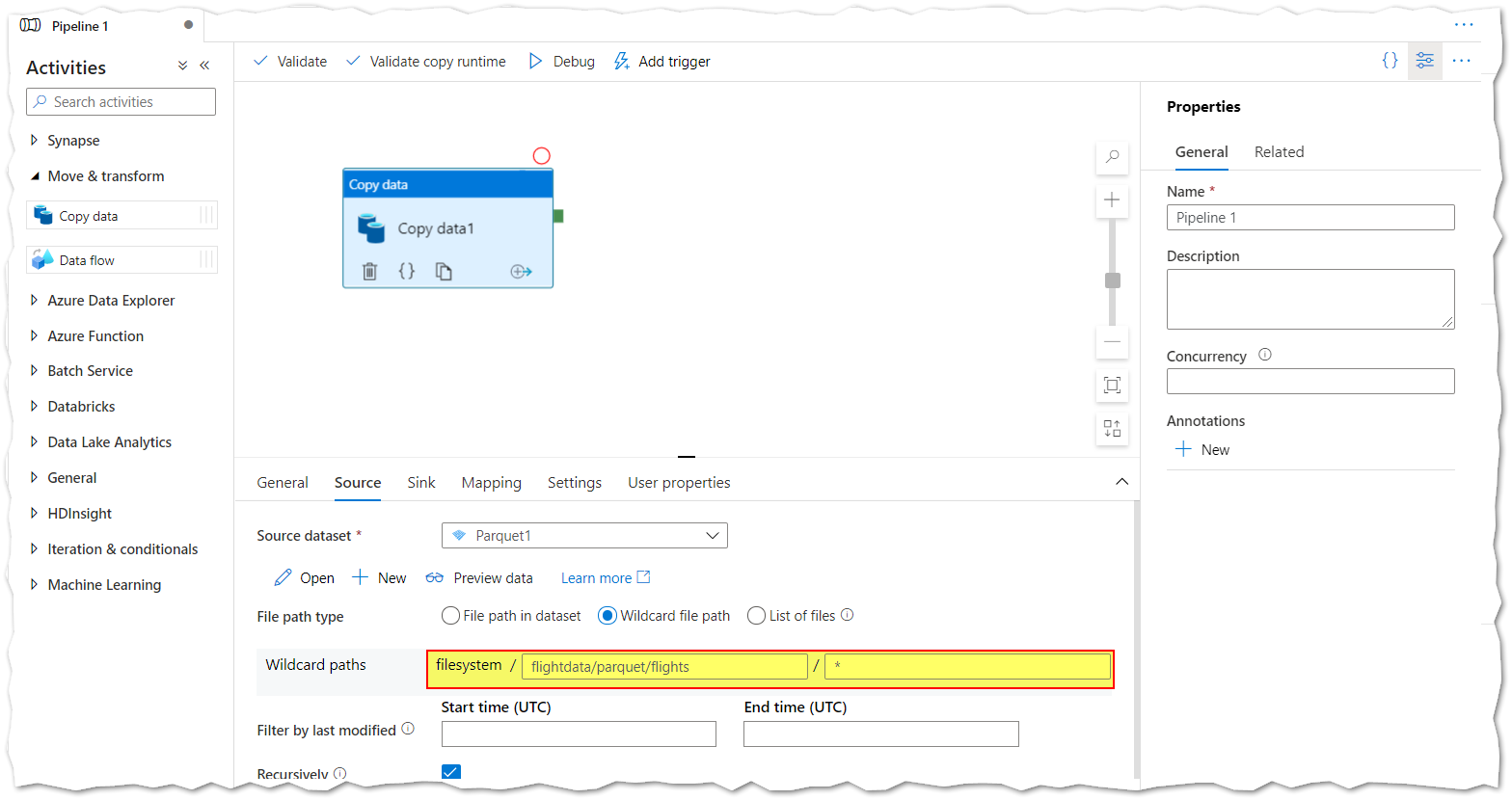

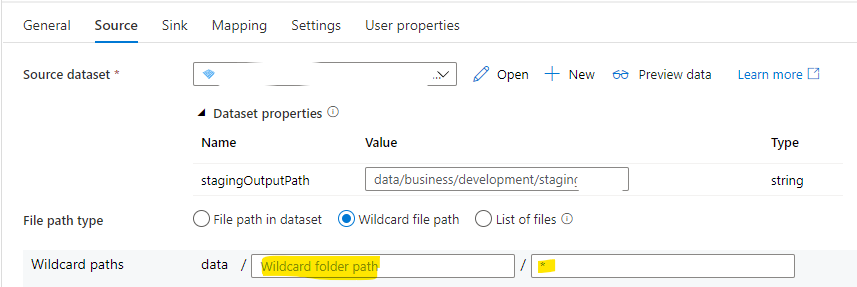

Then, in the copy activity, define the wildcard for the file name but leave the folder path empty.

That "hacks" the interface and produces the following input:

    "source": {
        "type": "ParquetSource",
        "storeSettings": {
            "type": "AzureBlobFSReadSettings",
            "recursive": true,
            "wildcardFileName": "*",
            "enablePartitionDiscovery": false
        }
    }
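If you want to check an exported pipeline for the same shape, here is a minimal Python sketch. It assumes you have copied the `source` fragment out of the pipeline JSON yourself. The helper name `matches_working_shape` is hypothetical, and `wildcardFolderPath` is the store setting I would expect to appear when the folder path is filled in (it does not show up in the input above).

```python
import json

# The source fragment from the post, wrapped in braces so it parses on its own.
SOURCE_JSON = """
{
  "source": {
    "type": "ParquetSource",
    "storeSettings": {
      "type": "AzureBlobFSReadSettings",
      "recursive": true,
      "wildcardFileName": "*",
      "enablePartitionDiscovery": false
    }
  }
}
"""


def matches_working_shape(fragment: dict) -> bool:
    """Hypothetical helper: True when a file-name wildcard is set
    and no folder-path wildcard is present (assumed key name)."""
    store = fragment.get("source", {}).get("storeSettings", {})
    has_file_wildcard = bool(store.get("wildcardFileName"))
    has_folder_wildcard = bool(store.get("wildcardFolderPath"))
    return has_file_wildcard and not has_folder_wildcard


fragment = json.loads(SOURCE_JSON)
print(matches_working_shape(fragment))
```

This only inspects the JSON locally; it does not call Data Factory or change anything in the pipeline.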

I guess it will be fixed in an upcoming release.