Hi Team,

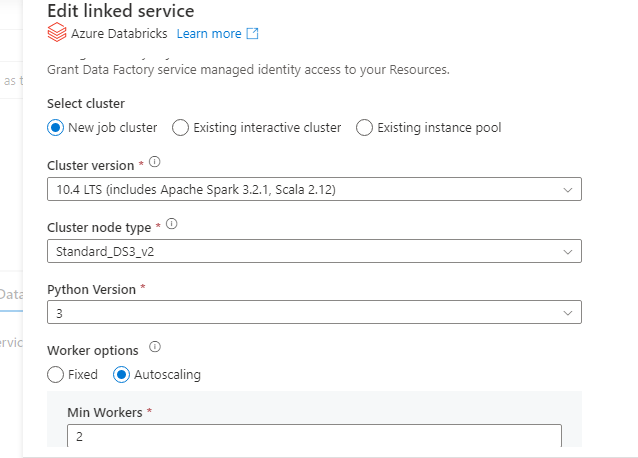

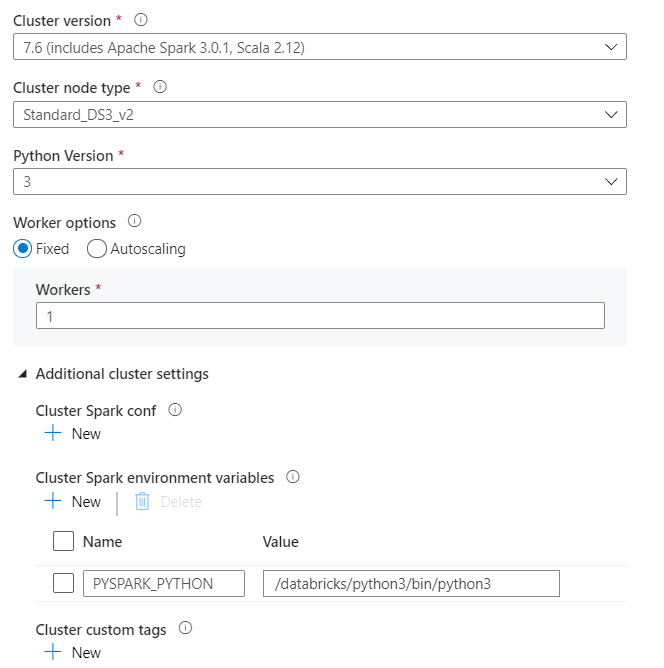

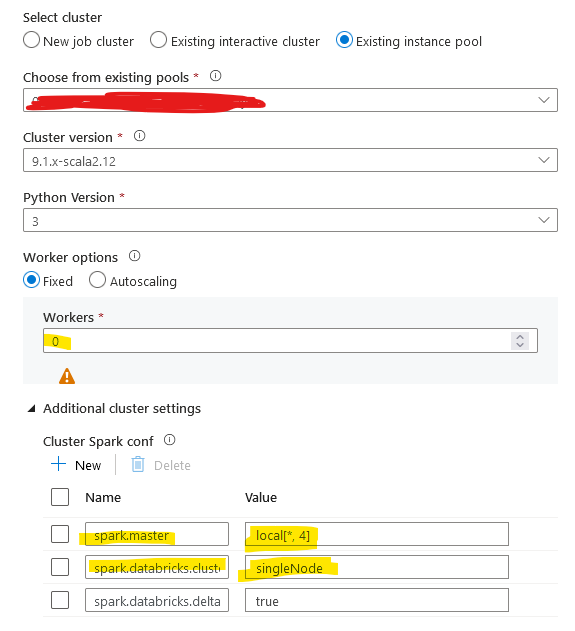

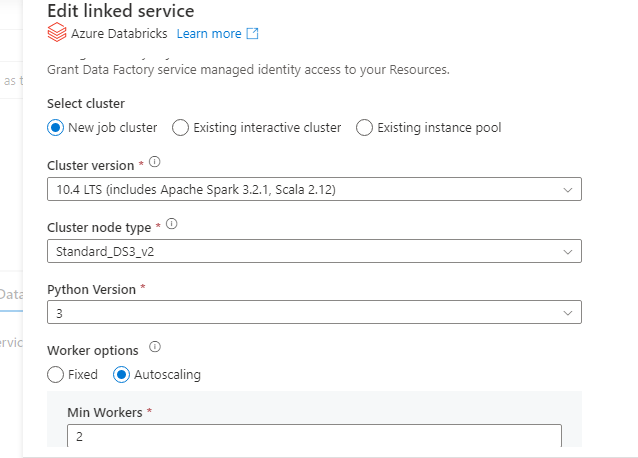

I am facing a similar issue: when I choose the new job cluster option in my ADF linked service, the notebook cannot use third-party libraries and throws the error below.

I am trying to execute an Azure Databricks notebook from Azure Data Factory, but the ADF pipeline fails. If I run the notebook (PySpark script) directly in Azure Databricks there is no error, but when it runs through the ADF pipeline I get the following error:

    ModuleNotFoundError: No module named 'prophet'

    ModuleNotFoundError                       Traceback (most recent call last)
          6 import pandas as pd
          7 import pyspark.pandas as ps
    ----> 8 from prophet import Prophet
          9 from pyspark.sql.types import StructType, StructField, StringType, FloatType, TimestampType, DateType, IntegerType
         10
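Because the notebook works interactively but not on the job cluster that ADF creates, one quick way to see what the ADF-created cluster is missing is to check for the modules before importing them. A minimal sketch using only the standard library; `missing_modules` is a hypothetical helper name and the module list is simply taken from the traceback above:

```python
import importlib.util
import sys


def missing_modules(names):
    """Return the top-level module names that this interpreter cannot find."""
    # find_spec returns None when a top-level module is not importable.
    return [name for name in names if importlib.util.find_spec(name) is None]


# Modules imported at the top of the notebook; 'prophet' is the one reported missing.
required = ["pandas", "pyspark", "prophet"]
missing = missing_modules(required)
print(f"Python {sys.version.split()[0]} at {sys.executable}; missing: {missing or 'none'}")
```

Running this as the first cell of the ADF-triggered notebook and comparing the output with the interactive run should show whether the job cluster simply lacks `prophet` or is also using a different Python environment.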

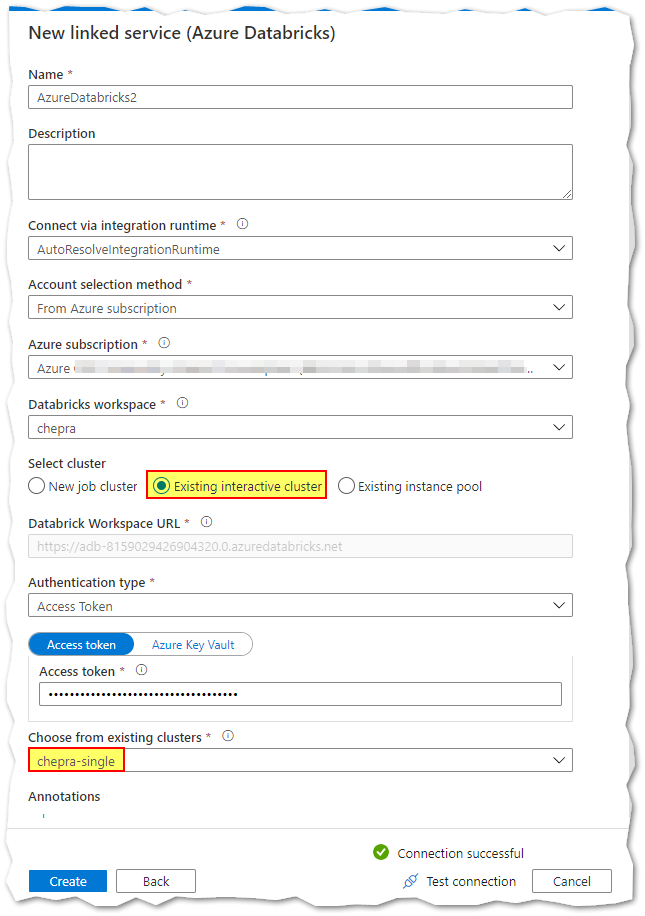

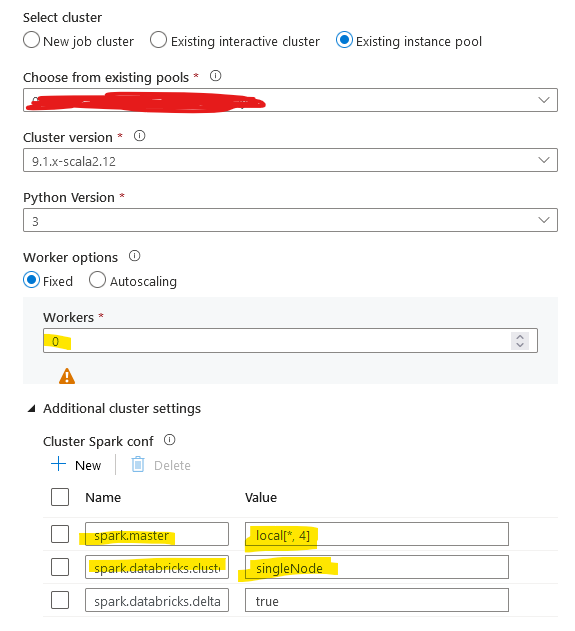

Also, if I choose an existing cluster on which my libraries are already installed, the notebook executes properly from ADF.
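Since the existing cluster already has a working environment, one option is to record the exact versions installed there so that the same pins can be requested for the new job cluster. A minimal sketch, assuming Python 3.8 or later (for `importlib.metadata`); `installed_versions` and `as_requirements` are hypothetical helper names and the package list is only illustrative:

```python
from importlib import metadata


def installed_versions(packages):
    """Map each distribution name to its installed version, or None if it is not installed."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


def as_requirements(versions):
    """Render installed packages as pinned 'name==version' lines, skipping missing ones."""
    return [f"{name}=={ver}" for name, ver in versions.items() if ver is not None]


# Intended to run on the existing cluster where the notebook works.
pins = as_requirements(installed_versions(["prophet", "pandas", "matplotlib"]))
print("\n".join(pins))
```

The printed `name==version` lines could then be used when adding libraries to the new job cluster, provided that cluster can actually reach a package index.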

How can I use the new job cluster option to execute my Databricks notebook from ADF? There is an option to append libraries, where I provided the DBFS FileStore location of the .whl files, but the library fails to install with the error below:

    %pip install /dbfs/FileStore/jars/prophet/prophet-1.1-cp38-cp38-manylinux_2_17_x86_64.manylinux2014_x86_64.whl

    Python interpreter will be restarted.
    Processing /dbfs/FileStore/jars/prophet/prophet-1.1-cp38-cp38-manylinux_2_17_x86_64.manylinux2014_x86_64.whl
    Requirement already satisfied: matplotlib>=2.0.0 in /databricks/python3/lib/python3.8/site-packages (from prophet==1.1) (3.4.2)
    WARNING: Retrying (Retry(total=4, connect=None, read=None, redirect=None, status=None)) after connection broken by 'ProtocolError('Connection aborted.', ConnectionResetError(104, 'Connection reset by peer'))': /simple/setuptools-git/
    WARNING: Retrying (Retry(total=3, connect=None, read=None, redirect=None, status=None)) after connection broken by 'ProtocolError('Connection aborted.', ConnectionResetError(104, 'Connection reset by peer'))': /simple/setuptools-git/
    WARNING: Retrying (Retry(total=2, connect=None, read=None, redirect=None, status=None)) after connection broken by 'ProtocolError('Connection aborted.', ConnectionResetError(104, 'Connection reset by peer'))':
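The retries in this log come from pip trying to fetch the dependency `setuptools-git` from a package index (`/simple/setuptools-git/`) and having the connection reset, which suggests the job cluster may not be able to reach PyPI. Assuming that is the cause, one workaround is to pre-download the prophet wheel together with all of its dependency wheels into a single DBFS folder (for example with `pip download` on a machine that has internet access, using platform and Python-version options that match the cluster) and tell pip to install only from that folder. A minimal sketch; `offline_pip_command` and `install_offline` are hypothetical helper names:

```python
import subprocess
import sys


def offline_pip_command(wheel_dir, requirement):
    """Build a pip command that resolves `requirement` and its dependencies only from wheel_dir."""
    return [
        sys.executable, "-m", "pip", "install",
        "--no-index",                 # do not contact PyPI or any other index
        f"--find-links={wheel_dir}",  # look for the package and its dependencies in this folder
        requirement,
    ]


def install_offline(wheel_dir, requirement):
    """Run pip; raises subprocess.CalledProcessError if a needed wheel is missing from wheel_dir."""
    subprocess.run(offline_pip_command(wheel_dir, requirement), check=True)


# Assumes every dependency wheel was copied into this DBFS folder beforehand.
# install_offline("/dbfs/FileStore/jars/prophet", "prophet==1.1")
```

In a notebook cell the equivalent would be `%pip install --no-index --find-links=/dbfs/FileStore/jars/prophet prophet==1.1`, which is generally preferable on Databricks because `%pip` installs a notebook-scoped library, whereas running pip through `subprocess` only changes the driver's Python environment.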

Could someone please help me resolve this issue?