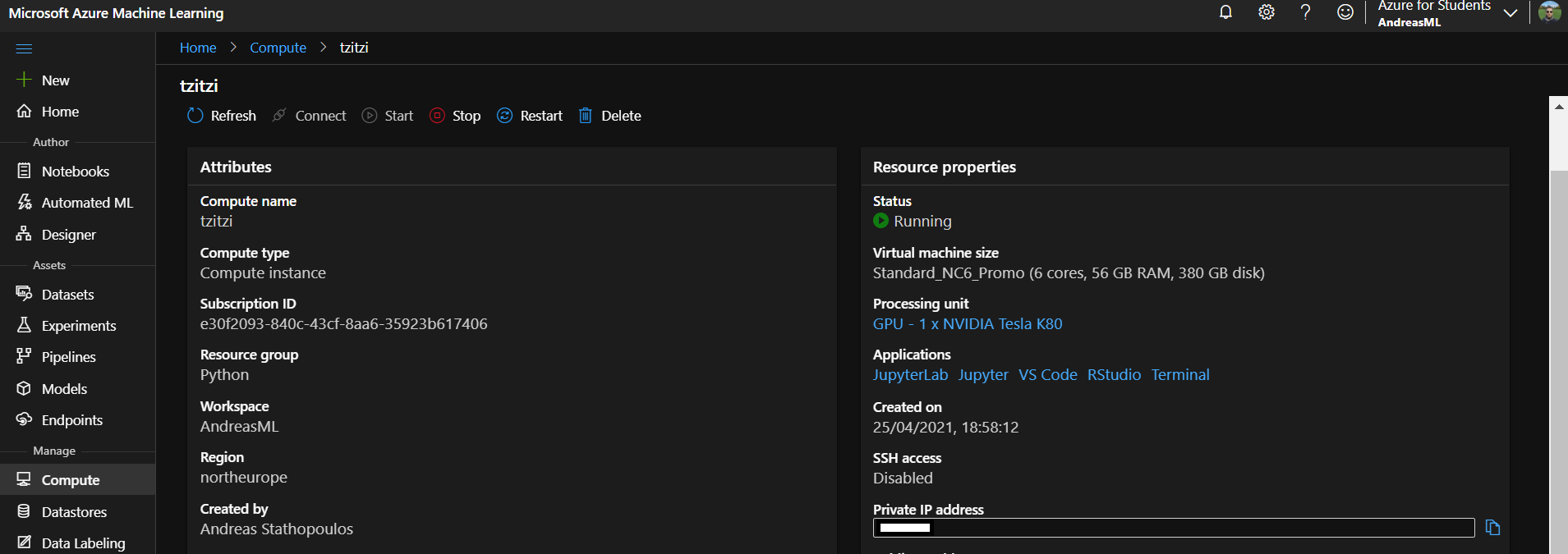

Subscription ID: e30f2093-840c-43cf-8aa6-35923b617406

I created a Machine Learning resource and then uploaded a Jupyter notebook that I had previously run in Google Colab.

On Google Colab's free GPU, my deep learning model for object localization (YOLOv5) trains a heavier variant (`Yolov5l.pt`, 100 epochs) in 4.5 hours. On Azure, the much smaller variant (`Yolov5s.pt`) has been running for 12 hours and is only about halfway through (41 of 100 epochs).
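A quick back-of-the-envelope comparison using only the figures above, assuming every epoch takes roughly the same time (a simplification, since validation and caching can make some epochs slower):

```python
# Figures taken from the post; assumes epochs take roughly constant time.
COLAB_HOURS, COLAB_EPOCHS = 4.5, 100       # Yolov5l.pt, finished run
AZURE_HOURS, AZURE_EPOCHS_DONE = 12.0, 41  # Yolov5s.pt, run still in progress
TOTAL_EPOCHS = 100


def minutes_per_epoch(hours, epochs):
    """Average wall-clock minutes spent per epoch."""
    return hours * 60 / epochs


colab_min = minutes_per_epoch(COLAB_HOURS, COLAB_EPOCHS)
azure_min = minutes_per_epoch(AZURE_HOURS, AZURE_EPOCHS_DONE)
azure_projected_hours = azure_min * TOTAL_EPOCHS / 60

print(f"Colab: {colab_min:.1f} min/epoch")  # Colab: 2.7 min/epoch
print(f"Azure: {azure_min:.1f} min/epoch, ~{azure_projected_hours:.1f} h "
      f"for {TOTAL_EPOCHS} epochs")  # Azure: 17.6 min/epoch, ~29.3 h for 100 epochs
print(f"Azure is ~{azure_min / colab_min:.1f}x slower per epoch")  # ~6.5x
```

So the Azure run is on track to take roughly 29 hours, about 6.5 times longer per epoch than Colab, despite training the smaller model.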

Why is it so slow?

What can I change to make it faster?

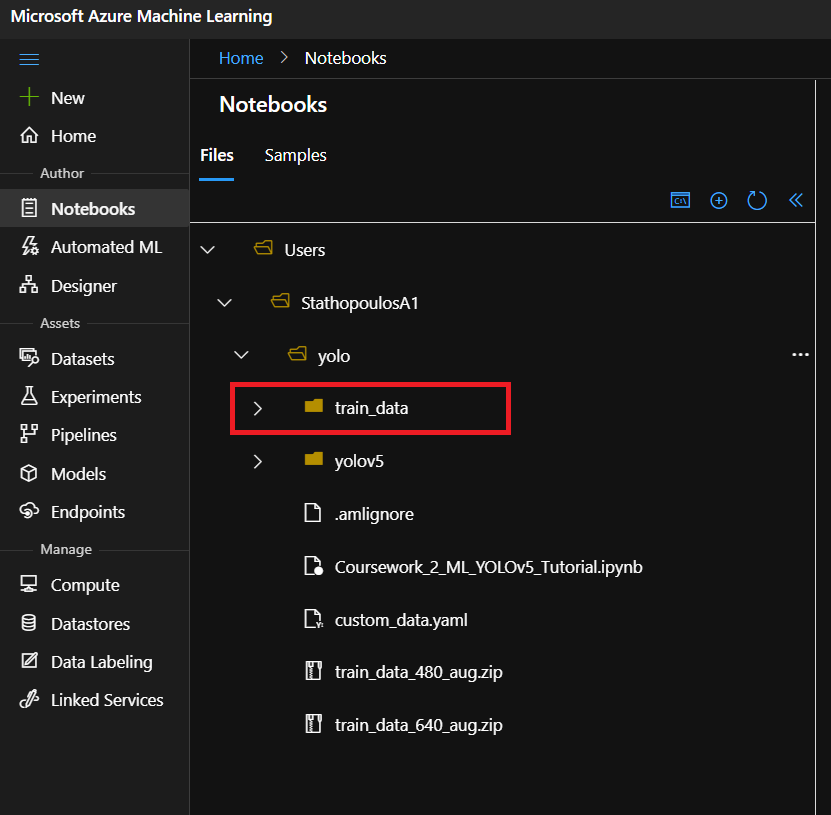

FYI: the second image shows where I uploaded my training dataset.

Same problem for me!

Even though my Jupyter notebook reports:

Num GPUs Available: 1

my TensorFlow models run slower than on my local Core i5 PC, which has no GPU.
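A GPU being detected does not mean it is busy. One way to check is to poll `nvidia-smi` while training runs: consistently low utilization suggests the GPU is waiting on something else, such as data loading. This is a minimal sketch, assuming the NVIDIA driver tools (`nvidia-smi`) are installed on the compute instance; the function names are illustrative, not part of any Azure or TensorFlow API.

```python
import subprocess
import time

# Fields requested from nvidia-smi; memory values are reported in MiB.
QUERY = "utilization.gpu,memory.used,memory.total"
KEYS = ("util_pct", "mem_used_mib", "mem_total_mib")


def parse_gpu_query(output):
    """Parse `nvidia-smi --query-gpu=... --format=csv,noheader,nounits` output.

    Returns one dict per GPU line. Fields that nvidia-smi reports as
    "[N/A]" or "[Not Supported]" become None.
    """
    gpus = []
    for line in output.strip().splitlines():
        fields = [f.strip() for f in line.split(",")]
        values = [int(f) if f.isdigit() else None for f in fields]
        gpus.append(dict(zip(KEYS, values)))
    return gpus


def sample_gpu(samples=5, interval_s=2.0):
    """Poll nvidia-smi a few times; stop early if the tool is unavailable."""
    cmd = ["nvidia-smi", f"--query-gpu={QUERY}", "--format=csv,noheader,nounits"]
    readings = []
    for i in range(samples):
        try:
            result = subprocess.run(cmd, capture_output=True, text=True, check=True)
        except (FileNotFoundError, subprocess.CalledProcessError):
            break  # no NVIDIA tools or no driver: nothing to report
        readings.append(parse_gpu_query(result.stdout))
        if i < samples - 1:
            time.sleep(interval_s)
    return readings
```

Run `print(sample_gpu())` in a separate terminal or notebook while the model trains. Utilization that stays near zero for long stretches points away from the GPU and toward the input pipeline or storage.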

Have you checked the disk IOPS on the VM? The NC6 Promo size only supports Standard disks, which have an IOPS ceiling of 500.
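To see whether disk reads could be the bottleneck, you can time how fast the training images are read from disk. Very roughly, if each small image costs at least one I/O operation, a 500 IOPS ceiling limits reading to at most a few hundred files per second. This is a rough stdlib-only sketch, not an Azure tool. It is assumed to be run against the dataset folder, and the OS page cache will inflate the numbers for files that were read recently.

```python
import time
from pathlib import Path


def measure_read_rate(root, max_files=2000, block_size=1 << 20):
    """Read up to max_files files under root; report files/s and MB/s.

    Results include OS page-cache effects, so point it at data that has
    not been read recently for a more meaningful number.
    """
    files = [p for p in Path(root).rglob("*") if p.is_file()][:max_files]
    total_bytes = 0
    start = time.perf_counter()
    for path in files:
        with open(path, "rb") as fh:
            # Read in chunks so large files do not need to fit in memory.
            while chunk := fh.read(block_size):
                total_bytes += len(chunk)
    elapsed = max(time.perf_counter() - start, 1e-9)  # avoid division by zero
    return {
        "files": len(files),
        "bytes": total_bytes,
        "seconds": elapsed,
        "files_per_s": len(files) / elapsed,
        "mb_per_s": total_bytes / elapsed / 1e6,
    }
```

For example, `print(measure_read_rate("/path/to/dataset/images"))`, where the path is a placeholder for wherever the training images live. A `files_per_s` figure in the low hundreds would be consistent with the disk, not the GPU, limiting training speed.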