Hello again @sakuraime.

There is no "easy button" for import/export, especially for linked services. There are a few approaches, depending on how much you need to move, how comfortable you are with code, and whether the linked services use Key Vault or store credentials themselves.

----------

Pipelines are much easier to move than linked services. The simplest way to copy a resource is to find the { } button.

Clicking the { } button brings up the JSON definition of the resource. Click the "Copy to clipboard" button. Then go to the new factory and create a new resource of the same type, giving it the same name as the old one. Finally, click the { } button for the new resource, erase its contents, and paste in the copied definition.

This method is good when you only have a few resources to copy over. It also requires you to have moved any supporting resources first. If you try to paste in a pipeline but the linked service it depends on is missing, you will get an error. Move the linked service first, then the dataset, then the pipeline.
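If you are copying more than one or two resources, it can help to write the list down and sort it into that dependency order before you start. Here is a minimal sketch of the idea. The resource names and the `sort_for_copy` helper are made up for illustration and are not part of any Data Factory tooling.

```python
# Order in which resources must exist in the target factory:
# a pipeline needs its datasets, and a dataset needs its linked service.
DEPLOY_ORDER = {"linkedService": 0, "dataset": 1, "pipeline": 2}


def sort_for_copy(resources):
    """Return (kind, name) pairs sorted so that dependencies come first."""
    return sorted(resources, key=lambda item: DEPLOY_ORDER[item[0]])


if __name__ == "__main__":
    # Hypothetical resources, listed in the wrong order on purpose.
    to_copy = [
        ("pipeline", "CopySales"),
        ("dataset", "SalesCsv"),
        ("linkedService", "SalesBlob"),
    ]
    for kind, name in sort_for_copy(to_copy):
        print(kind, name)
```

This only orders by resource type. It does not look inside the JSON, so it will not catch a pipeline that calls another pipeline.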

All three of these can be gathered at once by clicking the ... button and downloading the support files.

----------

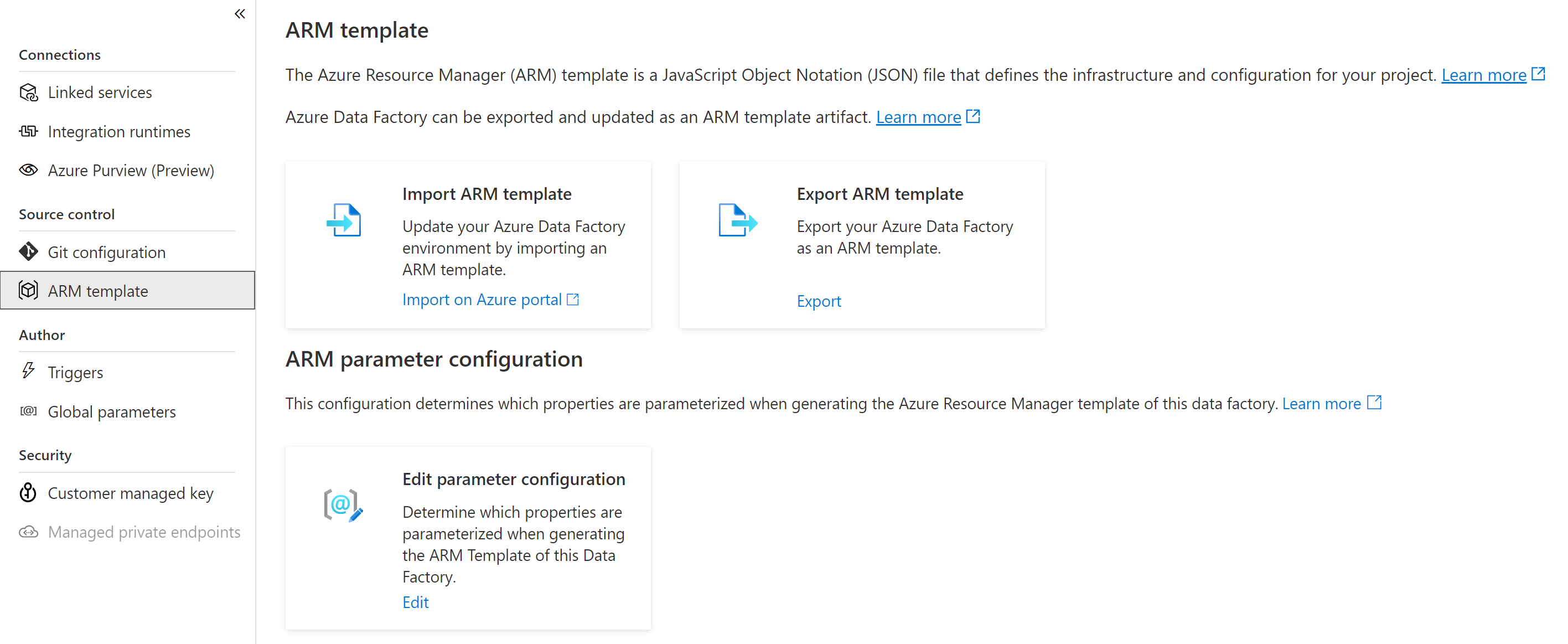

If you need to move over ALL of the linked services, datasets, and pipelines (and maybe more), then you could borrow a page from the CI/CD methods. You can export an ARM template of the entire factory. This does not let you pick some items and not others. However, you can change which values are left as defaults. Linked service passwords and other credentials are not preserved in the exported files. This is to help keep your data safe. When you deploy the template to the new factory, you will need to re-enter any passwords or secrets.

Using Key Vault makes this much easier, as you only need to set up access to the Key Vault. No more need to enter passwords or secrets.
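For linked services that do not use Key Vault, it helps to know up front which secrets you will be asked for. ARM templates declare secret inputs as parameters of type `secureString` or `secureObject`, so one rough way to list them is to scan the template's `parameters` section. This is only a sketch: the sample template below is hand-written for illustration and is not real export output, and the parameter names in your own export will differ.

```python
import json


def secure_parameters(template):
    """Return the names of parameters declared as secureString or secureObject."""
    params = template.get("parameters", {})
    return sorted(
        name
        for name, spec in params.items()
        if spec.get("type", "").lower() in ("securestring", "secureobject")
    )


# Hand-written stand-in for an exported template (hypothetical names).
sample = json.loads("""
{
  "parameters": {
    "factoryName": {"type": "string"},
    "SalesSql_connectionString": {"type": "secureString"}
  },
  "resources": []
}
""")

print(secure_parameters(sample))  # ['SalesSql_connectionString']
```

To run it against a real export, load the template file with `json.load` and pass the result to `secure_parameters`.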

This process only writes to the new factory. If the new factory already has a resource with the same name, that resource may be overwritten, but nothing will be erased.

----------

If you need to move a medium quantity of resources, more than a few but not all, and you are comfortable writing your own code, you can make use of the Data Factory SDKs or REST API. I have used the REST API to fetch pipeline definitions before. Linked services will still not give you the passwords, so you will still need to re-enter those yourself.
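As a rough sketch of the REST route, the snippet below builds the URL for the "get pipeline" call and fetches the definition using only the Python standard library. It assumes you already have an Azure Resource Manager bearer token from somewhere else (getting one is not shown), and the subscription, resource group, factory, and pipeline names are placeholders. The helper names `pipeline_url` and `fetch_pipeline` are mine, not part of any SDK.

```python
import json
import urllib.request

API_VERSION = "2018-06-01"  # Data Factory (V2) REST API version


def pipeline_url(subscription_id, resource_group, factory, pipeline):
    """Build the management URL for a single pipeline in a factory."""
    return (
        "https://management.azure.com"
        f"/subscriptions/{subscription_id}"
        f"/resourceGroups/{resource_group}"
        f"/providers/Microsoft.DataFactory/factories/{factory}"
        f"/pipelines/{pipeline}?api-version={API_VERSION}"
    )


def fetch_pipeline(url, token):
    """GET the pipeline definition as a dict. Needs network access and a valid token."""
    request = urllib.request.Request(url, headers={"Authorization": f"Bearer {token}"})
    with urllib.request.urlopen(request) as response:
        return json.load(response)


if __name__ == "__main__":
    # Placeholder values; replace them with your own before calling fetch_pipeline.
    print(pipeline_url("<subscription-id>", "<resource-group>", "<old-factory>", "<pipeline-name>"))
```

Writing the definition into the new factory is a PUT against the same URL shape with the new factory's name. Check the REST reference for the exact request body before relying on it.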