Hi Team, Could you please help me here.? Thanks!

Extract files in batches from data lake in Azure Data factory

Hi Team,

In my data lake storage has multiple files almost 100 which contains around 27M records and I am loading on the daily basis(Delta Load) in azure SQL DB Stage table (Using copy activity) which is causing performance issue. After stage table I am using stored procedure activity to load data in target table from stage table.

Currently pipeline is taking almost between 12-15 hours to get complete.

files type is CSV, but let me know if it is possible to implement the logic for Snappy.parquet files

COPY ACTVITY(Data lake to STG table)--->STORED PROCEDURE ACTIVITY(STG To Target)

Is there any way to load the files in batches ?

STEP1:-means first time copy only 10---Load in stg table---stage table to target table then truncate stg table.

STEP2:-means first time copy only 10-20---Load in stg table---stage table to target table then truncate stg table

Could you please help me here.?

I am looking forward your response.

Thanks,

Pankaj

Azure SQL Database

Azure Data Lake Storage

Azure Data Factory

2 answers

Sort by: Most helpful

-

-

MartinJaffer-MSFT 26,236 Reputation points

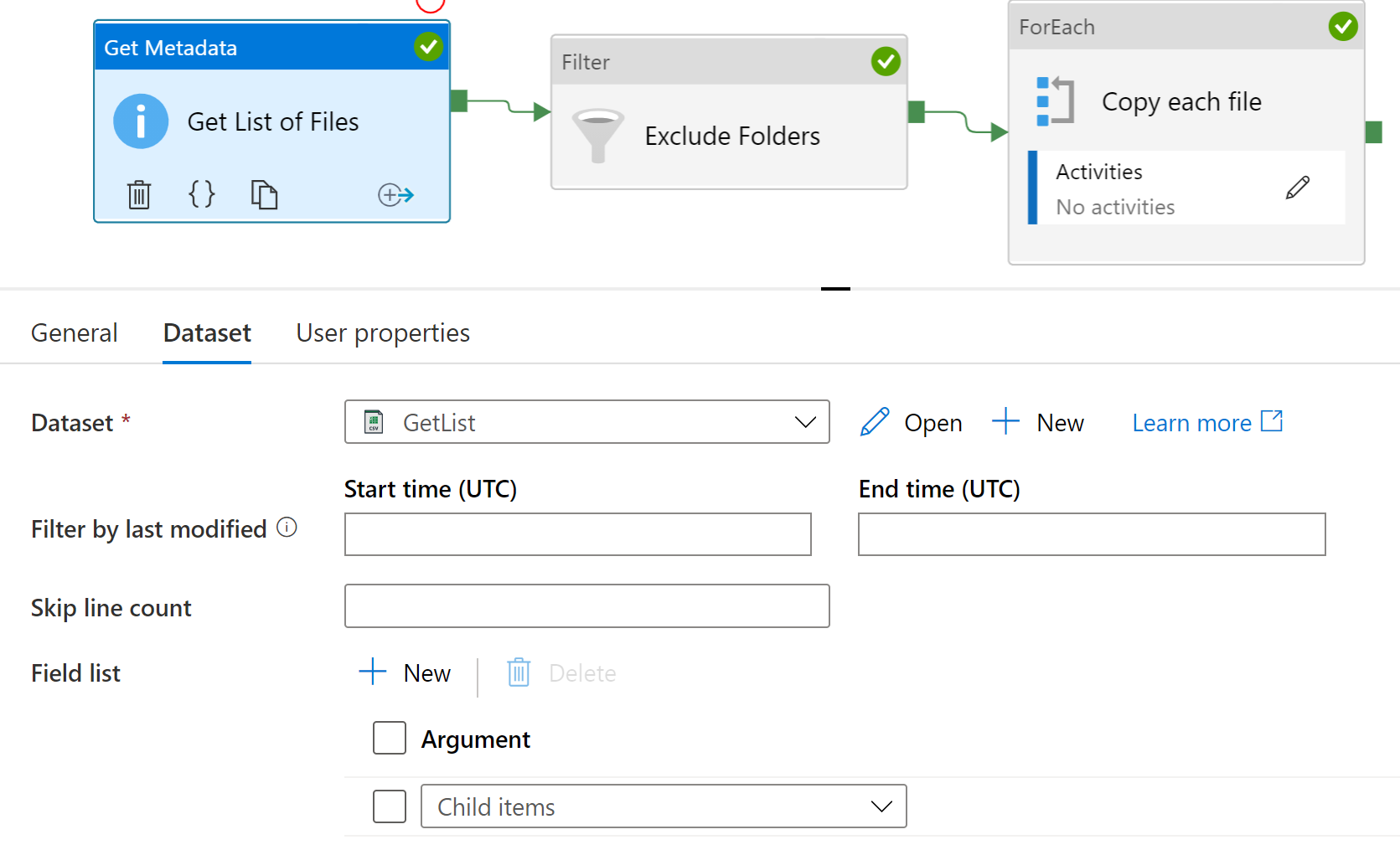

2021-05-06T15:15:55.48+00:00 Three step process:

- Get list of files in the folder

- Exclude any not-files (subfolders)

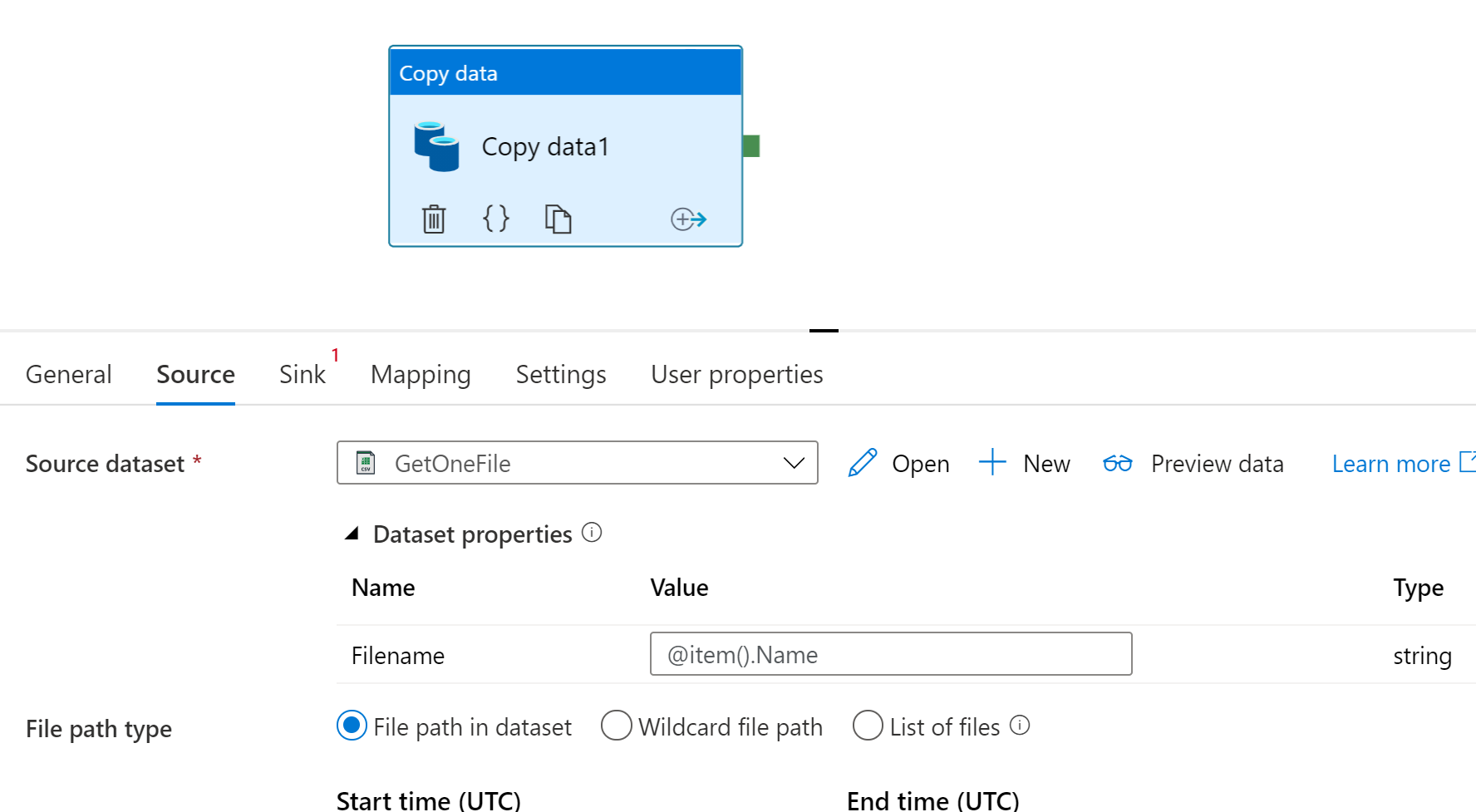

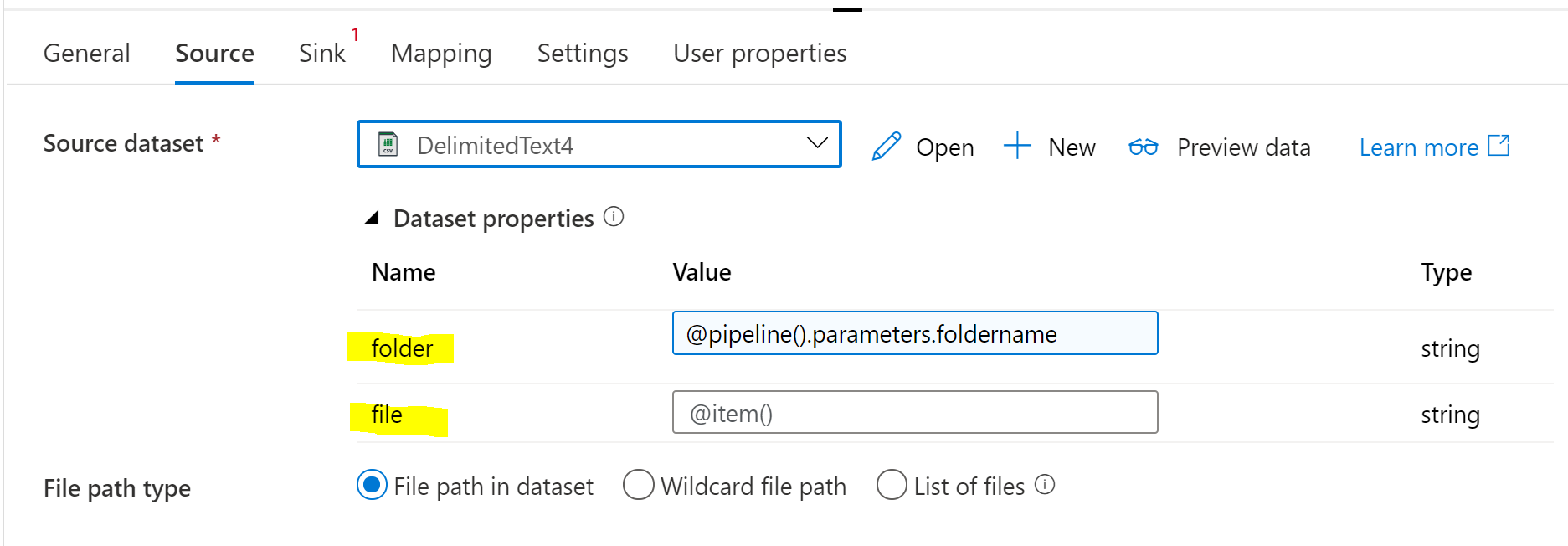

- ForEach Loop over the list of files. Pass the file name to Copy activity.

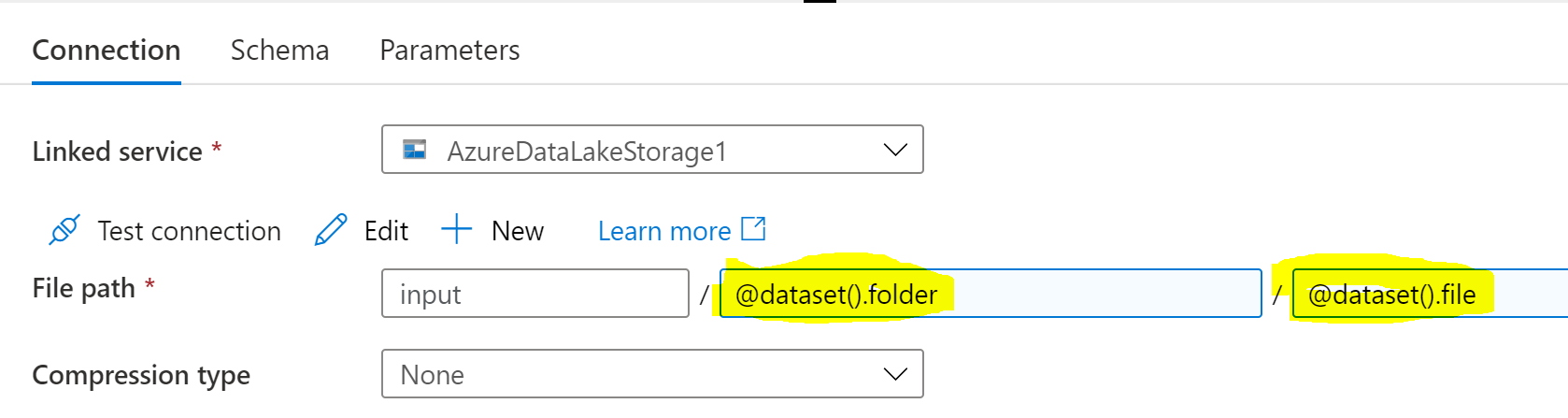

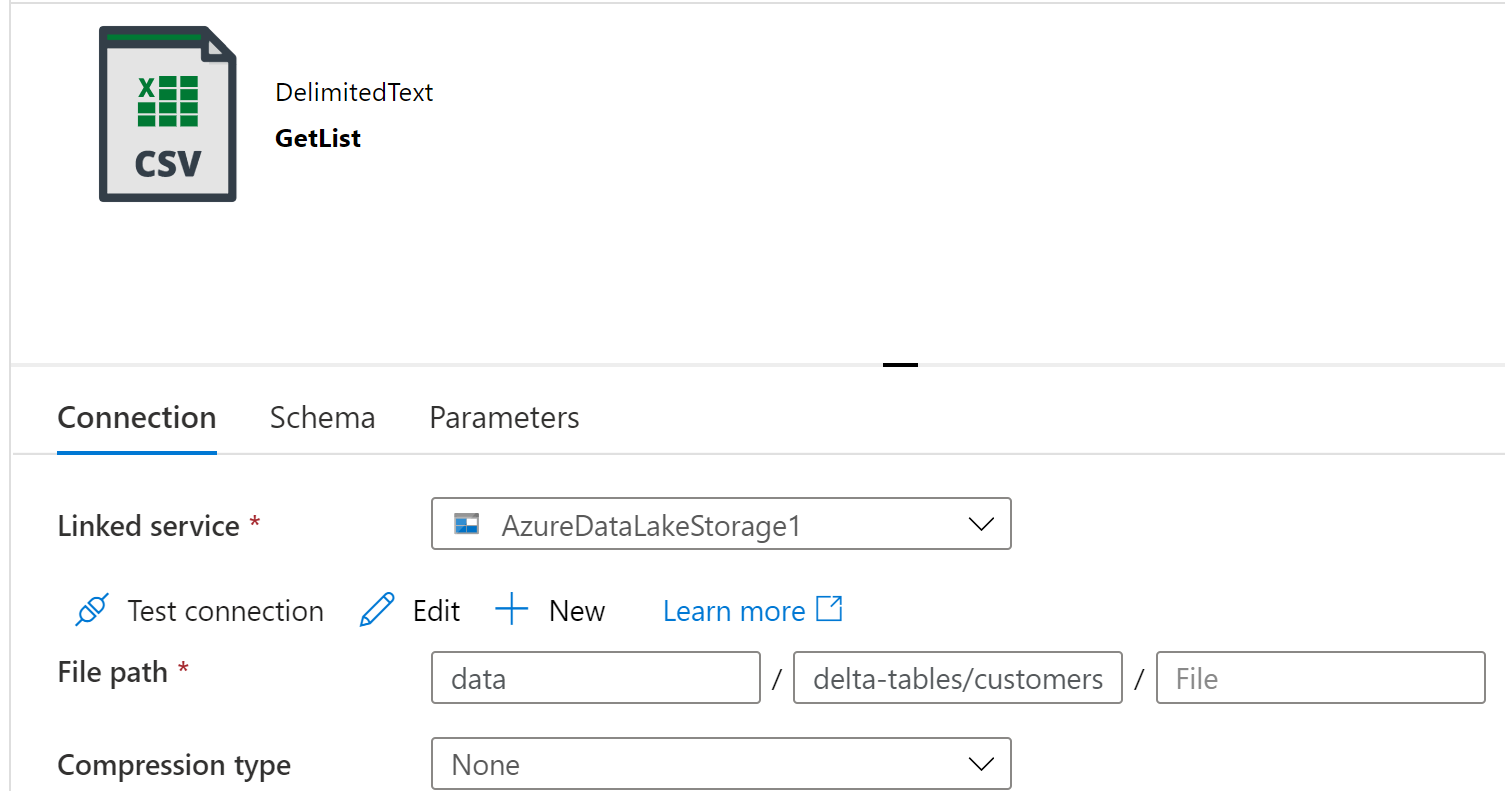

Set up dataset and Get Metadata activity to fetch files in folder

Filter activity:

Items: @activity('Get List of Files').output.childItems

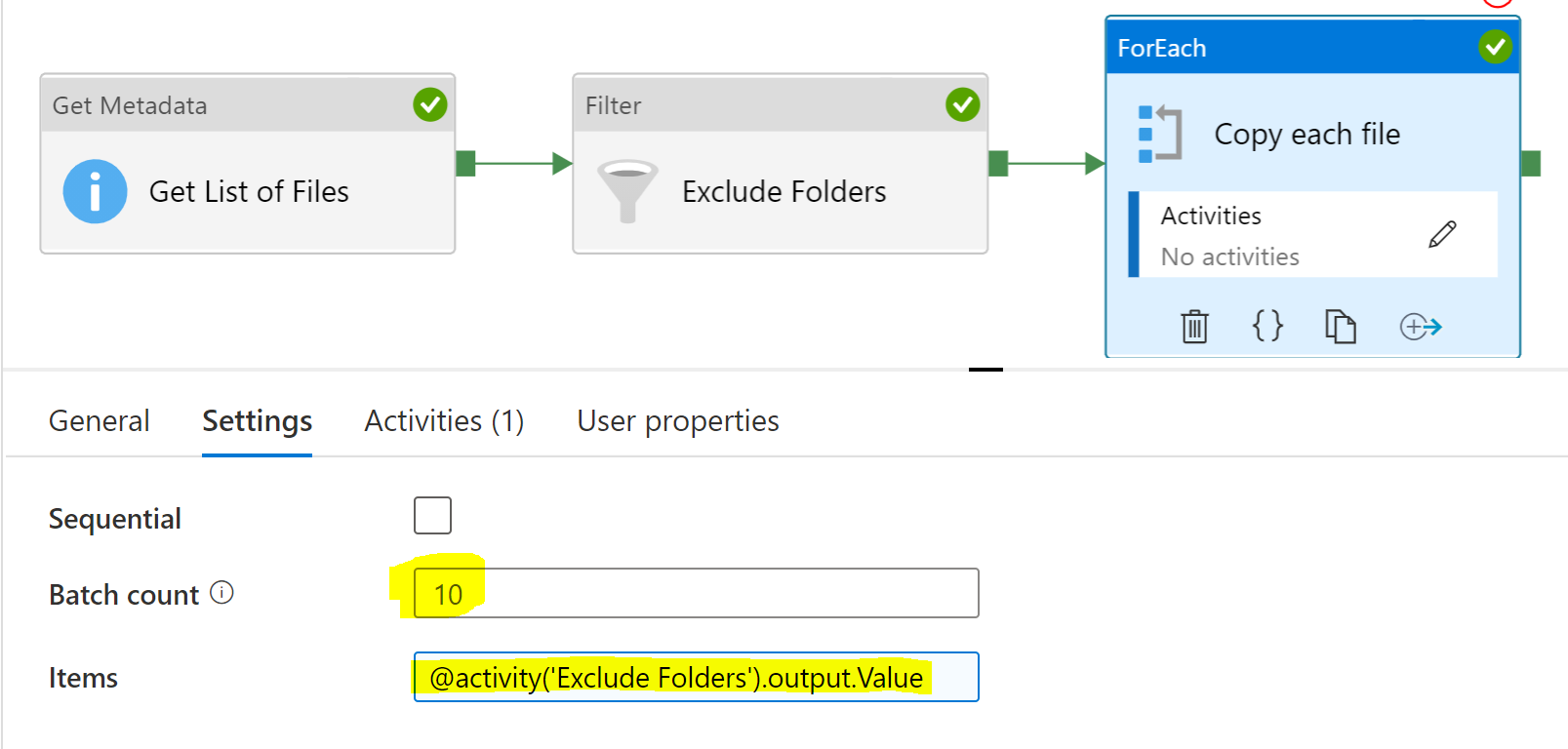

Condition: @equals(item().type,'File')Set up ForEach loop

Batch count: 10, or however many you want to be processed at a time

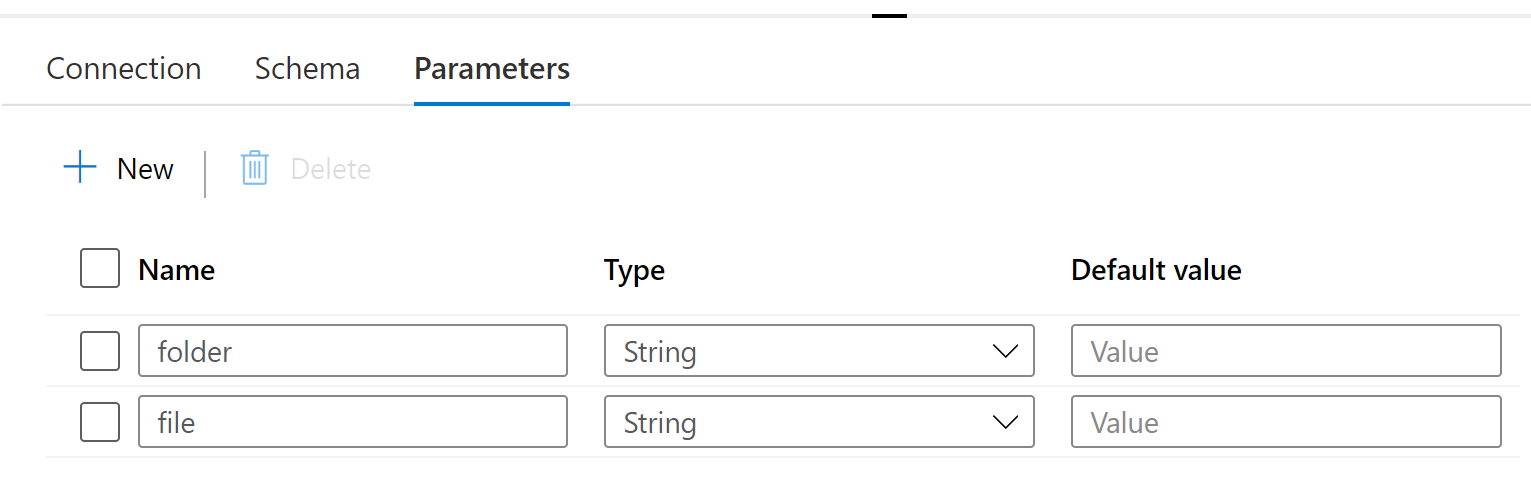

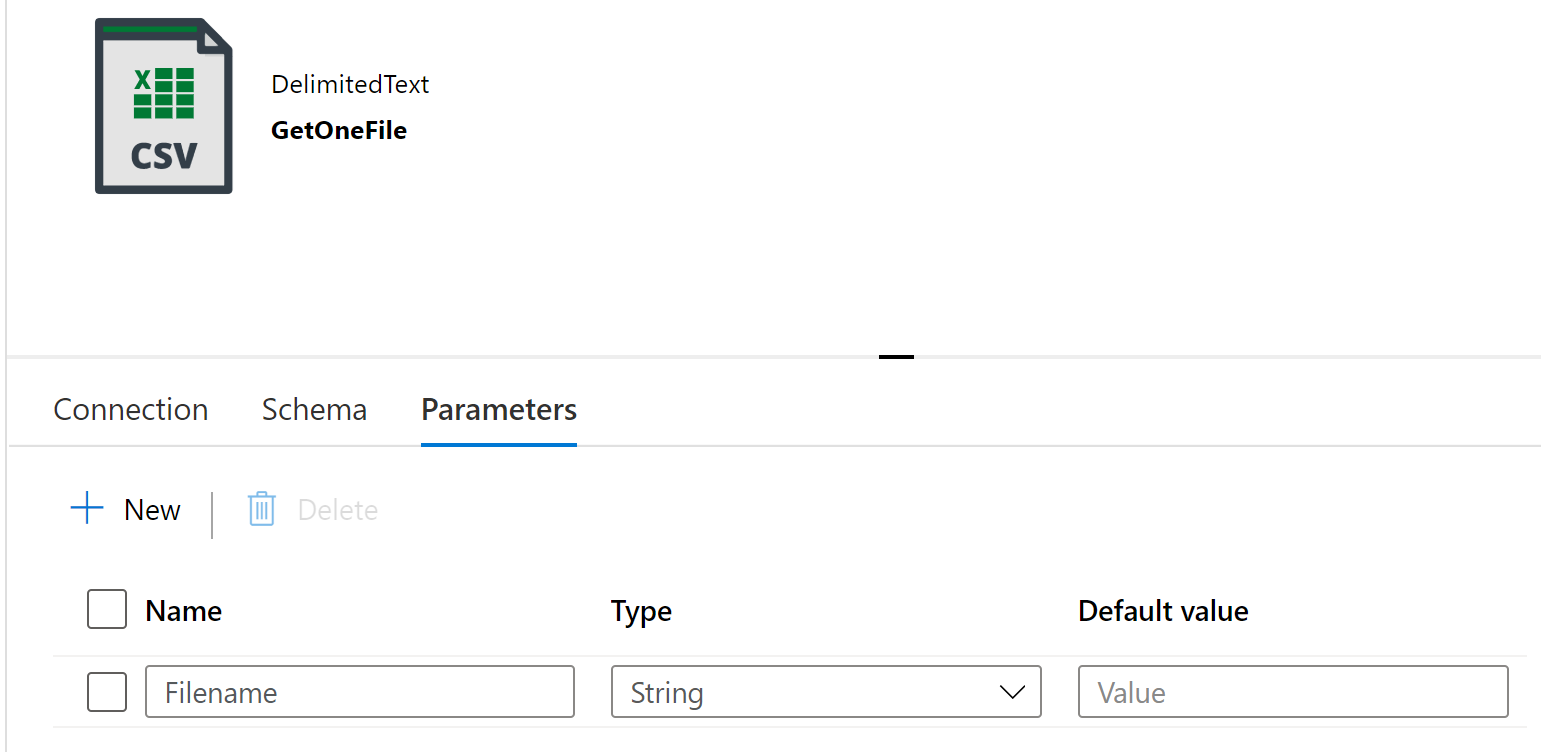

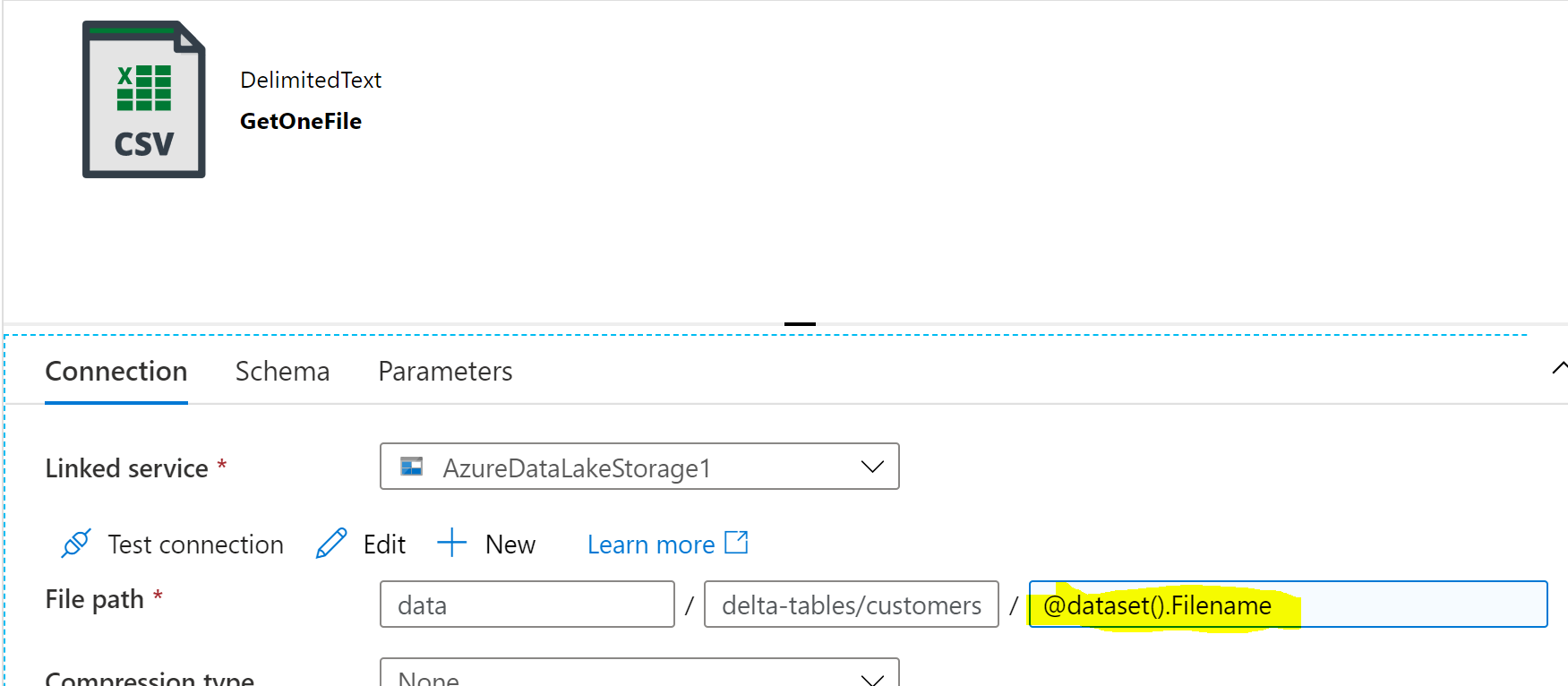

Items: @activity('Exclude Folders').output.ValueParameterize the dataset for copy activity.

Pass file name to copy activity