Hello @braxx,

Thanks for asking and using Microsoft Q&A.

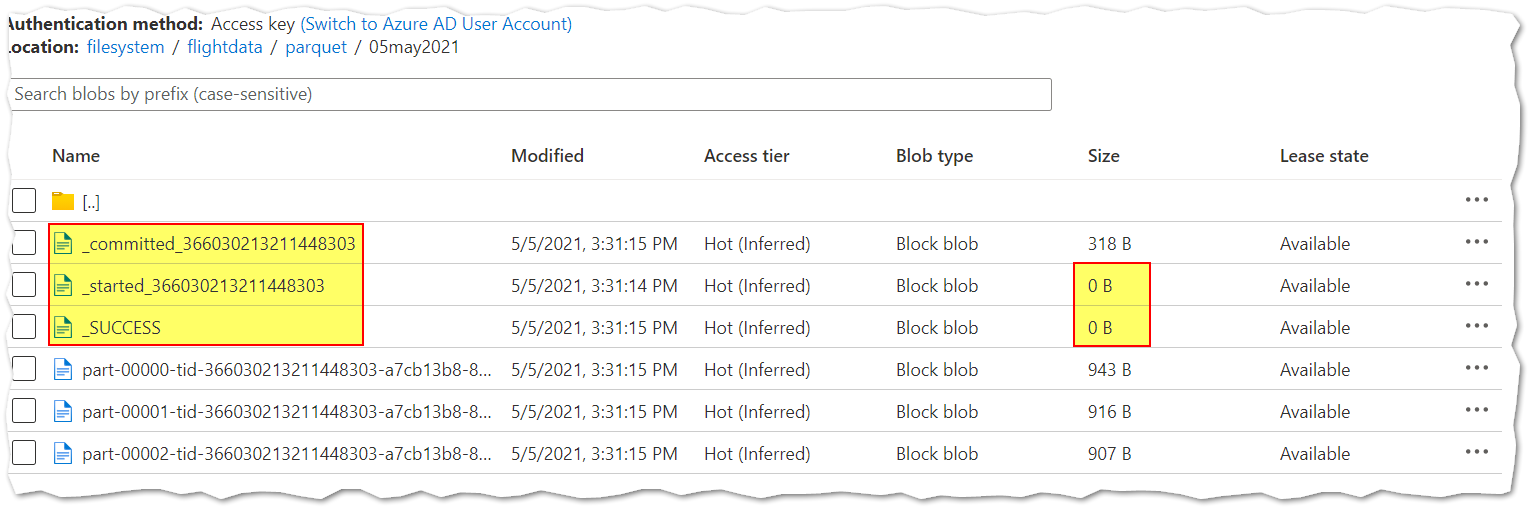

This is expected behaviour: any Spark job that writes output creates these files.

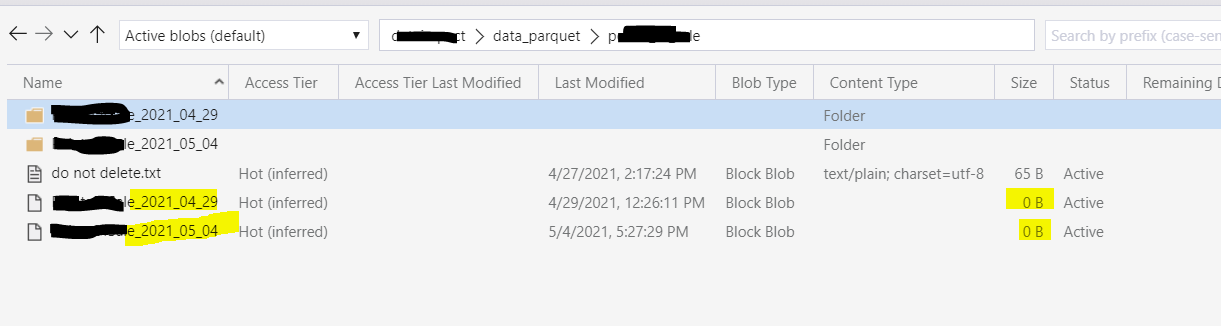

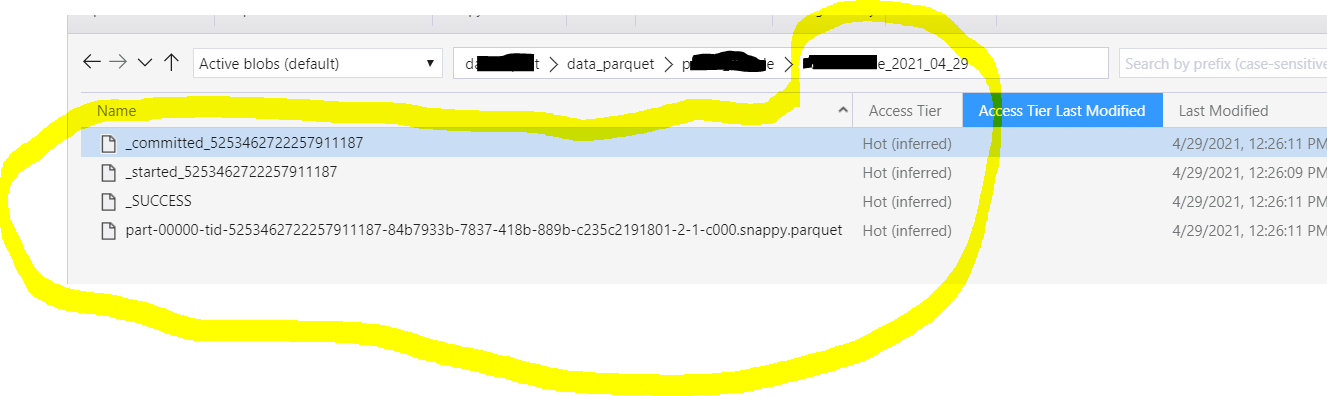

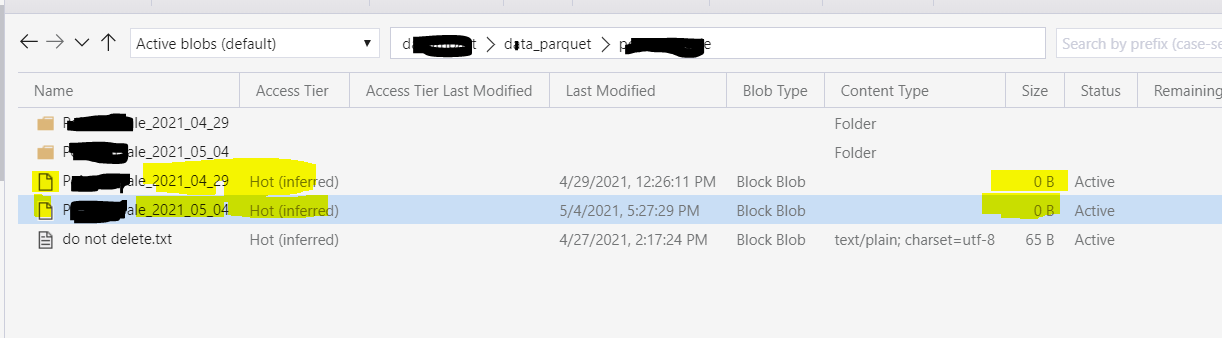

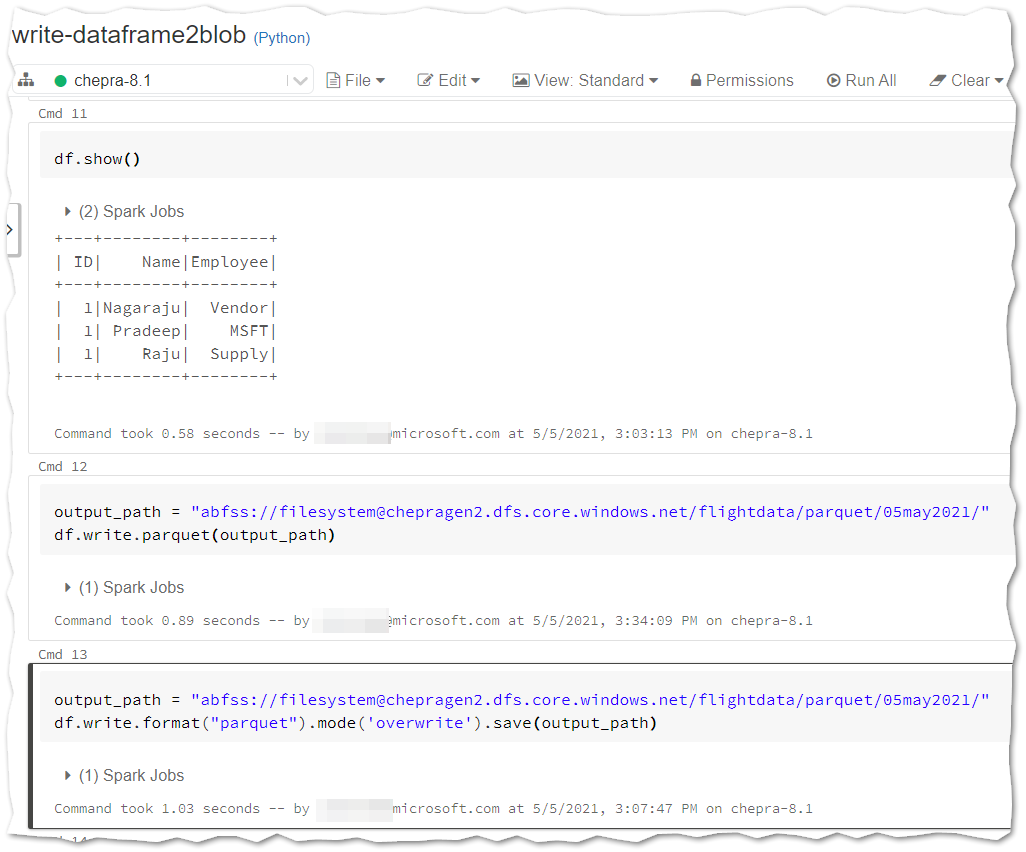

Expected output:

When DBIO transactional commit is enabled, metadata files starting with `_started_<id>` and `_committed_<id>` accompany the data files created by Apache Spark jobs. Generally you shouldn't alter these files directly. Instead, use the VACUUM command to clean them up.
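To make the naming concrete, here is a minimal Python sketch that sorts a flat directory listing into DBIO commit markers, other "_"-prefixed summary files, and data files. The function `classify_output_files` and its regular expression are hypothetical and assume the `_started_<id>` / `_committed_<id>` naming; on Databricks you could feed it something like `[f.name for f in dbutils.fs.ls(path)]`, where `path` is your output directory.

```python
import re
from typing import Dict, Iterable, List

# Hypothetical pattern for the DBIO commit markers (_started_<id>, _committed_<id>).
# Assumption: the <id> part is any non-empty suffix; the exact format may vary.
DBIO_MARKER = re.compile(r"^_(started|committed)_.+$")

# Other "_"-prefixed files mentioned in this answer.
SUMMARY_FILES = {"_SUCCESS", "_metadata", "_common_metadata"}


def classify_output_files(names: Iterable[str]) -> Dict[str, List[str]]:
    """Split file names into DBIO markers, summary files and data files."""
    result: Dict[str, List[str]] = {"dbio_markers": [], "summary": [], "data": []}
    for name in names:
        if DBIO_MARKER.match(name):
            result["dbio_markers"].append(name)
        elif name in SUMMARY_FILES:
            result["summary"].append(name)
        else:
            # Anything else (e.g. part-*.parquet) is treated as a data file.
            result["data"].append(name)
    return result
```

Running it on a listing before and after changing the settings below is one way to see which "_" files are still being written.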

Combining the three properties below disables writing all of the transactional files whose names start with "_".

- We can disable the transaction files of a Spark Parquet write by setting `spark.sql.sources.commitProtocolClass=org.apache.spark.sql.execution.datasources.SQLHadoopMapReduceCommitProtocol`. This disables the `_committed_<TID>` and `_started_<TID>` files, but the `_SUCCESS`, `_common_metadata` and `_metadata` files are still generated.

- We can disable the `_common_metadata` and `_metadata` files with `parquet.enable.summary-metadata=false`.

- We can also disable the `_SUCCESS` file with `mapreduce.fileoutputcommitter.marksuccessfuljobs=false`.
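As a minimal Python sketch, the three settings can be applied from a notebook before the write. `NO_UNDERSCORE_FILES_CONF` and `apply_conf` are hypothetical names; the sketch assumes you pass in `spark.conf.set` and that session-level values reach the Hadoop configuration used by the write. If they do not in your environment, the two Hadoop properties can instead go into the cluster's Spark config with the `spark.hadoop.` prefix.

```python
from typing import Callable, Dict

# The three properties from this answer (hypothetical constant name).
NO_UNDERSCORE_FILES_CONF: Dict[str, str] = {
    # Plain Hadoop commit protocol instead of DBIO: no _started_/_committed_ files.
    "spark.sql.sources.commitProtocolClass":
        "org.apache.spark.sql.execution.datasources.SQLHadoopMapReduceCommitProtocol",
    # No Parquet summary files (_metadata, _common_metadata).
    "parquet.enable.summary-metadata": "false",
    # No _SUCCESS marker from the output committer.
    "mapreduce.fileoutputcommitter.marksuccessfuljobs": "false",
}


def apply_conf(
    set_conf: Callable[[str, str], None],
    conf: Dict[str, str] = NO_UNDERSCORE_FILES_CONF,
) -> None:
    """Apply each key/value pair with the given setter, e.g. spark.conf.set."""
    for key, value in conf.items():
        set_conf(key, value)
```

In a notebook this would be `apply_conf(spark.conf.set)` followed by the usual write, for example `df.write.mode("overwrite").parquet(output_path)`, where `df` and `output_path` are placeholders. Passing the setter in keeps the helper independent of a live Spark session.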

For more details, refer to "Transactional Writes to Cloud Storage with DBIO", "Stop Azure Databricks auto creating files" and "How do I prevent _success and _committed files in my write output?".

Hope this helps. Do let us know if you have any further queries.
