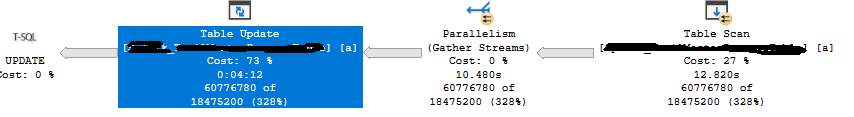

Any reason why the update statement cannot go parallel? anything can be done to make the update table operator go parallel in the update statement?

I have not worked enough with updates of this size to be able to speak with authority. (And one reason I have not worked a lot with them is that I tend to introduce batching from the start.) But permit me to think aloud a little bit.

For parallelism to be possible, it must be possible to somehow partition the table. And then I don't mean partitioning in terms of the feature of that name, just an ad-hoc partition. The reason for this is that if multiple threads are working on the table, they need to have

their own isolated space to work in. But that is kind of difficult to do in a heap, since the rows are physically located in arbitrary order.

Yes, threads can just be partitioned on some range on physical pages, and this is the case with the source. Here the pages have been partitioned. But there is no reason assume that the distribution of the source rows agree with the distribution of the target rows - in fact that sounds highly unlikely.

Sure, the threads just go and locate their rows in the target, and to not block each other they would have to take out tons of row locks. And if they need to add forward pointers, I guess they need to latch pages for a longer time. All and all, this is not that likely to be that efficient.

Not that I know what SQL Server can do, but say that both source and target table had had a clustered index on the same column. In this case, the partitions of the source table will match the target table. It goes without saying that the clustered index should not be on a column which you perform an update.

And speaking of clustered index - to be able to do batching, you will need indexes, and for batching of this size, you almost need to have a clustered index. But, yes, it takes time to create the clustered index to.