My colleague Siva found a solution today. I am posting it here in the hope that it helps someone else:

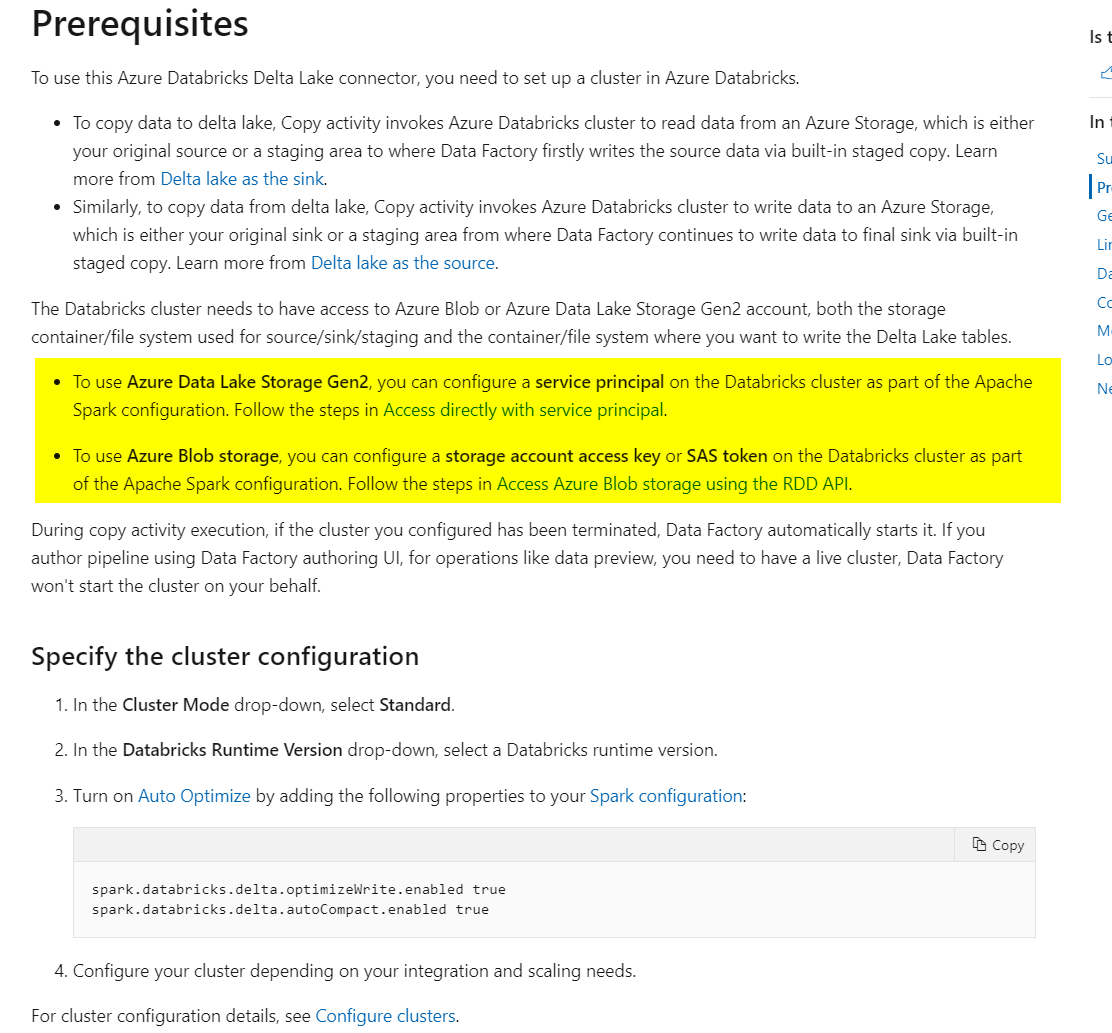

Basically, we need to enable staging to copy data from Delta Lake, and for that the Databricks cluster needs the staging storage account key in its Spark config. In Databricks, open the Spark cluster, click Edit, and go to the advanced options. Leave whatever is already in the Spark config as is and add the entry described in the comment below:

> Spark Config: Edit the Spark config by entering the connection information for your Azure Storage account.
>
> This will allow your cluster to access the lab files. Enter the following:
>
> `spark.hadoop.fs.azure.account.key.<STORAGE_ACCOUNT_NAME>.blob.core.windows.net <ACCESS_KEY>`
>
> where `<STORAGE_ACCOUNT_NAME>` is your Azure Storage account name and `<ACCESS_KEY>` is your storage access key.
>
> Example: `spark.hadoop.fs.azure.account.key.bigdatalabstore.blob.core.windows.net HD+91Y77b+TezEu1lh9QXXU2Va6Cjg9bu0RRpb/KtBj8lWQa6jwyA0OGTDmSNVFr8iSlkytIFONEHLdl67Fgxg==`
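If you prefer to prepare the entry in a script instead of typing it by hand, the two steps described above (build the `key value` line for the storage account, then add it while leaving the existing config as is) can be sketched in Python. This is a minimal sketch under assumptions: the helper names `storage_key_conf` and `merge_spark_conf` are hypothetical, the existing config is treated as the plain text of the Spark config box with one `key value` pair per line, and `mystagingaccount` and `spark.sql.shuffle.partitions 64` are placeholder values only. It just produces the text to paste into the cluster settings; it does not call any Databricks API.

```python
import re

# Azure storage account names are 3-24 characters of lowercase letters and digits.
_ACCOUNT_NAME = re.compile(r"[a-z0-9]{3,24}")


def storage_key_conf(account_name: str, access_key: str) -> tuple[str, str]:
    """Hypothetical helper: return the (key, value) Spark config pair
    that gives the cluster key-based access to a Blob storage account."""
    if not _ACCOUNT_NAME.fullmatch(account_name):
        raise ValueError(f"not a valid storage account name: {account_name!r}")
    if not access_key:
        raise ValueError("access key must not be empty")
    key = f"spark.hadoop.fs.azure.account.key.{account_name}.blob.core.windows.net"
    return key, access_key


def merge_spark_conf(existing: str, key: str, value: str) -> str:
    """Hypothetical helper: add or update one `key value` line in the
    Spark config text, keeping every other line exactly as it was."""
    lines = existing.splitlines()
    new_line = f"{key} {value}"
    for i, line in enumerate(lines):
        parts = line.split(None, 1)  # "<key> <value>": compare only the key part
        if parts and parts[0] == key:
            lines[i] = new_line  # key already present: replace only its value
            return "\n".join(lines)
    lines.append(new_line)  # new key: append after the existing entries
    return "\n".join(lines)


if __name__ == "__main__":
    current = "spark.sql.shuffle.partitions 64"  # placeholder existing config
    key, value = storage_key_conf("mystagingaccount", "<ACCESS_KEY>")
    print(merge_spark_conf(current, key, value))
```

Replacing an existing line for the same key, rather than appending a second one, keeps the config to one entry per storage account if you later rotate the key.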