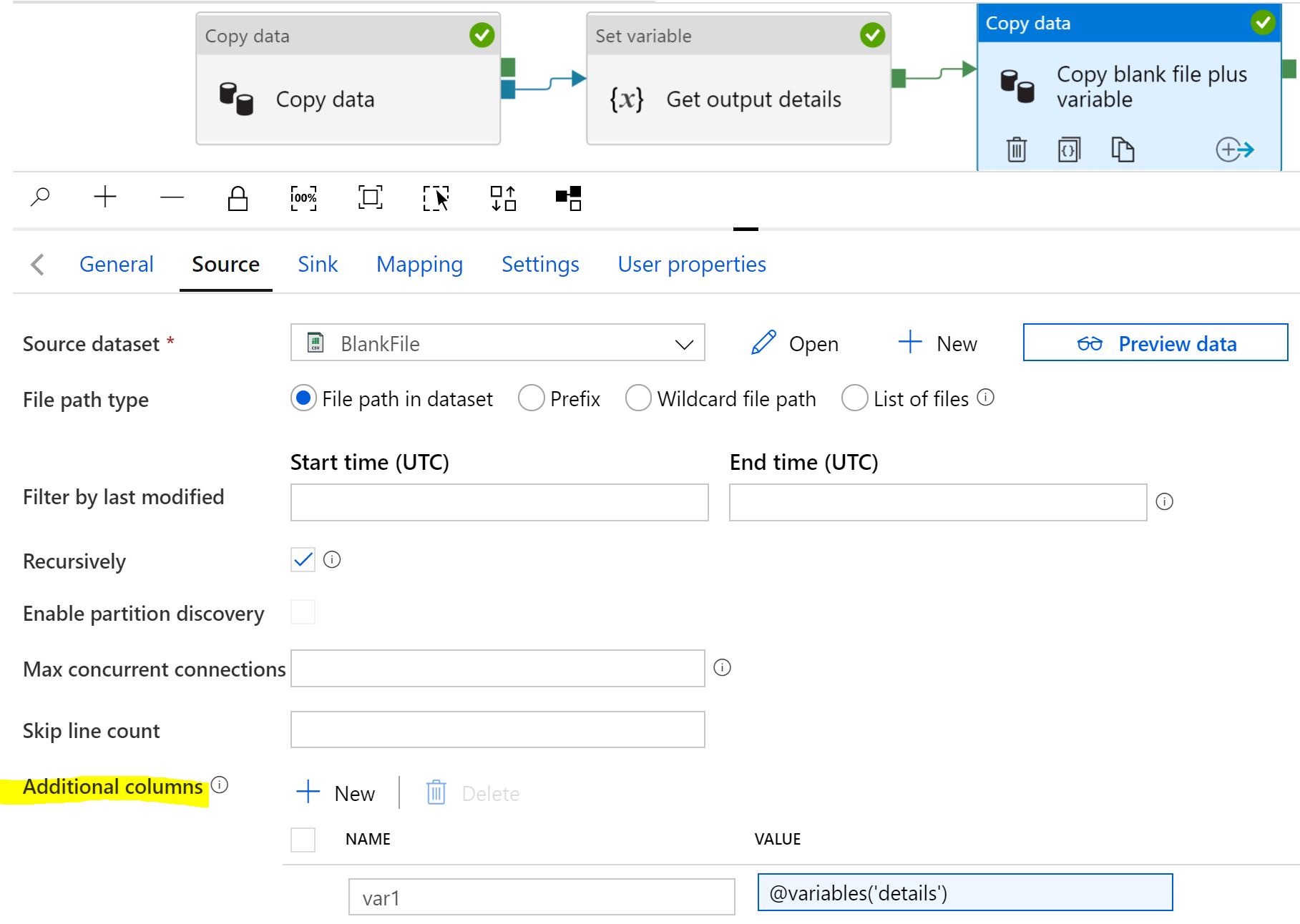

I found a way to write the output to a file: I used the "Additional columns" feature of the Copy activity source when copying from a DelimitedText dataset. See the picture above.

After the main Copy activity completes, I take the details I want from the output you shared and store them in a String variable with a Set variable activity.
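To make the Set variable step concrete, here is a small Python sketch of what it does conceptually: pick one field from the Copy activity output and turn it into a single string. The `sample_output` dictionary is hypothetical; only the `dataConsistencyVerification` key comes from this answer, and a real run returns many more fields. `json.dumps` stands in for the way `@{...}` string interpolation turns an object into text. That is an assumption, and the exact spacing ADF produces may differ.

```python
import json

# Hypothetical stand-in for the Copy activity output (illustrative values only;
# a real run returns many more fields).
sample_output = {
    "rowsRead": 100,
    "rowsCopied": 100,
    "dataConsistencyVerification": {
        "VerificationResult": "Verified",
        "InconsistentData": "None",
    },
}


def details_to_string(output: dict, key: str) -> str:
    """Return one field of the output as text, the way a String variable would hold it."""
    value = output[key]
    # Objects and arrays become compact JSON text; scalars are converted with str().
    if isinstance(value, (dict, list)):
        return json.dumps(value, separators=(",", ":"))
    return str(value)


copy_details = details_to_string(sample_output, "dataConsistencyVerification")
print(copy_details)  # {"VerificationResult":"Verified","InconsistentData":"None"}
```

Inspecting the variable's value in the debug output of the Set variable activity is the ADF equivalent of that `print`.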

Then I run a second Copy activity. Its source is an almost blank CSV file, and it uses the "Additional columns" feature to add the variable as a new column, then writes the combined result to a new CSV file.
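The second Copy activity's effect on the data can be sketched in Python: every row of the source file is copied unchanged, and one extra column holding the variable's value is appended. `BLANK_CSV`, the `Dummy` header and the `CopyDetails` column name are hypothetical. The sketch assumes the source has one data row, so exactly one output row carries the value.

```python
import csv
import io

# Hypothetical "almost blank" source file: a header and a single placeholder row.
BLANK_CSV = "Dummy\n1\n"


def add_column(source_text: str, column_name: str, value: str) -> str:
    """Copy every row of source_text and append one extra column holding `value`."""
    reader = csv.reader(io.StringIO(source_text))
    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")
    header = next(reader)
    writer.writerow(header + [column_name])  # header gains the new column name
    for row in reader:
        writer.writerow(row + [value])       # each data row gains the same value
    return out.getvalue()


result = add_column(BLANK_CSV, "CopyDetails", '{"VerificationResult":"Verified"}')
print(result)
```

The value contains quotes, so the writer has to quote it. Python's `csv` module doubles embedded quotes, whereas ADF's DelimitedText dataset applies its own quote and escape character settings, so the exact text in your output file may look different.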

It is possible to skip the Set variable step and reference the details directly in the additional column, but I find that the Set variable step makes debugging easier.

Which expression to use for getting the details depends upon what you want to capture.

If you want to capture only the data consistency verification, the expression could look like `@{activity('Copy data').output.dataConsistencyVerification}`.

You probably also want to capture the activity run ID, `@{activity('Copy data').activityRunId}`, or the pipeline run ID, `@{pipeline().RunId}`.
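If you capture several details, the "Additional columns" list accepts more than one entry, so each detail can get its own column instead of being packed into one string. The Python sketch below shows the resulting file shape under that assumption. The column names and GUID-like values are hypothetical placeholders for what the expressions would return at run time.

```python
import csv
import io

# Hypothetical values standing in for what these expressions return at run time:
#   ActivityRunId   <- @{activity('Copy data').activityRunId}
#   PipelineRunId   <- @{pipeline().RunId}
#   DataConsistency <- @{activity('Copy data').output.dataConsistencyVerification}
details = {
    "ActivityRunId": "00000000-0000-0000-0000-000000000001",
    "PipelineRunId": "00000000-0000-0000-0000-000000000002",
    "DataConsistency": '{"VerificationResult":"Verified"}',
}


def write_details_row(details: dict) -> str:
    """Write a header row plus one data row with a column per captured detail."""
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=list(details), lineterminator="\n")
    writer.writeheader()
    writer.writerow(details)
    return out.getvalue()


print(write_details_row(details))
```

Separate columns are easier to filter or join on later, for example matching the log file back to a pipeline run by its run ID.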