Hi @CoffeeCanuck,

Welcome to the Microsoft Q&A forum, and thanks for reaching out.

As I understand it, your requirement is to move specific types of files to their respective folders in the sink. If that is the case, here are two options.

Option 1:

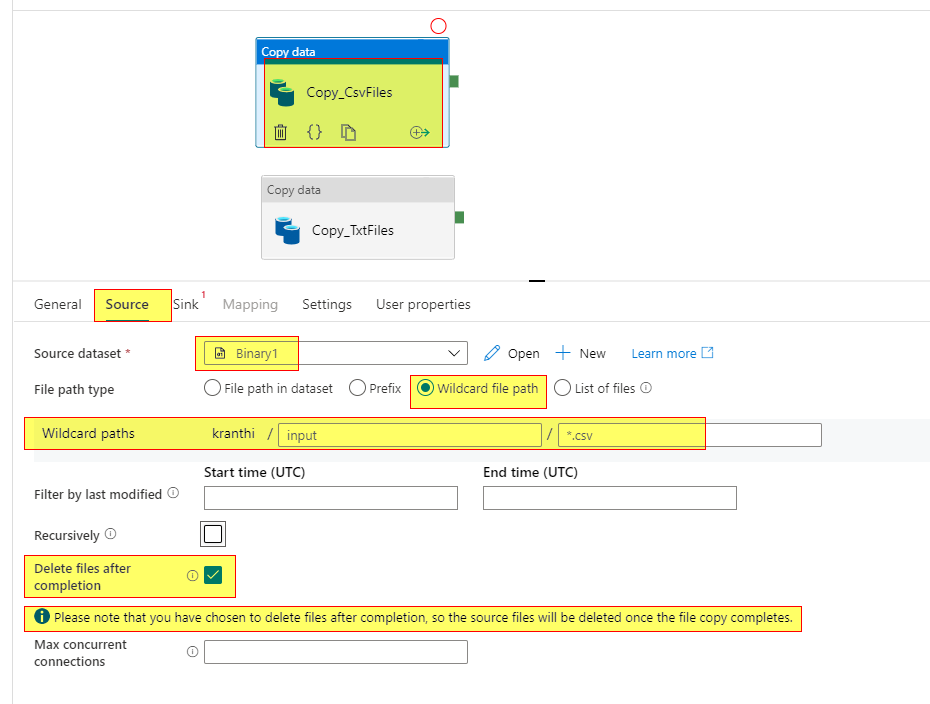

The simplest way is to create a pipeline with multiple parallel Copy activities and use a Binary dataset on both the source and sink sides. Then use a wildcard file name in the source settings to filter a particular type of file from a folder in ADLS Gen2. With this approach you need one Copy activity for each file type (*.csv, *.txt, and so on).
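
To make the wildcard behaviour concrete, here is a small local Python simulation (not ADF code) of what each Copy activity's wildcard file name would select. The file paths and the `select_files` helper are hypothetical, and the matching uses Python's `fnmatch` module, which may not reproduce ADF's wildcard rules in every edge case.

```python
from fnmatch import fnmatchcase
from posixpath import basename, dirname

# Hypothetical file paths in an ADLS Gen2 container, for illustration only.
FILES = [
    "input/a.csv",
    "input/b.txt",
    "input/c.csv",
    "input/archive/old.csv",
]


def select_files(paths, folder, wildcard, recursive=False):
    """Return the paths under `folder` whose file name matches `wildcard`.

    recursive=False keeps only files directly inside `folder`, similar to
    leaving the "Recursively" option unchecked in the Copy activity source.
    """
    folder = folder.rstrip("/")
    selected = []
    for path in paths:
        parent = dirname(path)
        if recursive:
            in_scope = parent == folder or parent.startswith(folder + "/")
        else:
            in_scope = parent == folder
        # fnmatchcase gives the same case-sensitive matching on every OS.
        if in_scope and fnmatchcase(basename(path), wildcard):
            selected.append(path)
    return selected


# One call per wildcard stands in for one Copy activity per file type.
for pattern in ("*.csv", "*.txt"):
    print(pattern, select_files(FILES, "input", pattern))
```

With `recursive=True`, the `*.csv` pattern would also pick up `input/archive/old.csv`, which is why the subfolder contents stay put only when recursion is off.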

Option 2:

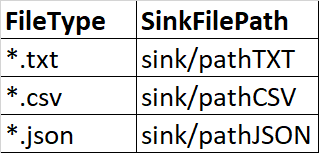

If you want to make this more dynamic with a single Copy activity, an alternative is to keep a reference SQL table or reference file with two columns (FileType, SinkFilepath) and look up the values from it.

Sample table as below:

Then add a Lookup activity that reads the reference table or file and retrieves the file type values and sink file paths. Pass the Lookup output to a subsequent ForEach activity that contains a Copy activity, and map the lookup output values to the Copy activity's source wildcard file name setting and sink file path setting.
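
The Lookup/ForEach flow can be sketched as a local Python simulation (not ADF code). It assumes the Lookup runs with "First row only" unchecked, so its rows arrive as a list (in ADF, `@activity('<lookup name>').output.value`), and that the FileType column stores the wildcard itself, for example `*.csv`. The row values, folder names and the `plan_moves` helper are hypothetical. Inside the ForEach, the Copy activity would read the current row with expressions such as `@item().FileType` and `@item().SinkFilepath`.

```python
from fnmatch import fnmatchcase
from posixpath import basename, dirname, join

# Hypothetical reference rows, shaped like the Lookup activity's output.value.
REFERENCE_ROWS = [
    {"FileType": "*.csv", "SinkFilepath": "output/csv"},
    {"FileType": "*.txt", "SinkFilepath": "output/txt"},
]

# Hypothetical files in the source folder.
SOURCE_FILES = ["input/a.csv", "input/b.txt", "input/c.json", "input/sub/d.csv"]


def plan_moves(rows, source_files, source_folder="input"):
    """Return (source, destination) pairs, one ForEach iteration per row."""
    moves = []
    for row in rows:  # plays the role of item() inside the ForEach
        for path in source_files:
            # Non-recursive: skip files in subfolders of source_folder.
            if dirname(path) != source_folder:
                continue
            # Source wildcard file name <- row["FileType"]
            if fnmatchcase(basename(path), row["FileType"]):
                # Sink folder path <- row["SinkFilepath"]
                moves.append((path, join(row["SinkFilepath"], basename(path))))
    return moves


for source, destination in plan_moves(REFERENCE_ROWS, SOURCE_FILES):
    print(source, "->", destination)
```

Files with no matching row (`c.json`) and files in subfolders (`sub/d.csv`) are left in place. Supporting a new file type then only means adding a row to the reference table, not adding another activity.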

Note:

- Please make sure to uncheck the **Recursively** option in the Copy activity source settings so that only files directly under the specified folder are moved to the sink.
- Make sure you select a Binary dataset in order to delete the files from the source after copying them to the sink location (that is, to move the files).

The reason for suggesting the above solutions is that they avoid multiple extra activities, such as Filter and ForEach activities, in your pipeline, which also saves cost. If you would rather use a Filter activity to filter items whose type is File and whose name ends with '.csv', you will need one Filter activity for each file type (.csv/.txt/.json), each followed by a ForEach activity containing a Copy activity.
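
For comparison, the Filter-based alternative would take the Get Metadata activity's `childItems` (each entry has a `name` and a `type` of `File` or `Folder`) and apply a condition such as `@and(equals(item().type, 'File'), endsWith(item().name, '.csv'))`. The Python sketch below mimics that condition locally on hypothetical items; because the extension is fixed inside each condition, you end up with one filter per file type. Python's `str.endswith` is case-sensitive, so check the ADF expression documentation for how `endsWith` treats case.

```python
# Hypothetical childItems, shaped like the Get Metadata activity output.
CHILD_ITEMS = [
    {"name": "a.csv", "type": "File"},
    {"name": "b.txt", "type": "File"},
    {"name": "backup.csv", "type": "Folder"},
    {"name": "c.csv", "type": "File"},
]


def filter_items(child_items, extension):
    """Mimic one Filter activity: keep files whose name ends with `extension`."""
    return [
        item
        for item in child_items
        if item["type"] == "File" and item["name"].endswith(extension)
    ]


# One filter per file type, each of which would feed its own ForEach + Copy.
for ext in (".csv", ".txt", ".json"):
    print(ext, [item["name"] for item in filter_items(CHILD_ITEMS, ext)])
```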

Hope this info helps. Do let us know if you have any further queries.

----------

Please don’t forget to Accept Answer and Up-Vote wherever the information provided helps you, as this can be beneficial to other community members.