Hello @,

Thanks for the question and for using the Microsoft Q&A platform.

I tried the snippet below in two notebook cells and it worked. Please let me know how it goes.

Cell 1

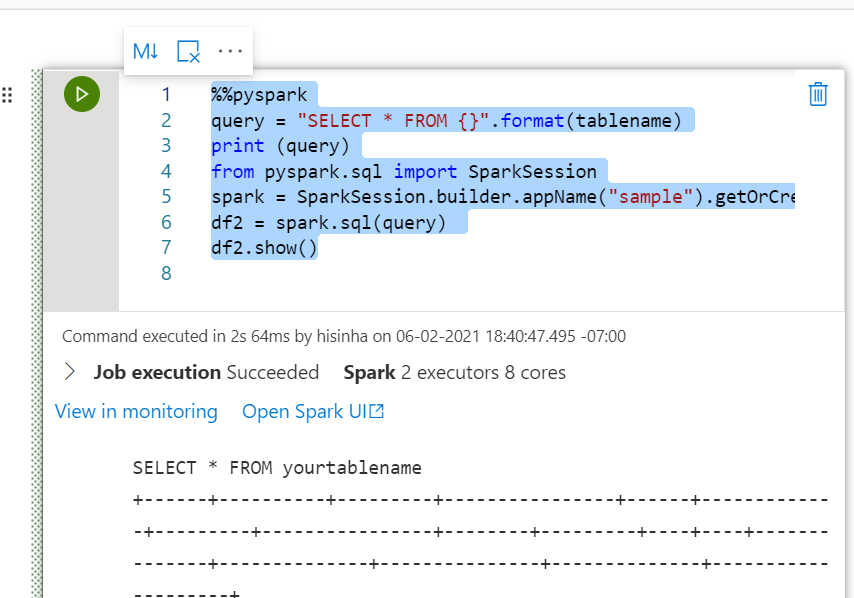

%%pyspark

tablename = "yourtablename"

Cell 2

%%pyspark

query = "SELECT * FROM {}".format(tablename)

print(query)

from pyspark.sql import SparkSession

spark = SparkSession.builder.appName("sample").getOrCreate()

df2 = spark.sql(query)

df2.show()
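If the table name comes from a parameter or user input rather than a hard-coded string, it may be worth checking it before formatting it into the SQL text. Below is a minimal sketch of that idea, assuming table names are plain identifiers (optionally `database.table`). `build_query` is a hypothetical helper name used only for illustration; it is not part of Spark or Synapse.

```python
import re

# Assumption: a valid table name is a plain identifier, optionally
# qualified as database.table. Adjust the pattern if your names differ.
_IDENTIFIER = re.compile(r"[A-Za-z_][A-Za-z0-9_]*(\.[A-Za-z_][A-Za-z0-9_]*)?")


def build_query(tablename):
    """Hypothetical helper: return a SELECT statement for a validated table name."""
    if not _IDENTIFIER.fullmatch(tablename):
        raise ValueError("unexpected table name: {!r}".format(tablename))
    return "SELECT * FROM {}".format(tablename)


tablename = "yourtablename"
query = build_query(tablename)
print(query)
```

In cell 2 you could then pass the result to `spark.sql(query)` exactly as in the snippet above.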

Thanks

Himanshu

Please consider clicking "Accept Answer" and "Up-vote" on the post that helps you, as it can be beneficial to other community members.